The CloudLab Manual

CloudLab is a "meta-cloud"—

The current CloudLab deployment consists of more than 33,000 cores in over 1800 allocatable nodes distributed across three sites at the University of Wisconsin, Clemson University, and the University of Utah. CloudLab interoperates with existing testbeds including GENI and Emulab, to take advantage of hardware at dozens of sites around the world.

The control software for CloudLab is open source, and is built on the foundation established for Emulab, GENI, and Apt. Pointers to the details of this control system can be found on CloudLab’s technology page.

1 Getting Started

This chapter will walk you through a simple experiment on CloudLab and introduce you to some of its basic concepts.

Start by pointing your browser at https://www.cloudlab.us/.

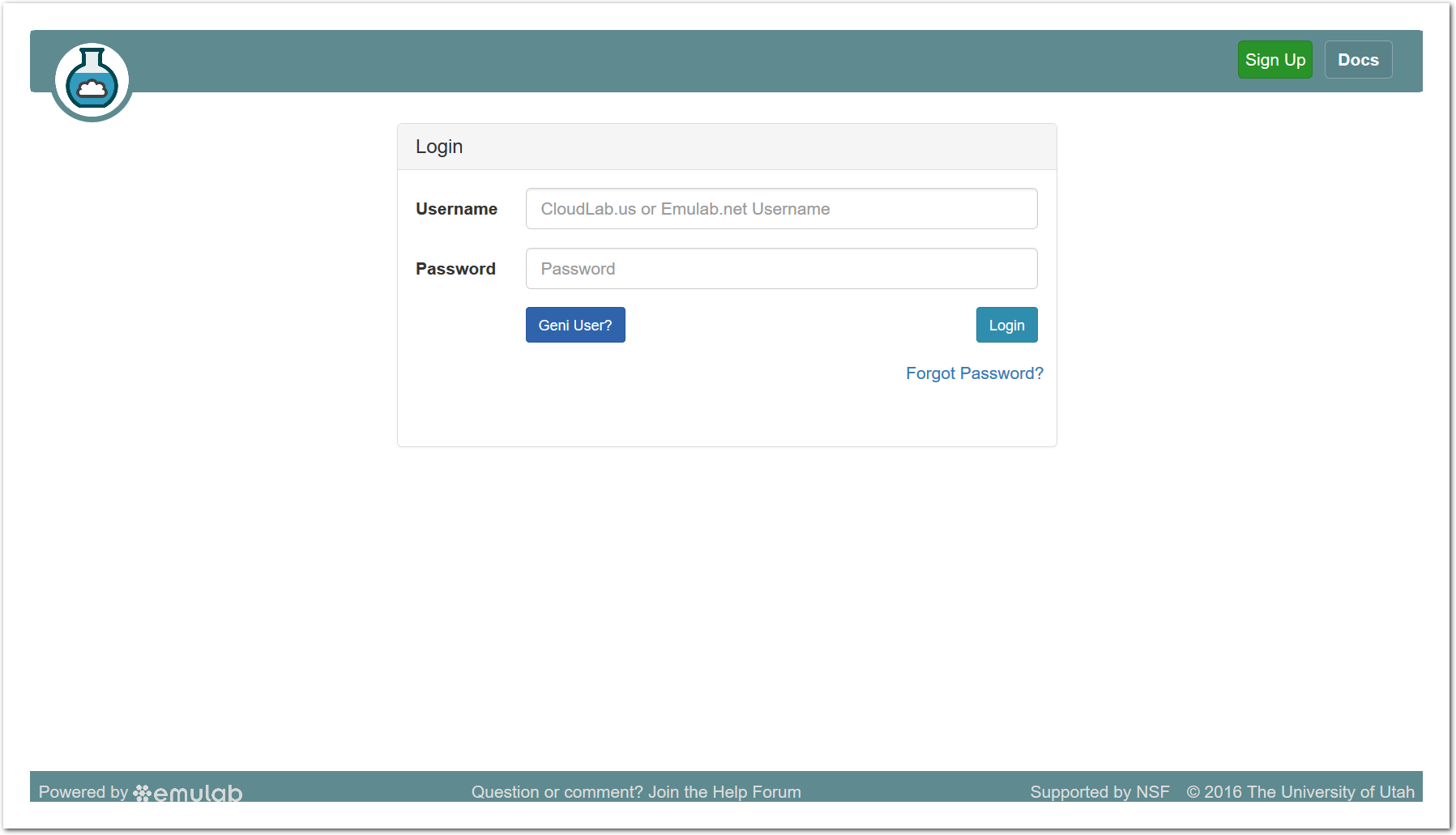

- Log inYou’ll need an account to use CloudLab. If you already have an account on Emulab.net, you may use that username and password. Or, if you have an account at the GENI portal, you may use the “GENI User” button to log in using that account. If not, you can apply to start a new project at https://www.cloudlab.us/signup.php and taking the "Start New Project" option. See the chapter about CloudLab users for more details about user accounts.

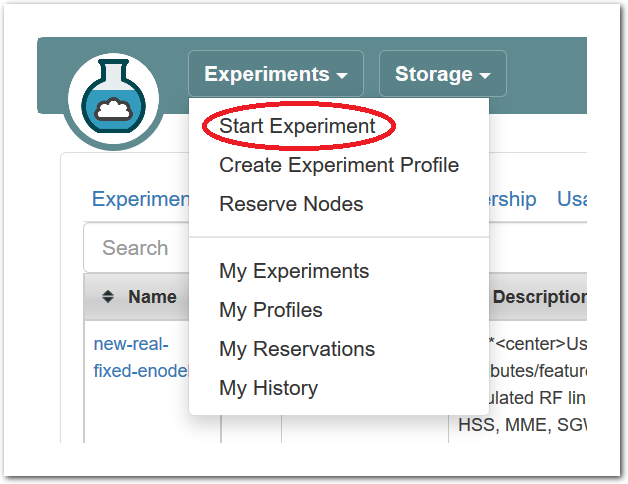

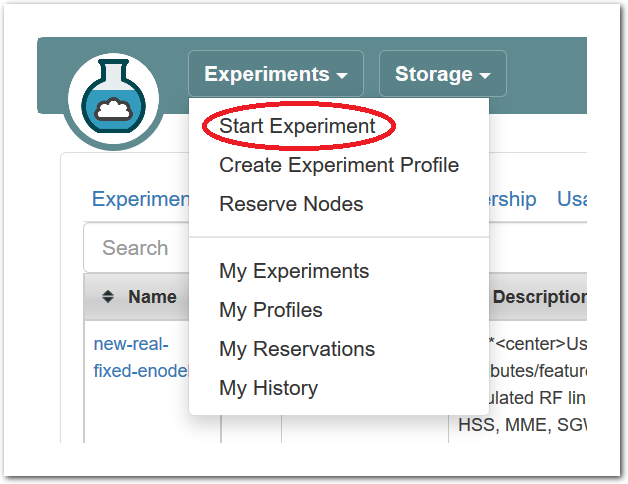

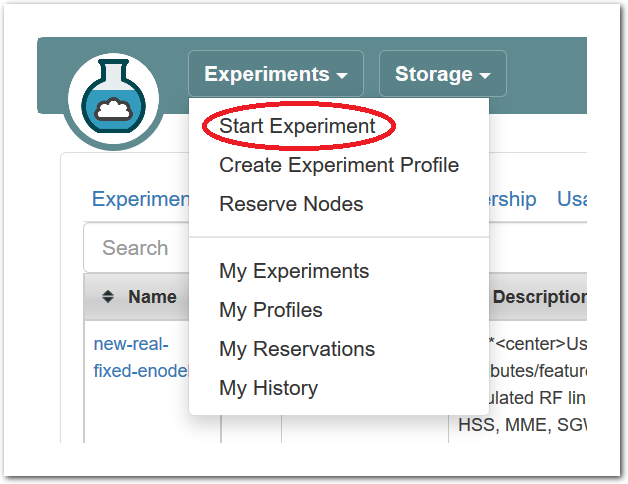

- Start ExperimentFrom the top menu, click “Experiments” and then “Start Experiment” to begin.

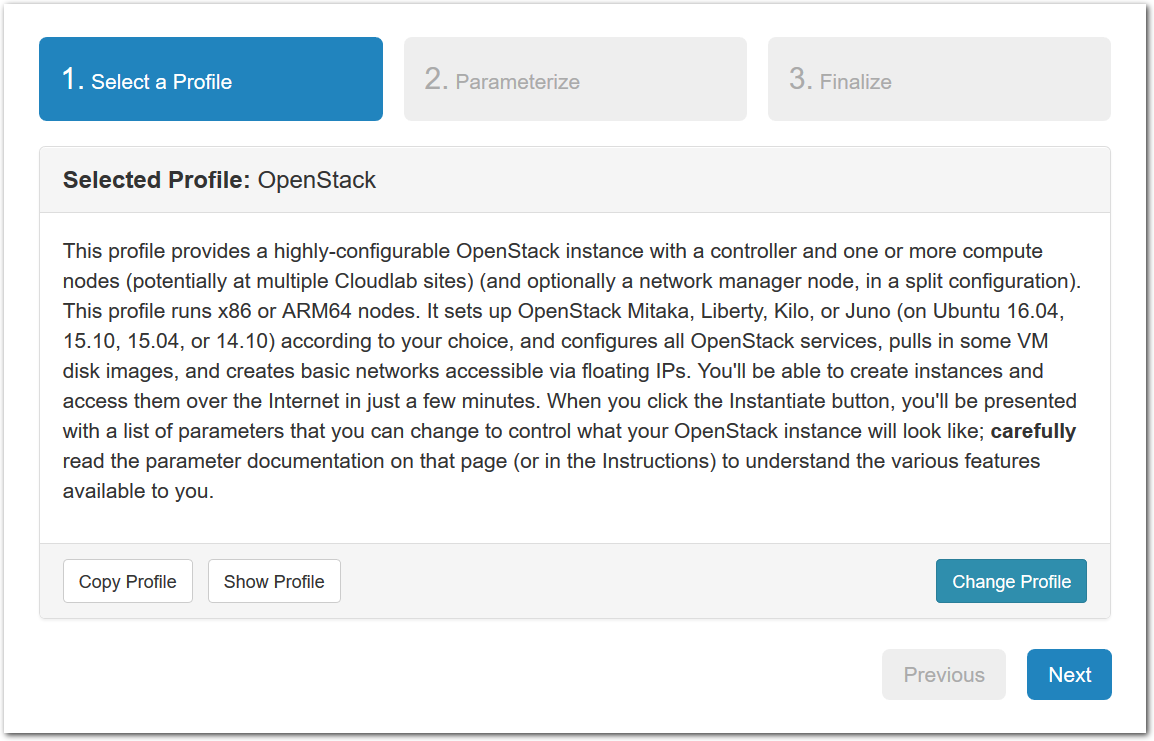

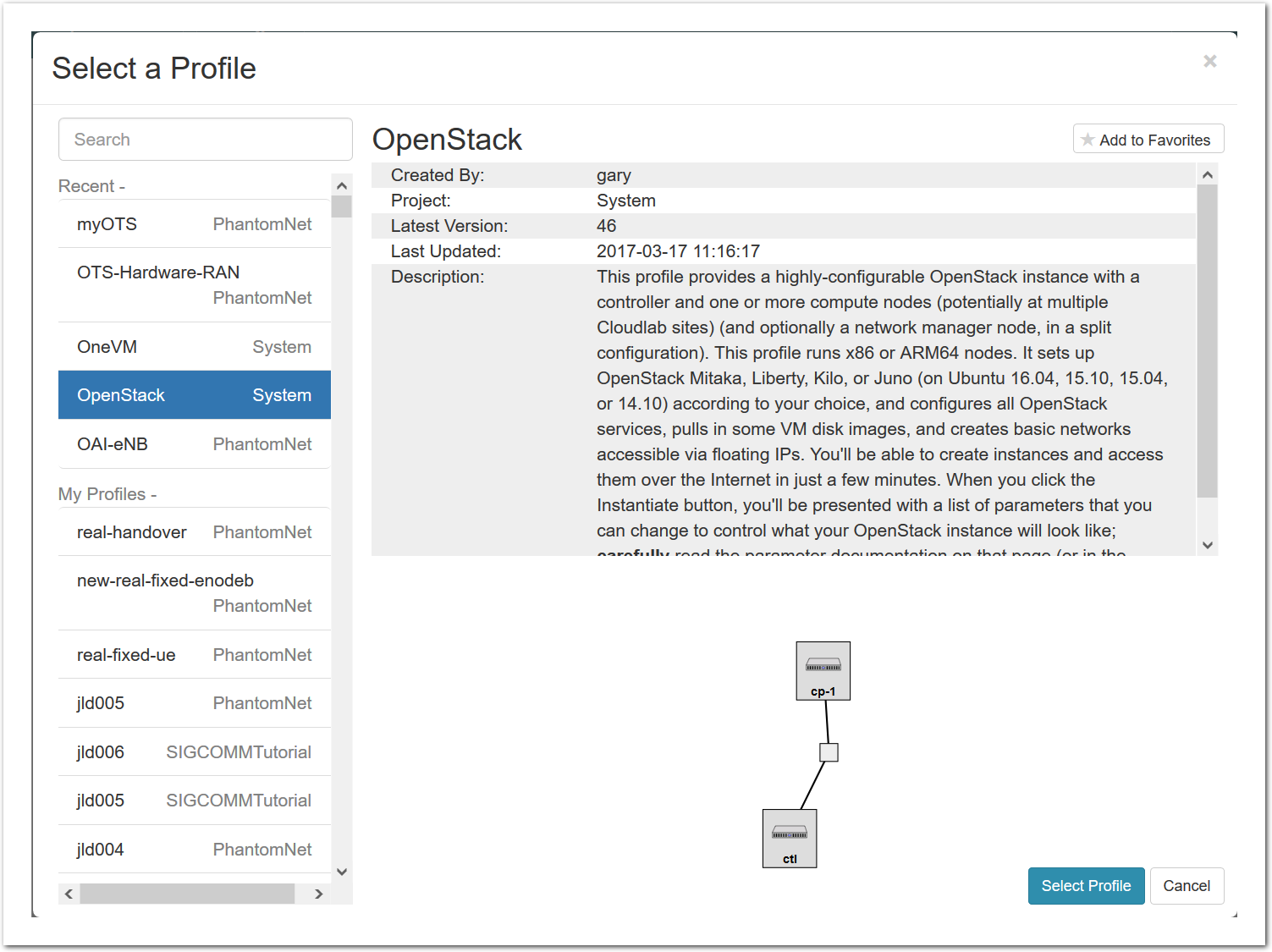

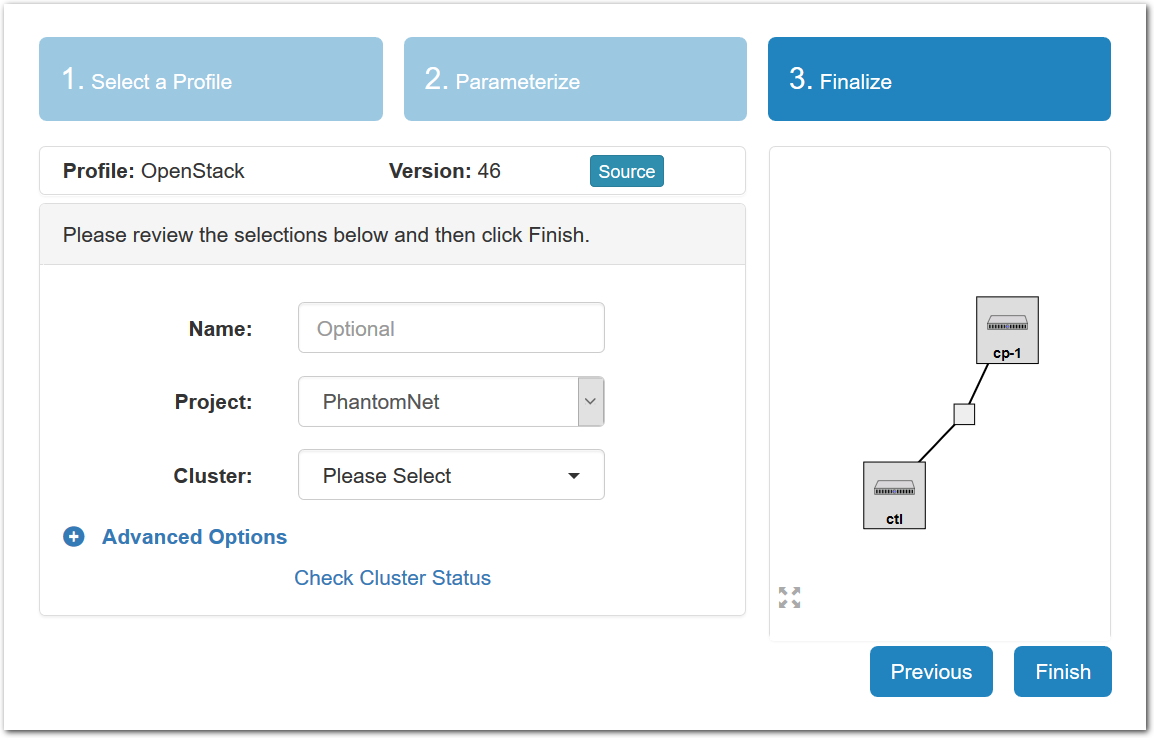

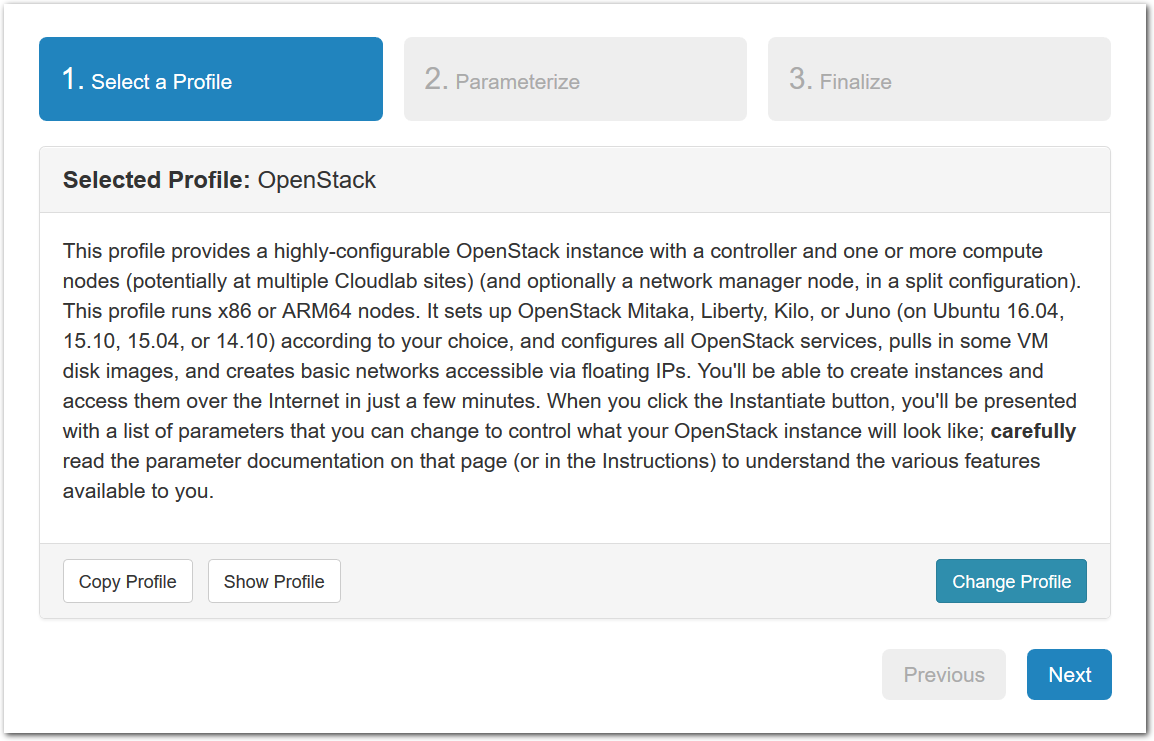

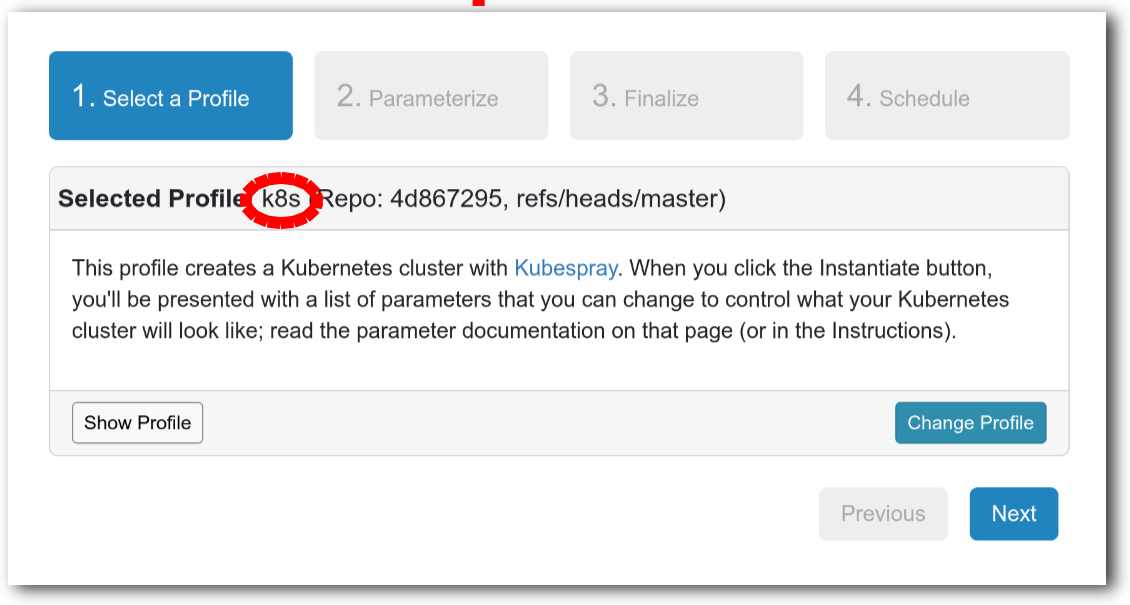

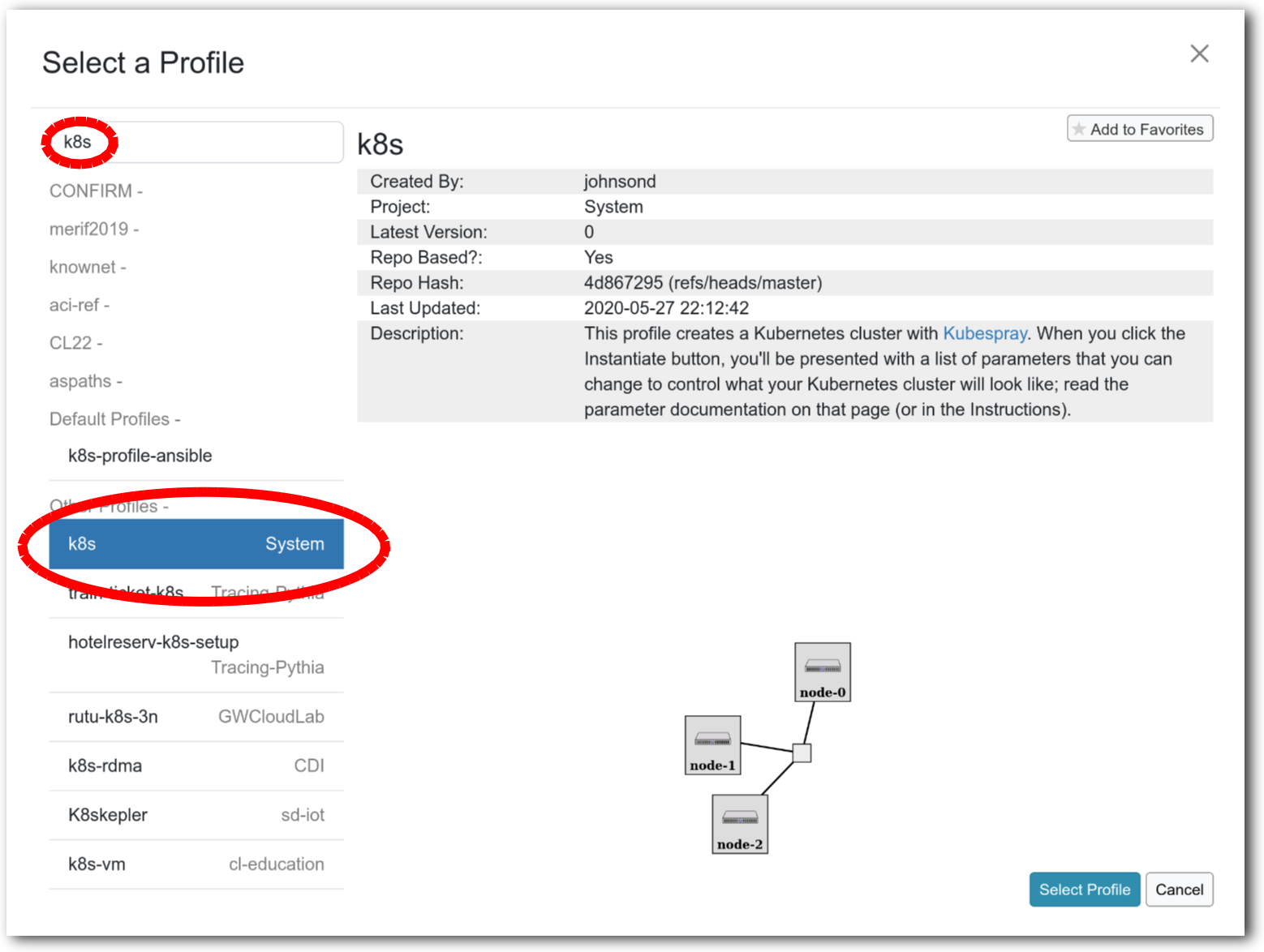

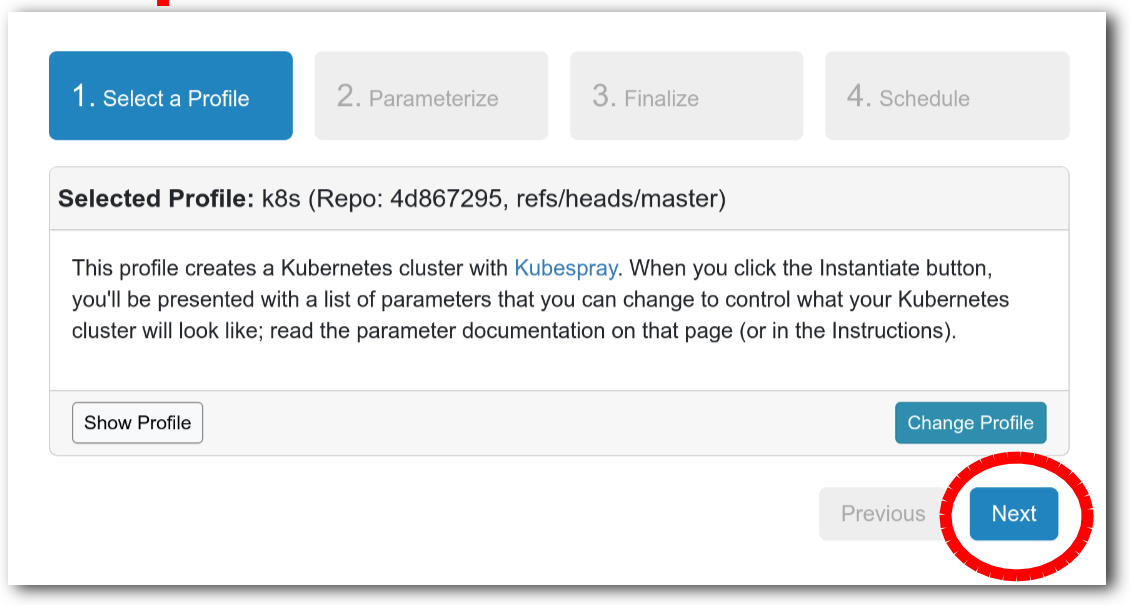

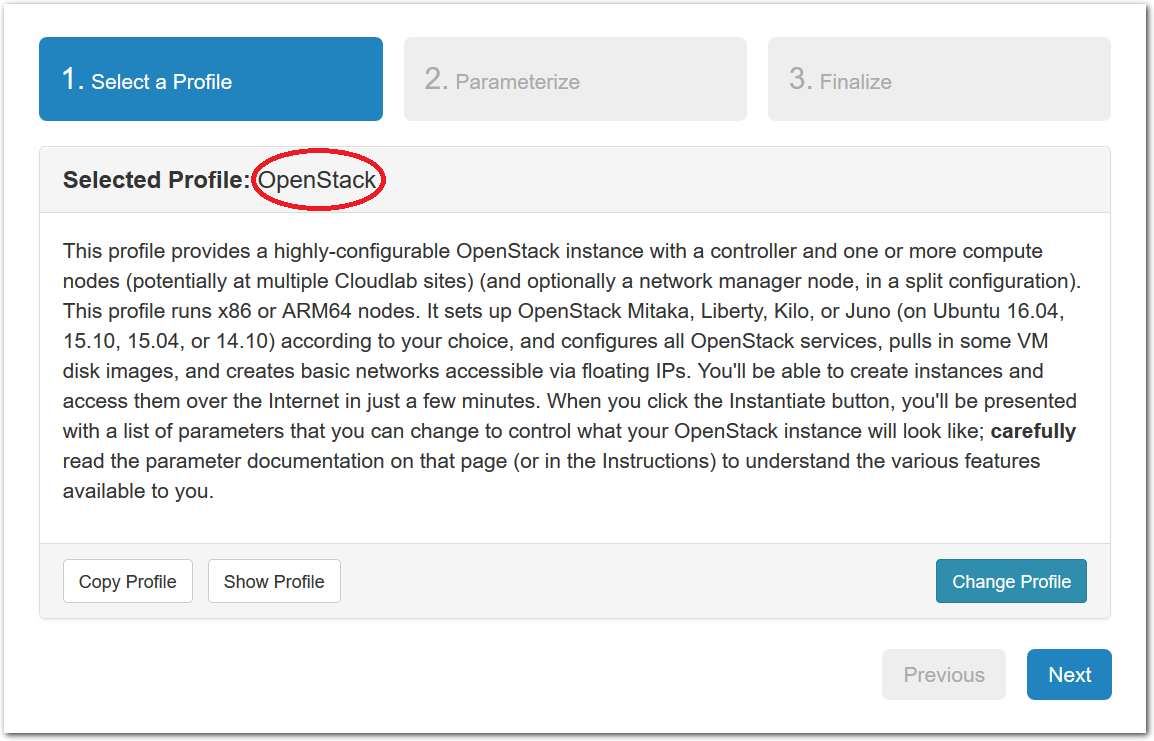

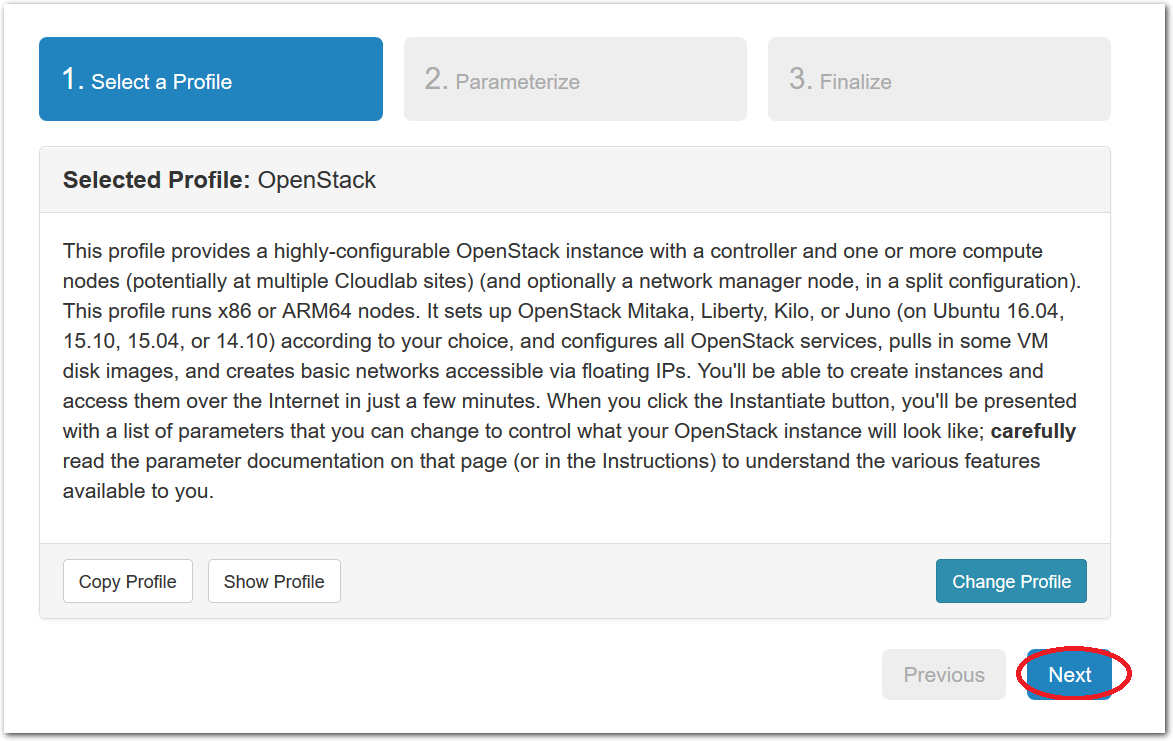

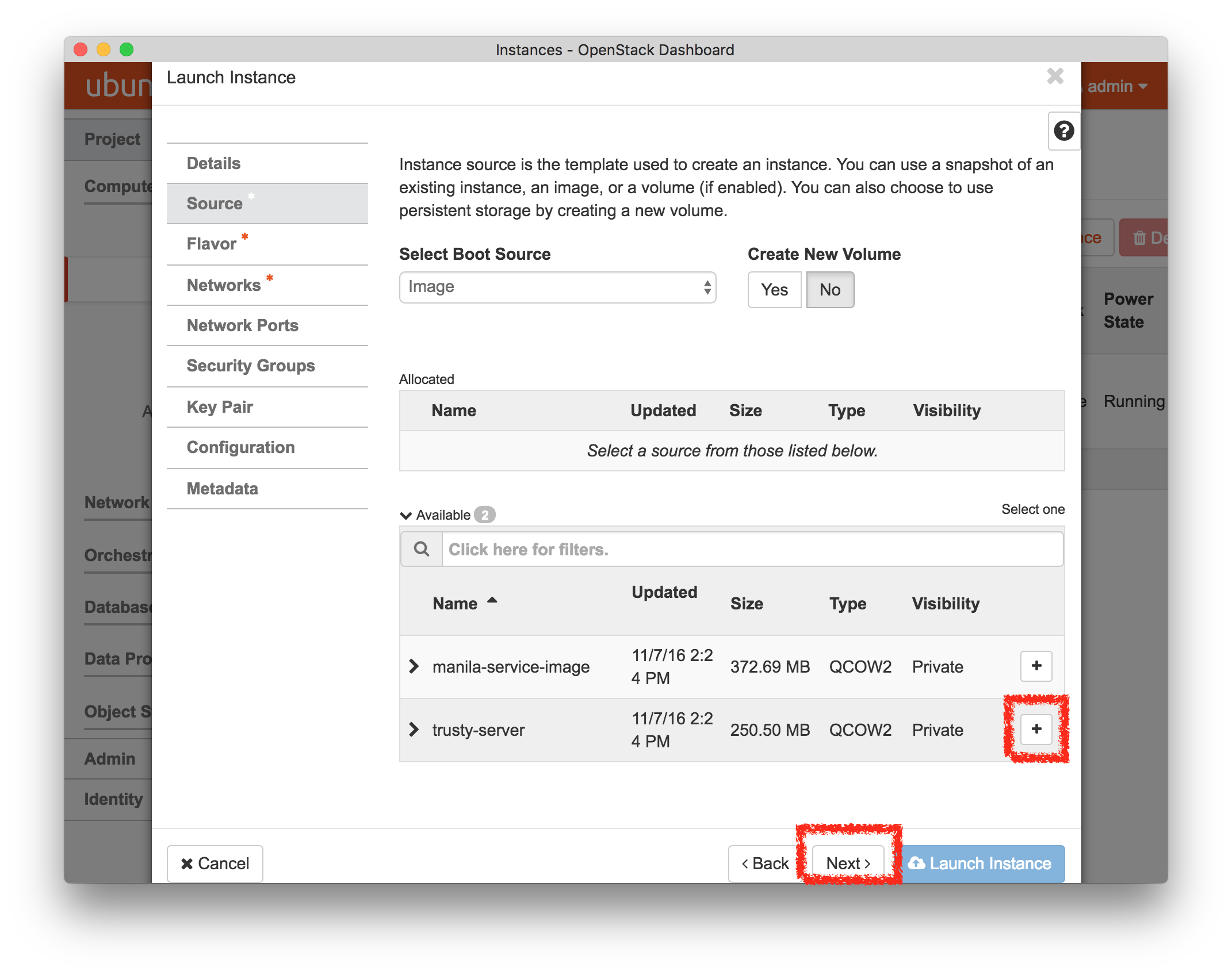

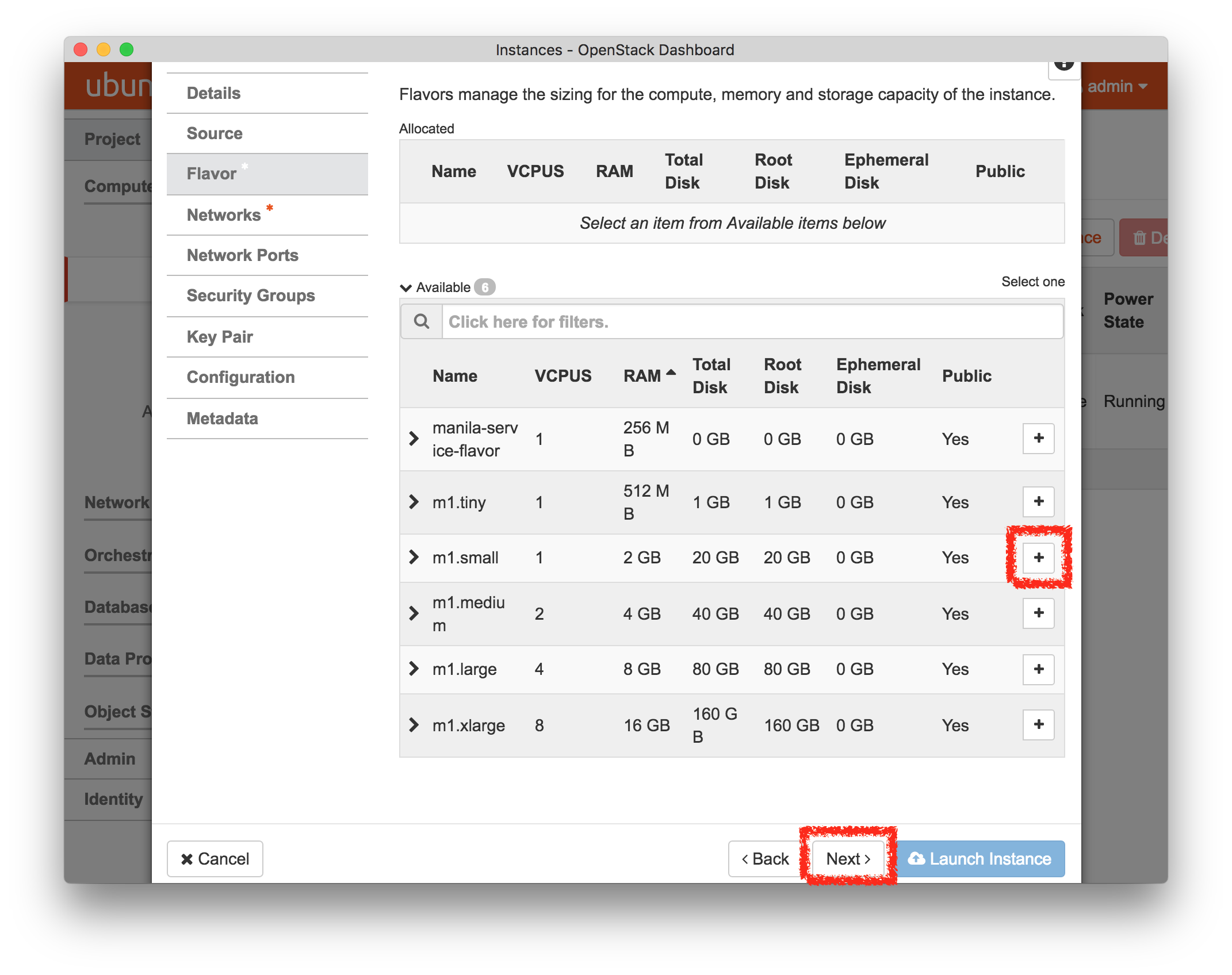

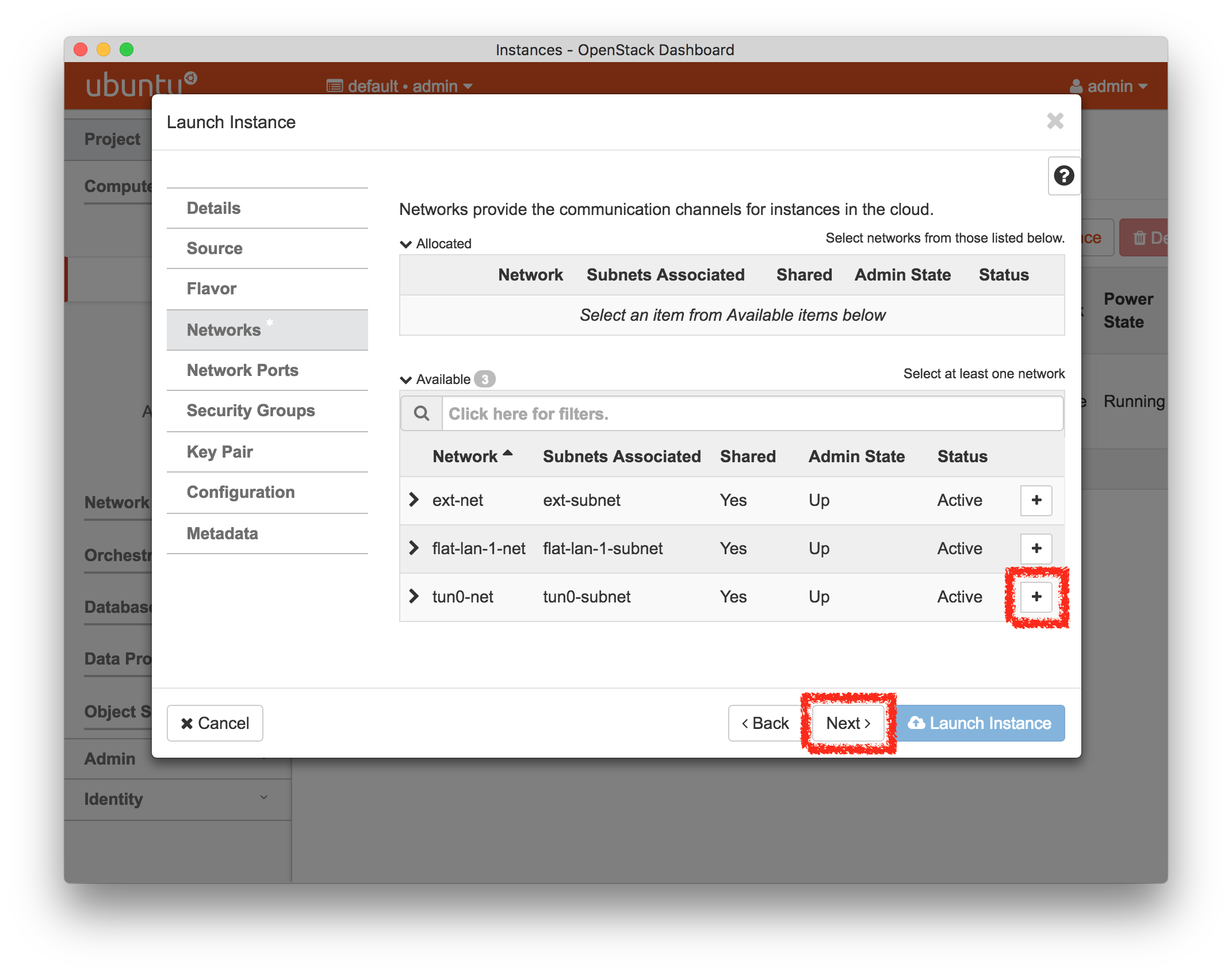

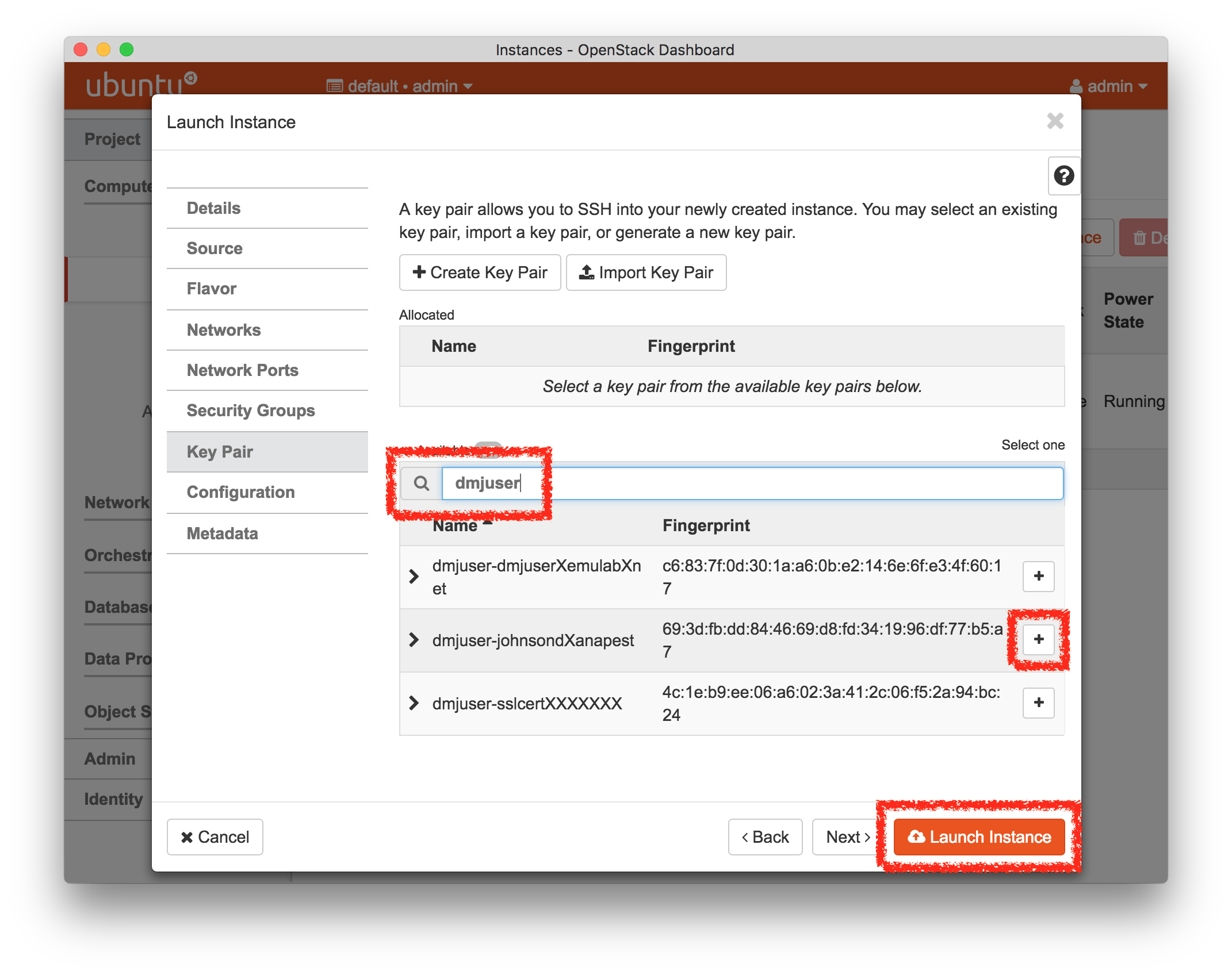

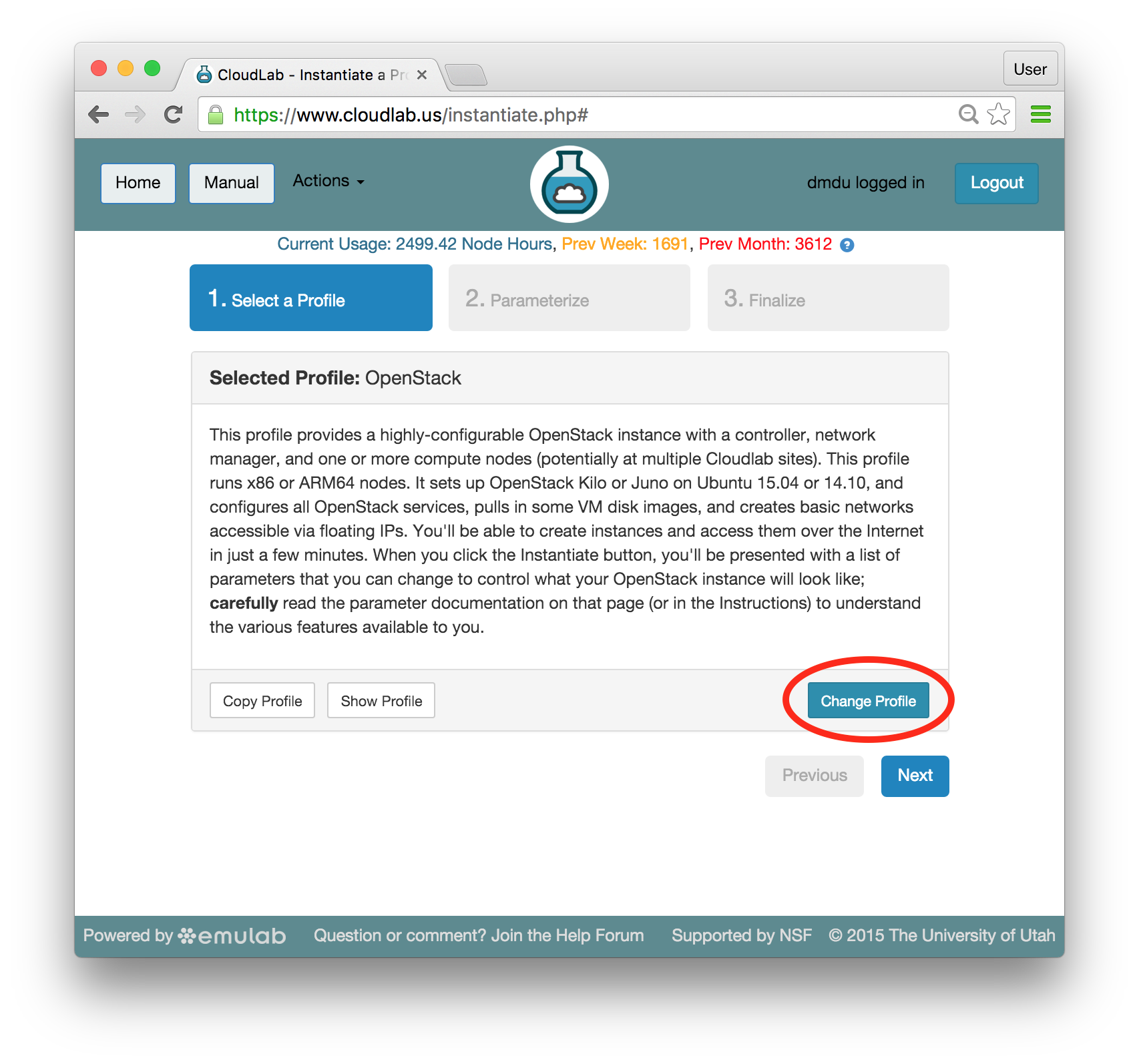

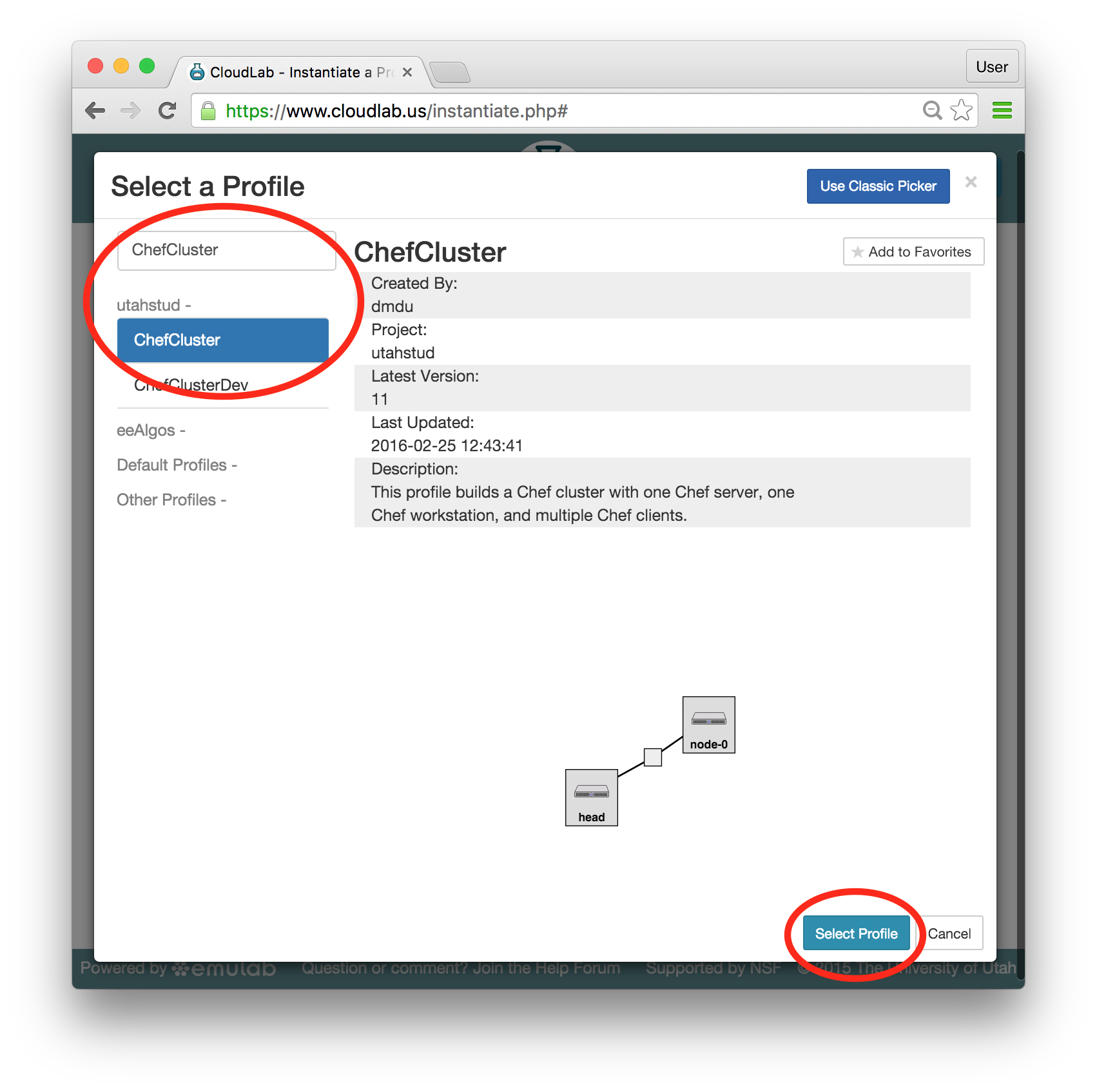

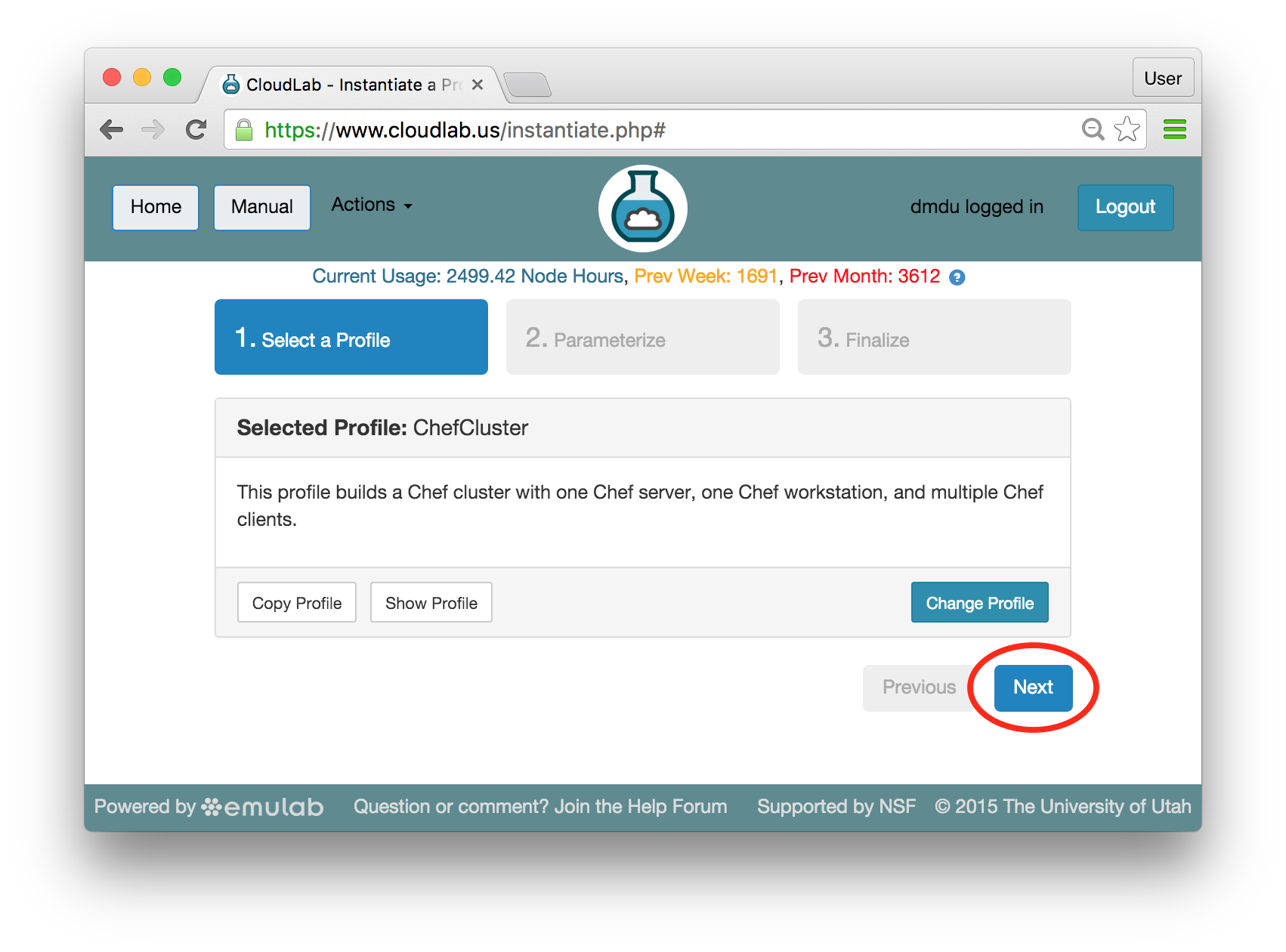

- Experiment WizardExperiments must be configured before they can be instantiated. A short wizard guides you through the process. The first step is to pick a profile for your experiment. A profile describes a set of resources (both hardware and software) that will be used to start your experiment. On the hardware side, the profile will control whether you get virtual machines or physical ones, how many there are, and what the network between them looks like. On the software side, the profile specifies the operating system and installed software.Profiles come from two sources. Some of them are provided by CloudLab itself, and provide standard installation of popular operating systems, software stacks, etc. Others are created by other researchers and may contain research software, artifacts and data used to gather published results, etc. Profiles represent a powerful way to enable repeatable research.Clicking the “Change Profile” button will let you select the profile that your experiment will be built from.

- Select a profileOn the left side is the profile selector which lists the profiles you can choose. The list contains both globally accessible profiles and profiles accessible to the projects you are part of.The large display in this dialog box shows the network topology of the profile, and a short description sits below the topology view.The OpenStack profile will give you a small OpenStack installation with one master node and one compute node. It provides a simple example of how complex software stacks can be packaged up within CloudLab. If you’d prefer to start from bare metal, look for one of the profiles that installs a stock operating system on physical machines.

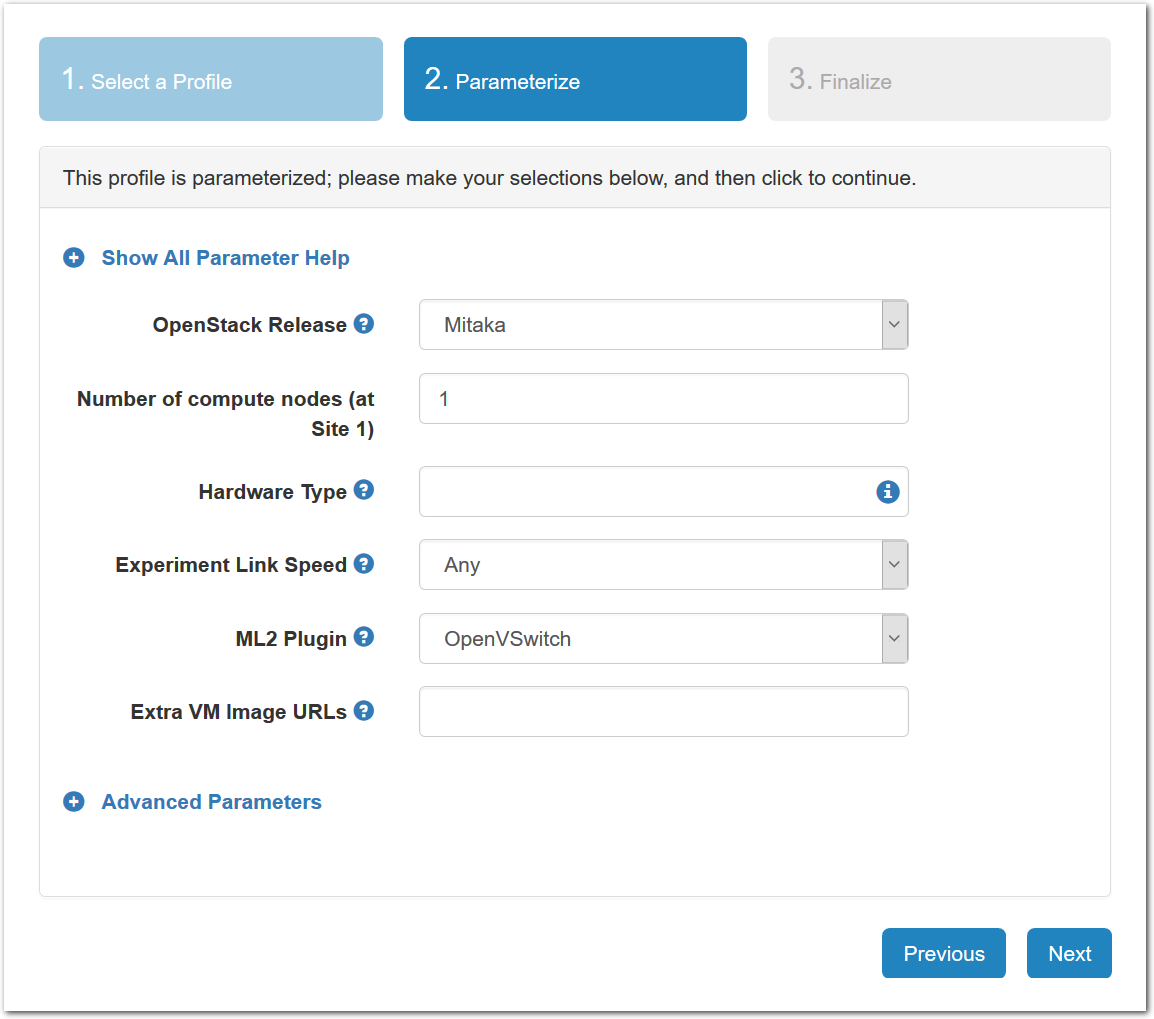

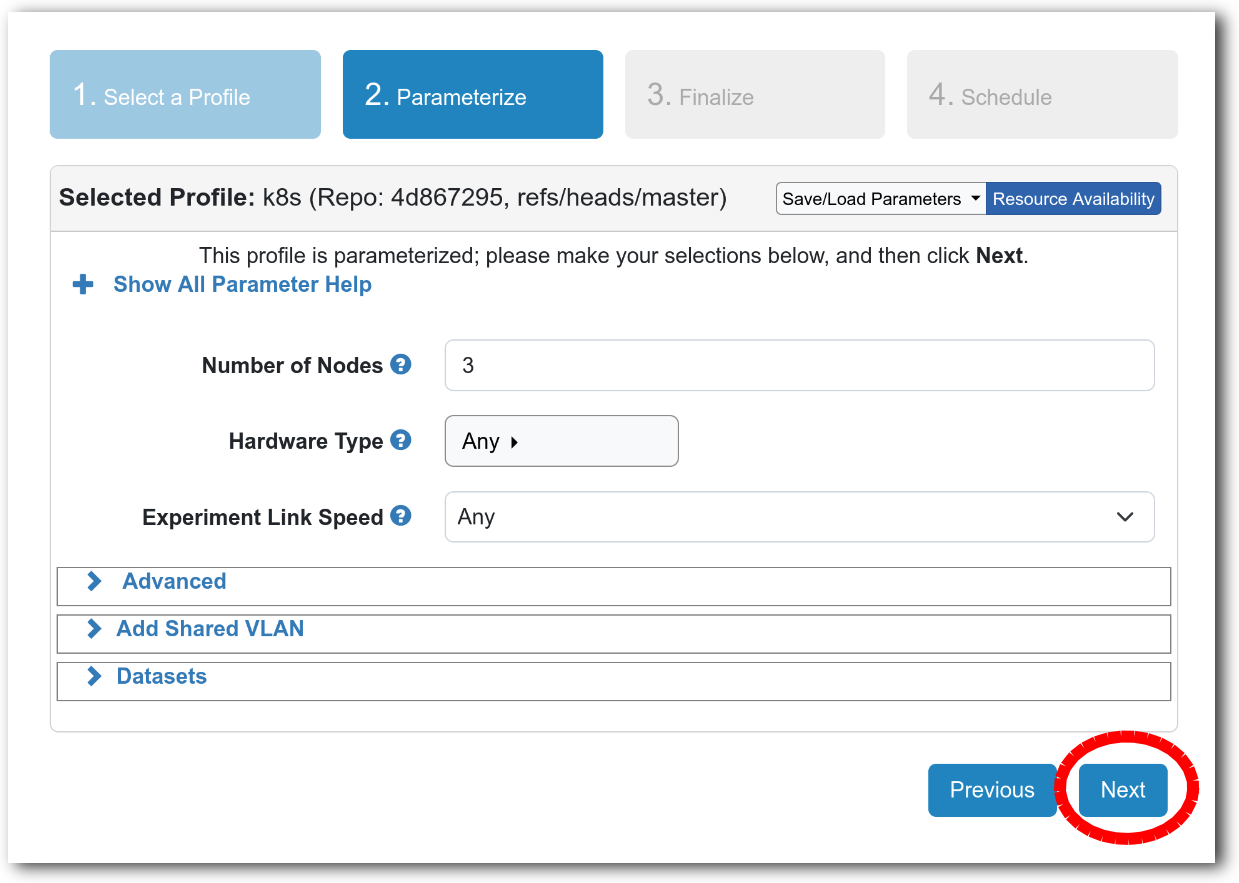

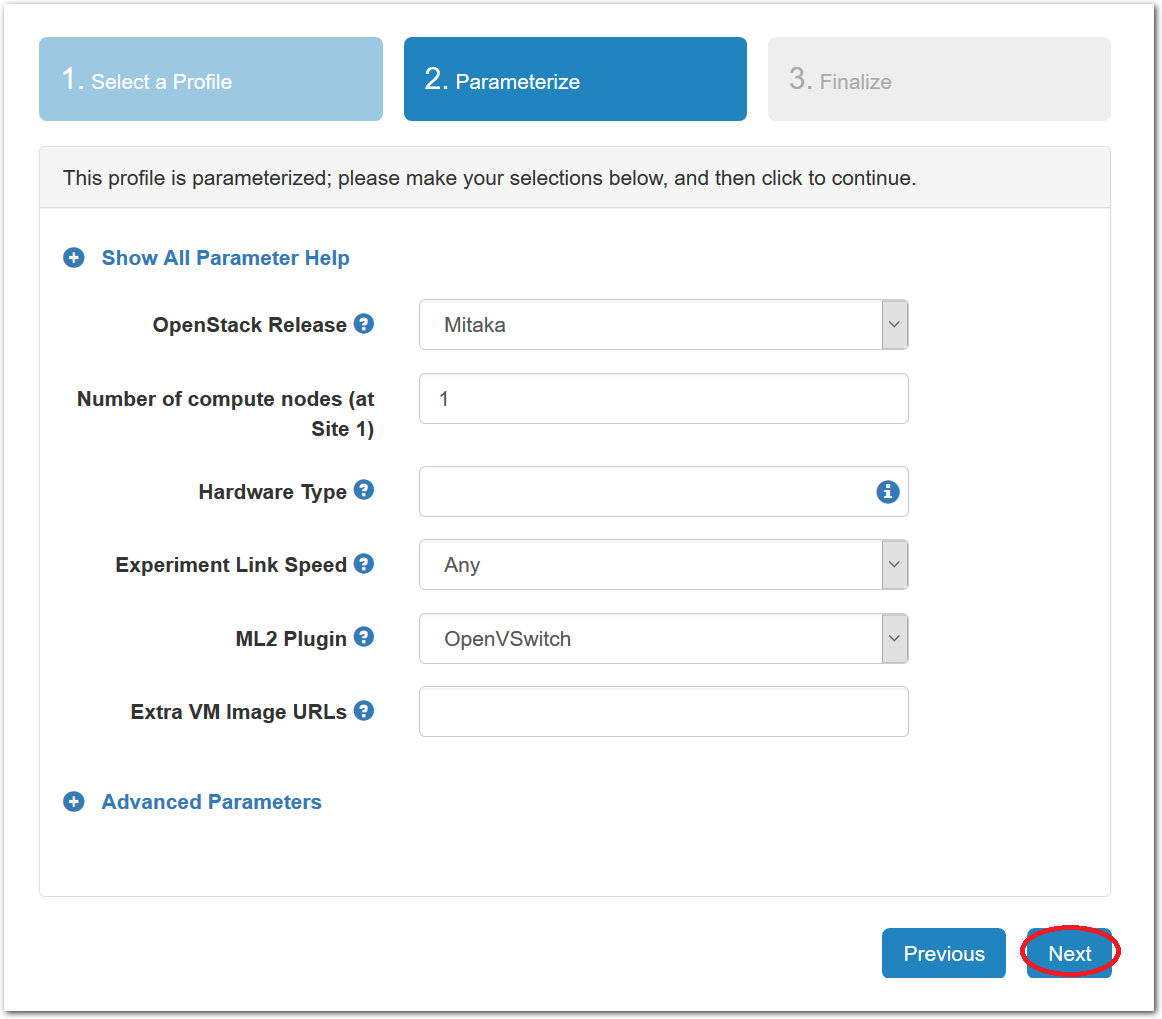

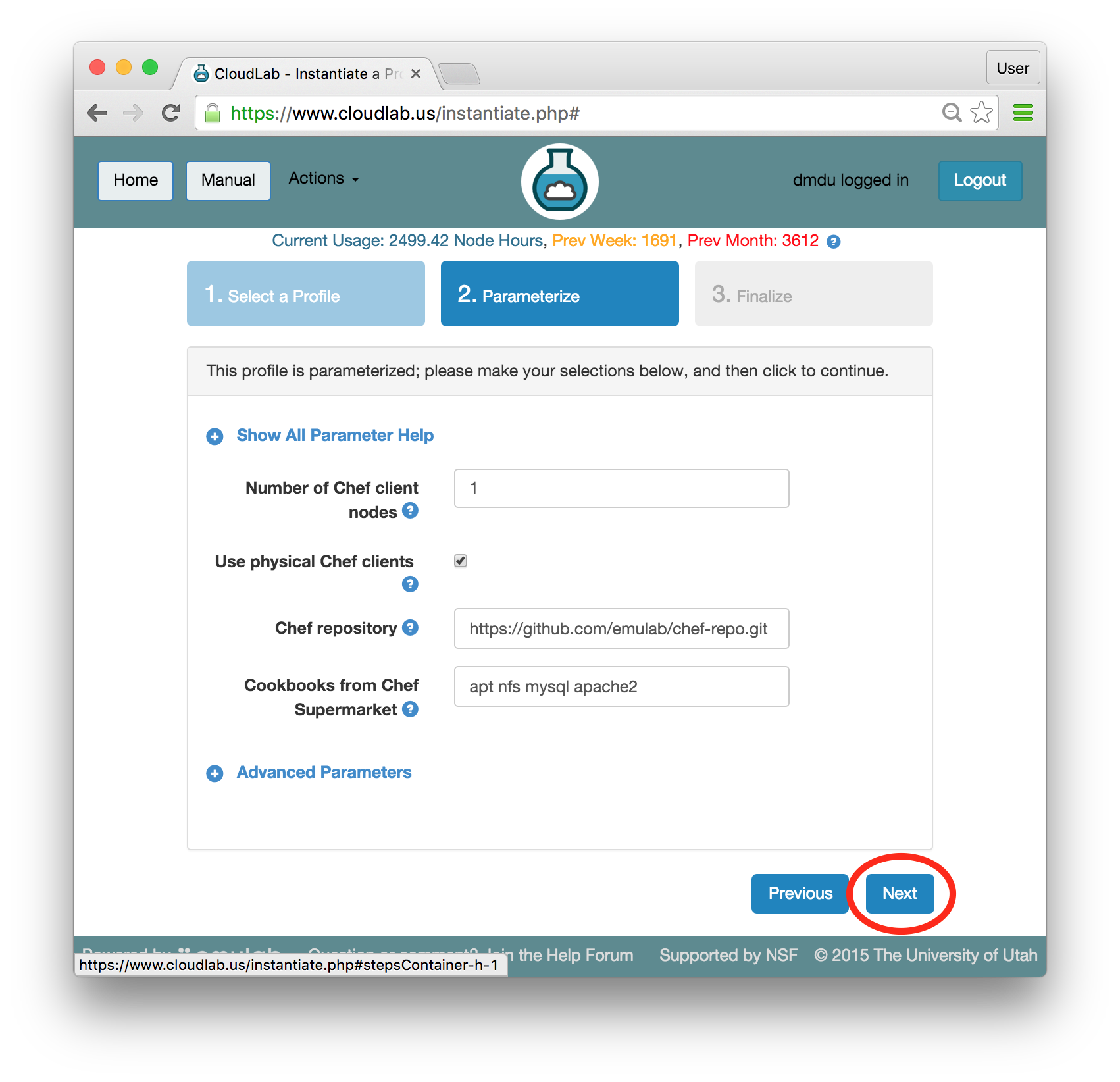

- Choose ParametersSome profiles are simple and provide the same topology every time they are instantiated. But others, like the OpenStack profile, are parameterized and allow users to make choices about how they are instantiated. The OpenStack profile allows you to pick the number of compute nodes, the hardware to use, and many more options. The creator of the profile chooses which options to allow and provides information on what those options mean. Just mouse over a blue ’?’ to see a description of an option. For now, stick with the default options and click “Next” to continue.

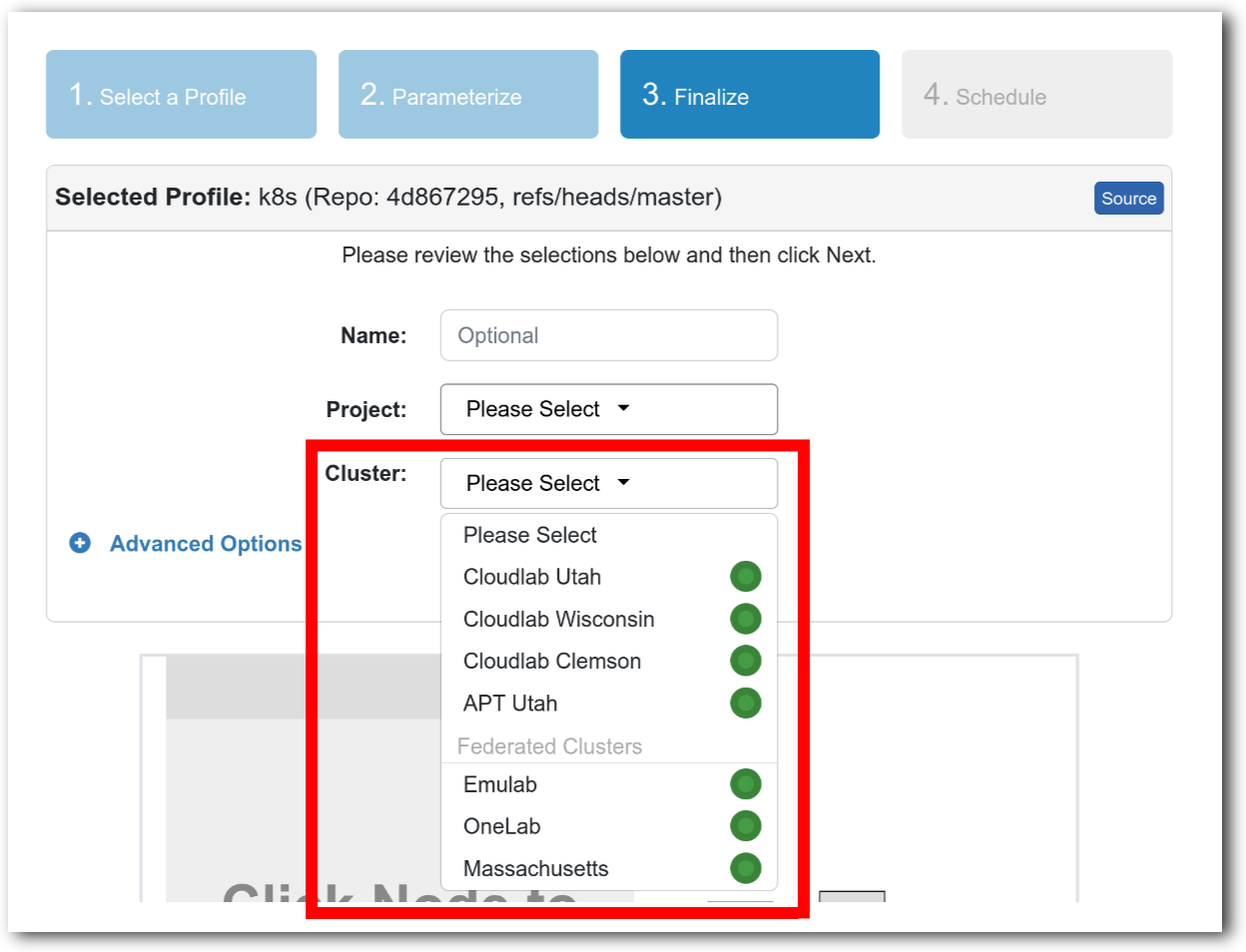

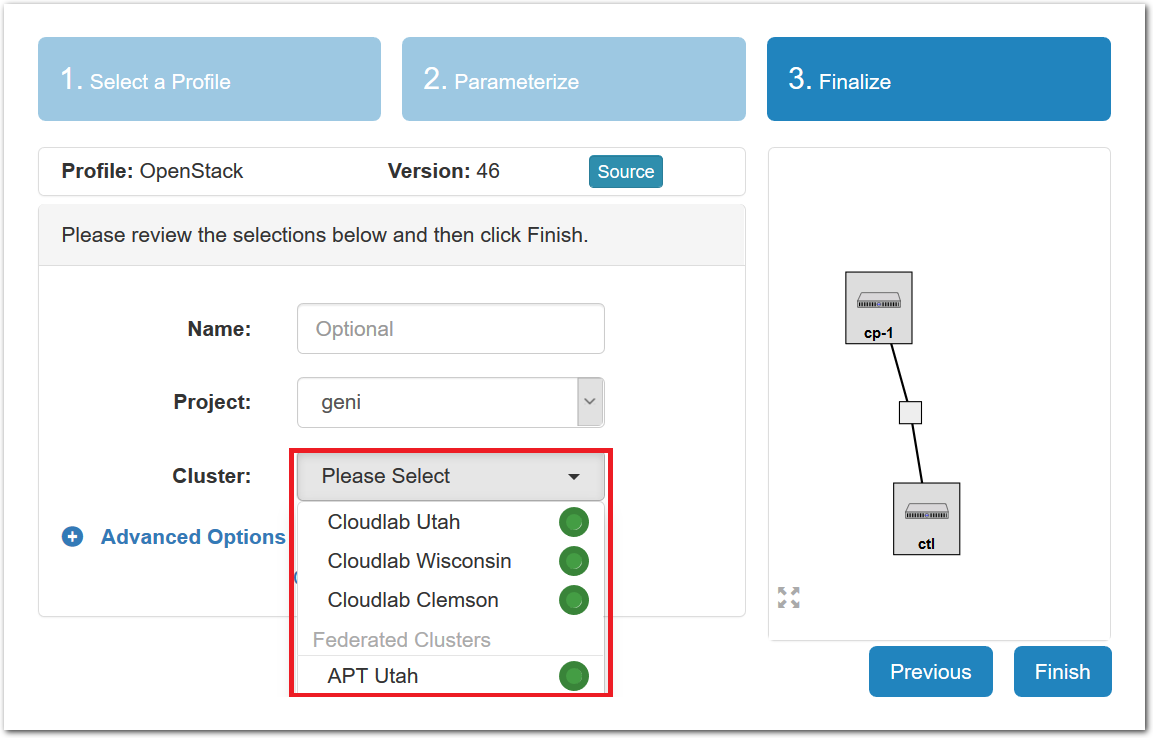

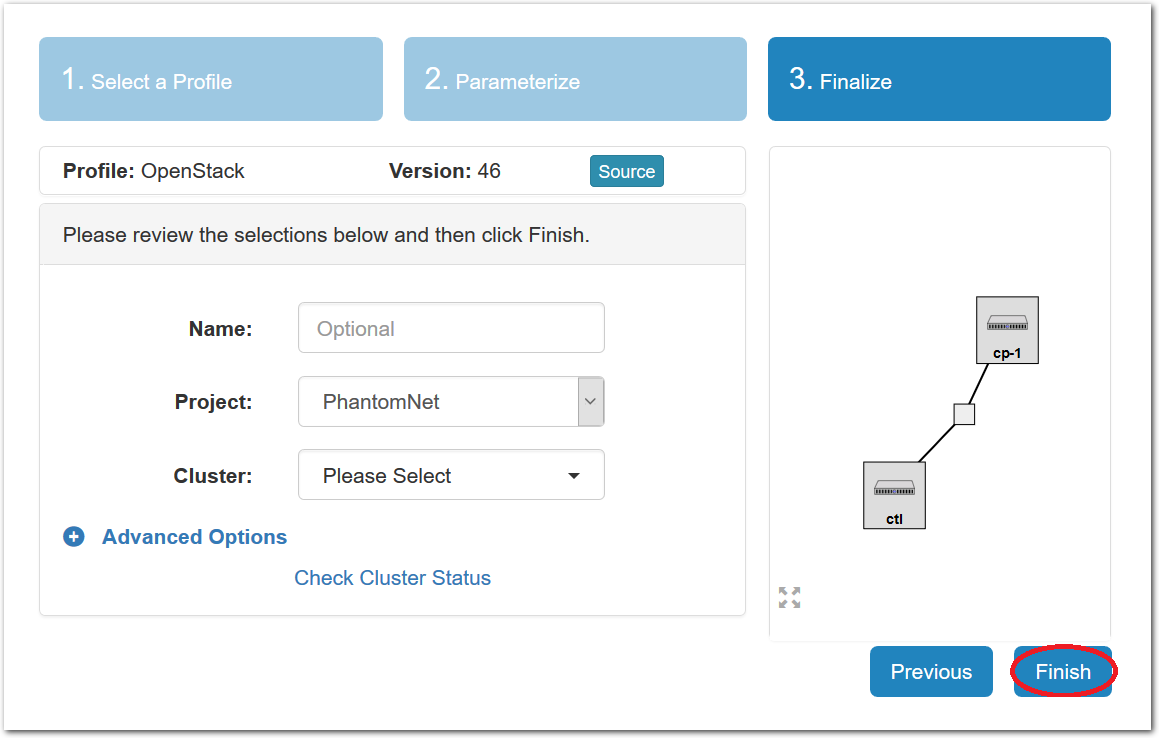

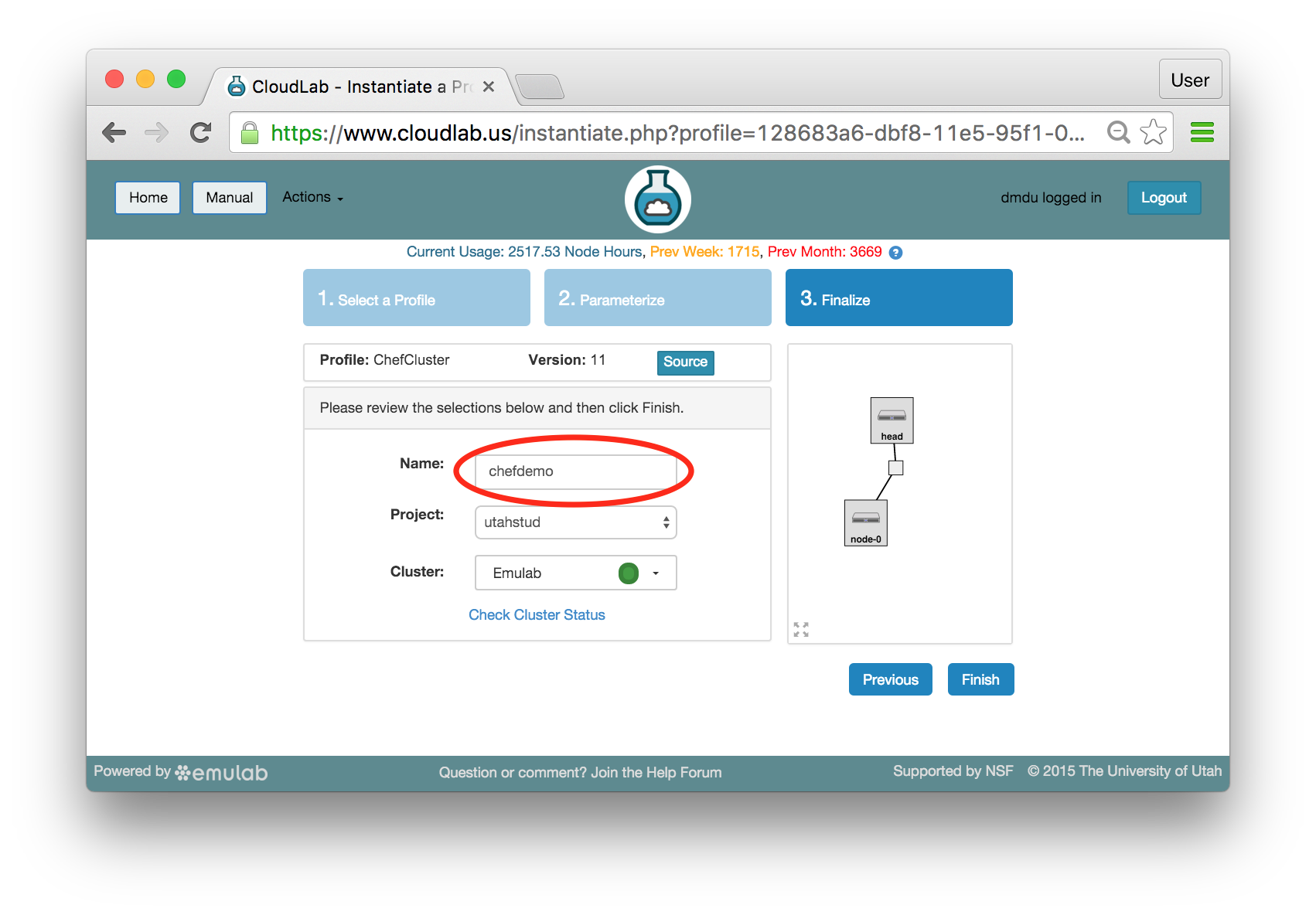

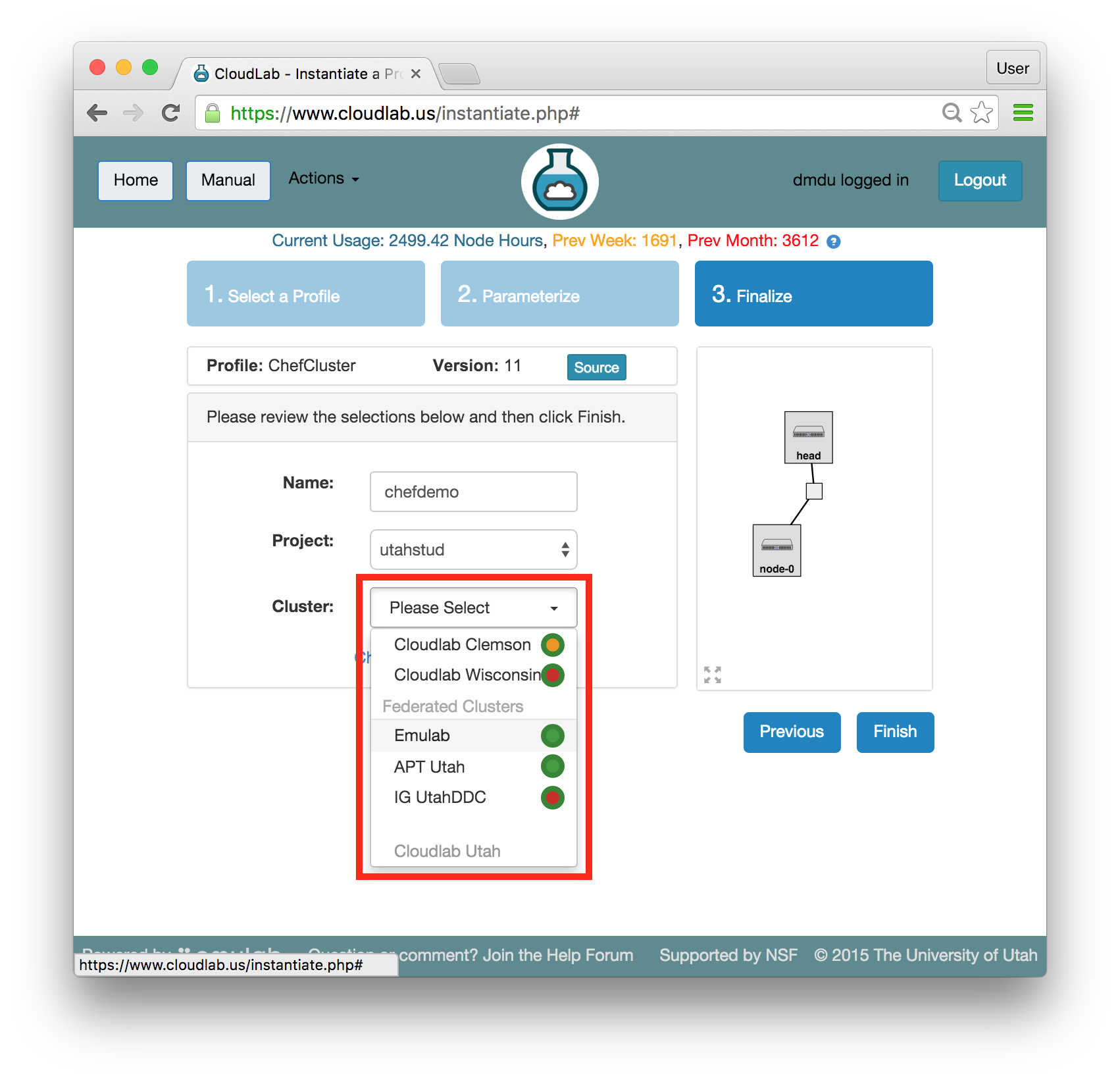

- Pick a clusterCloudLab can instantiate profiles on several different backend clusters. The cluster selector is located right above the “Create” button; the the cluster most suited to the profile you’ve chosen will be selected by default.

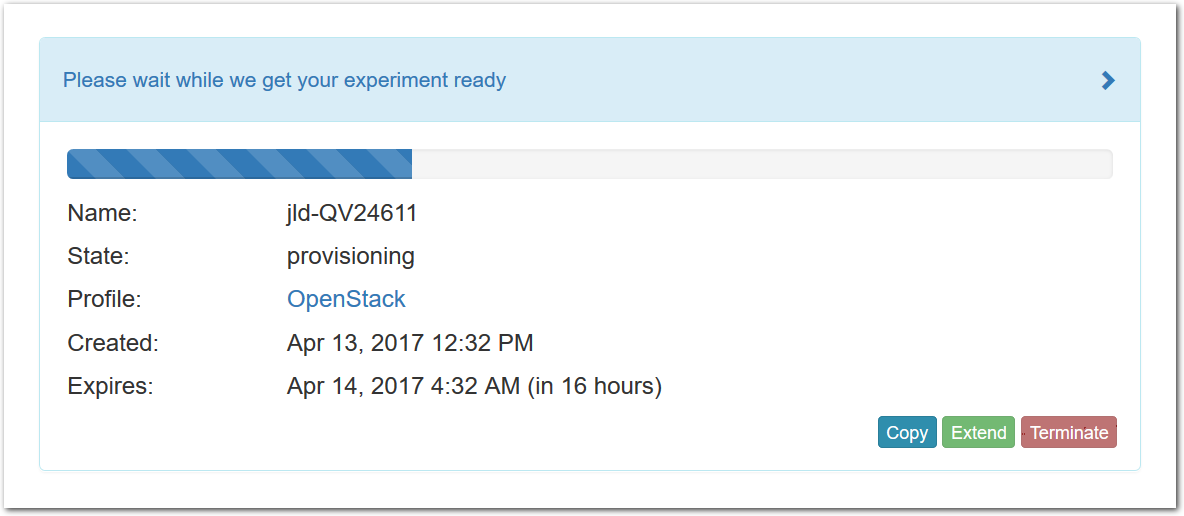

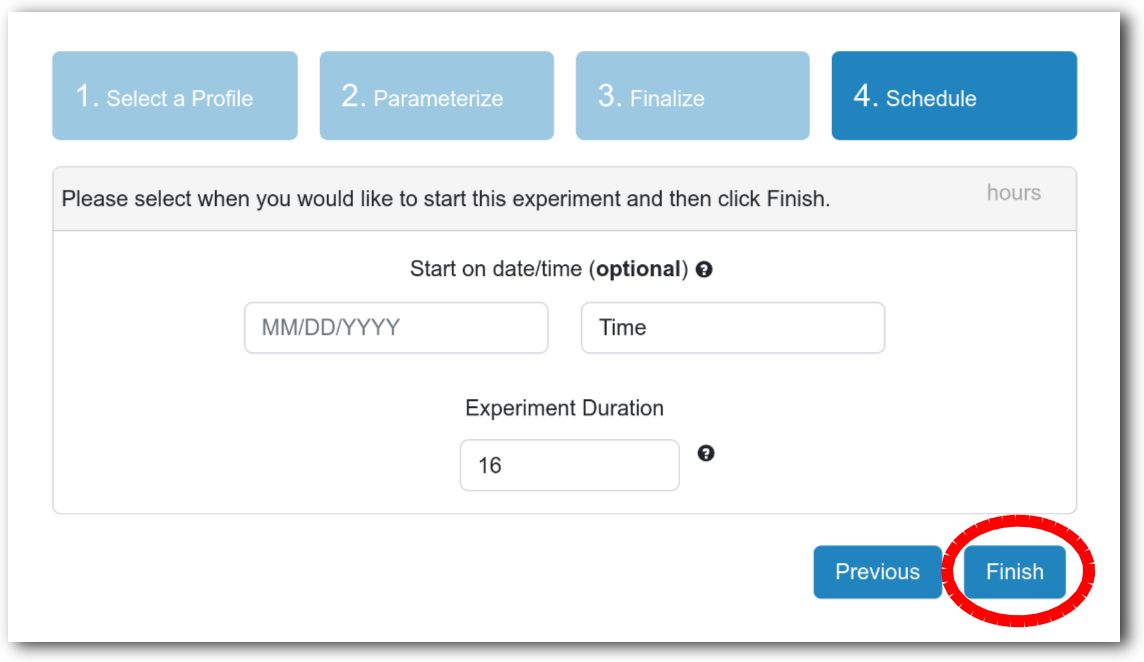

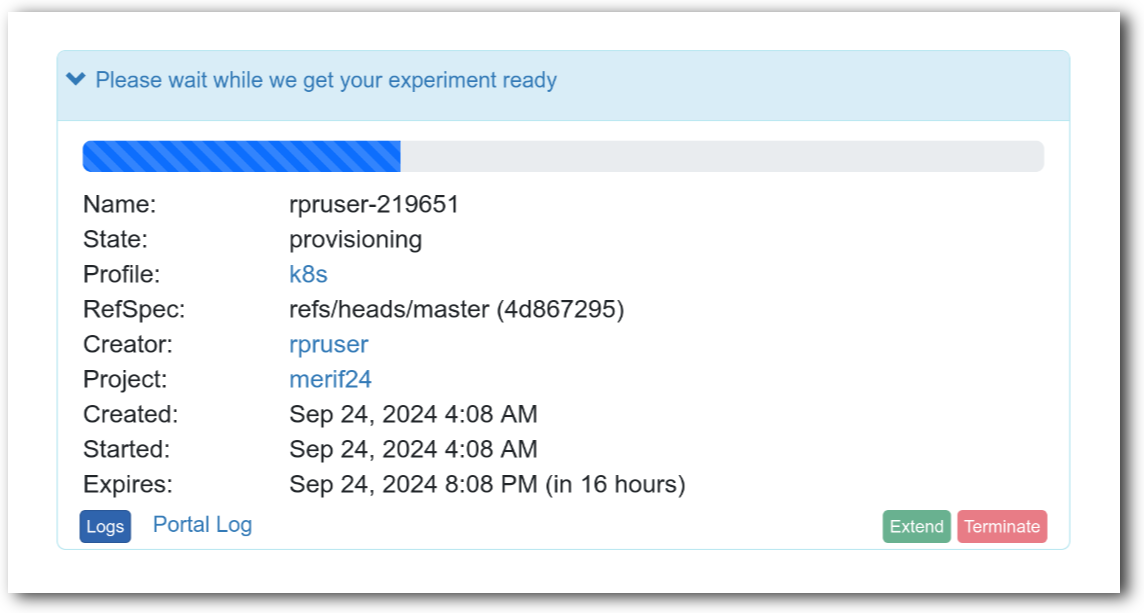

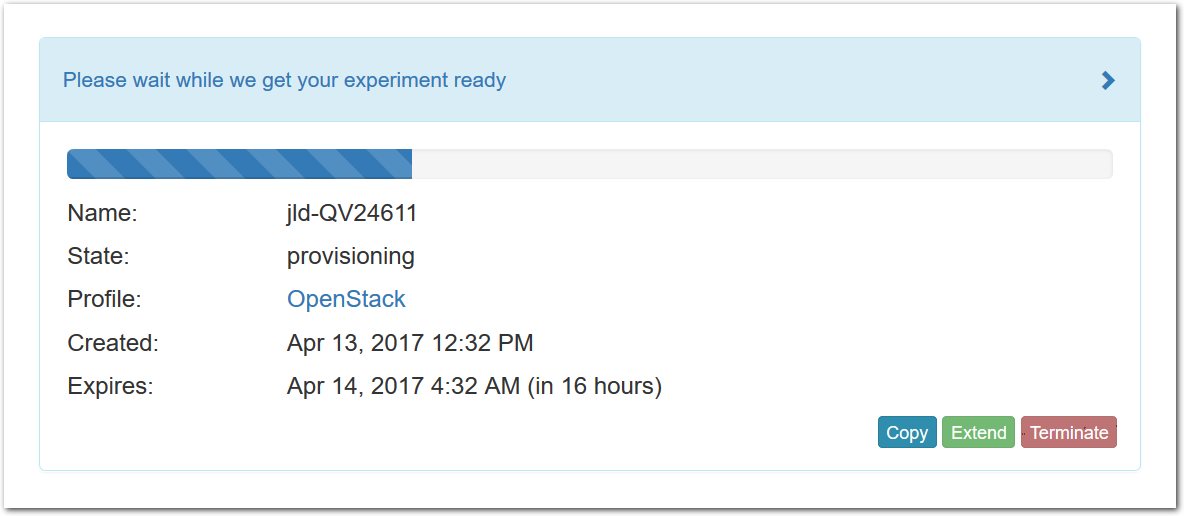

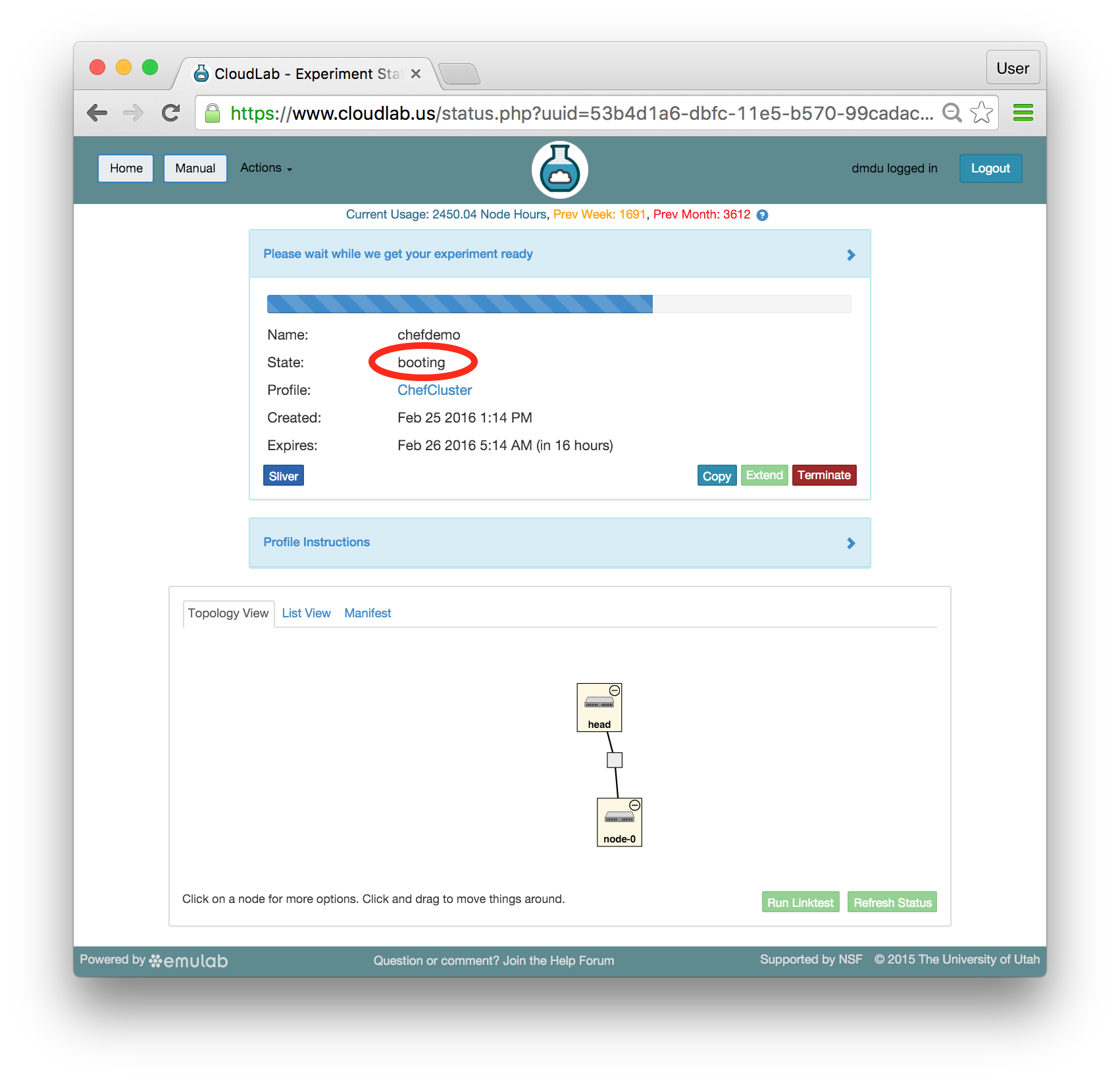

- Click Create!When you click the “Create” button, CloudLab will start preparing your experiment by selecting nodes, installing software, etc. as described in the profile. What’s going on behind the scenes is that on one (or more) of the machines in one of the CloudLab clusters, a disk is being imaged, VMs and/or physical machines booted, accounts created for you, etc. This process usually takes a couple of minutes.

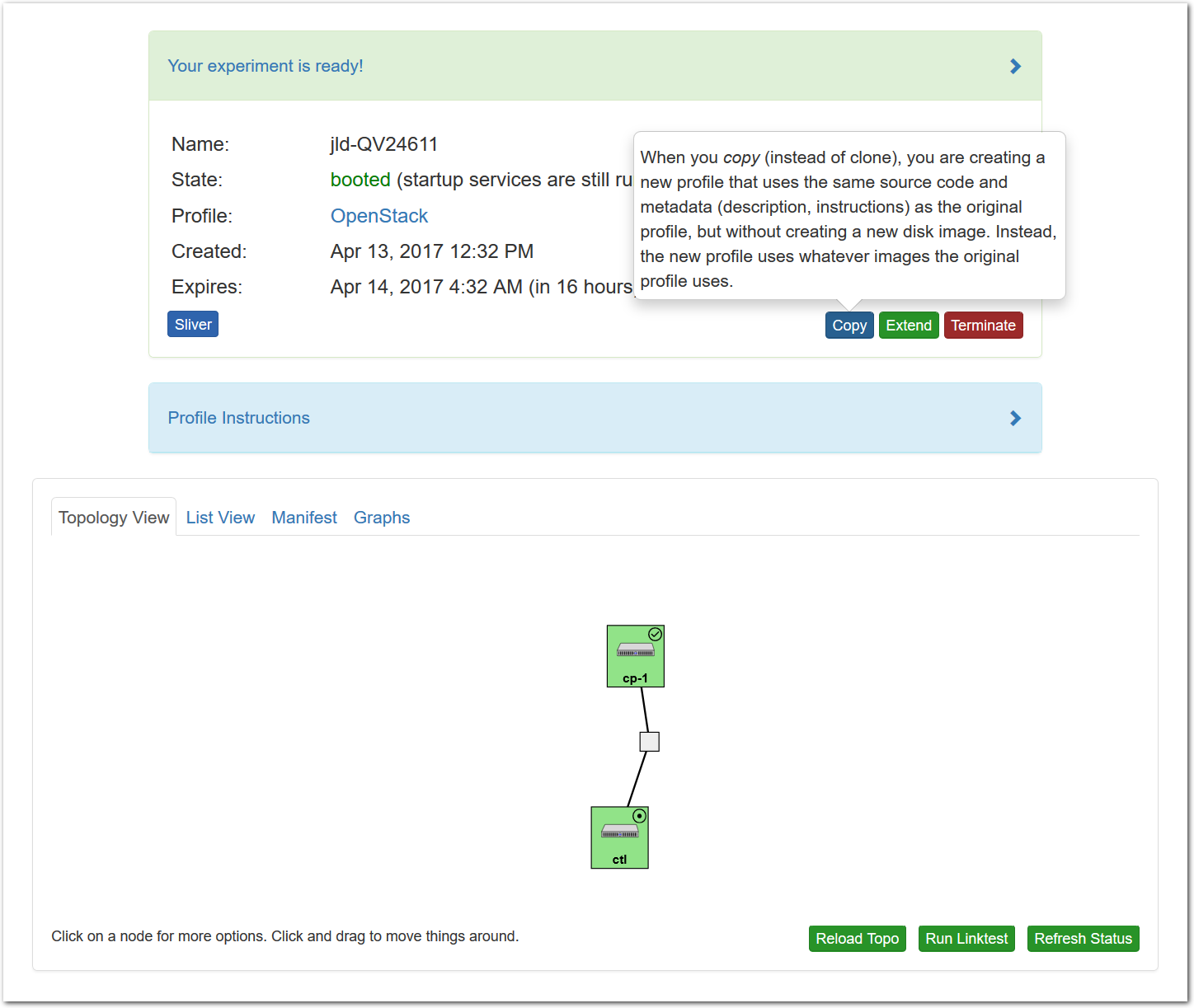

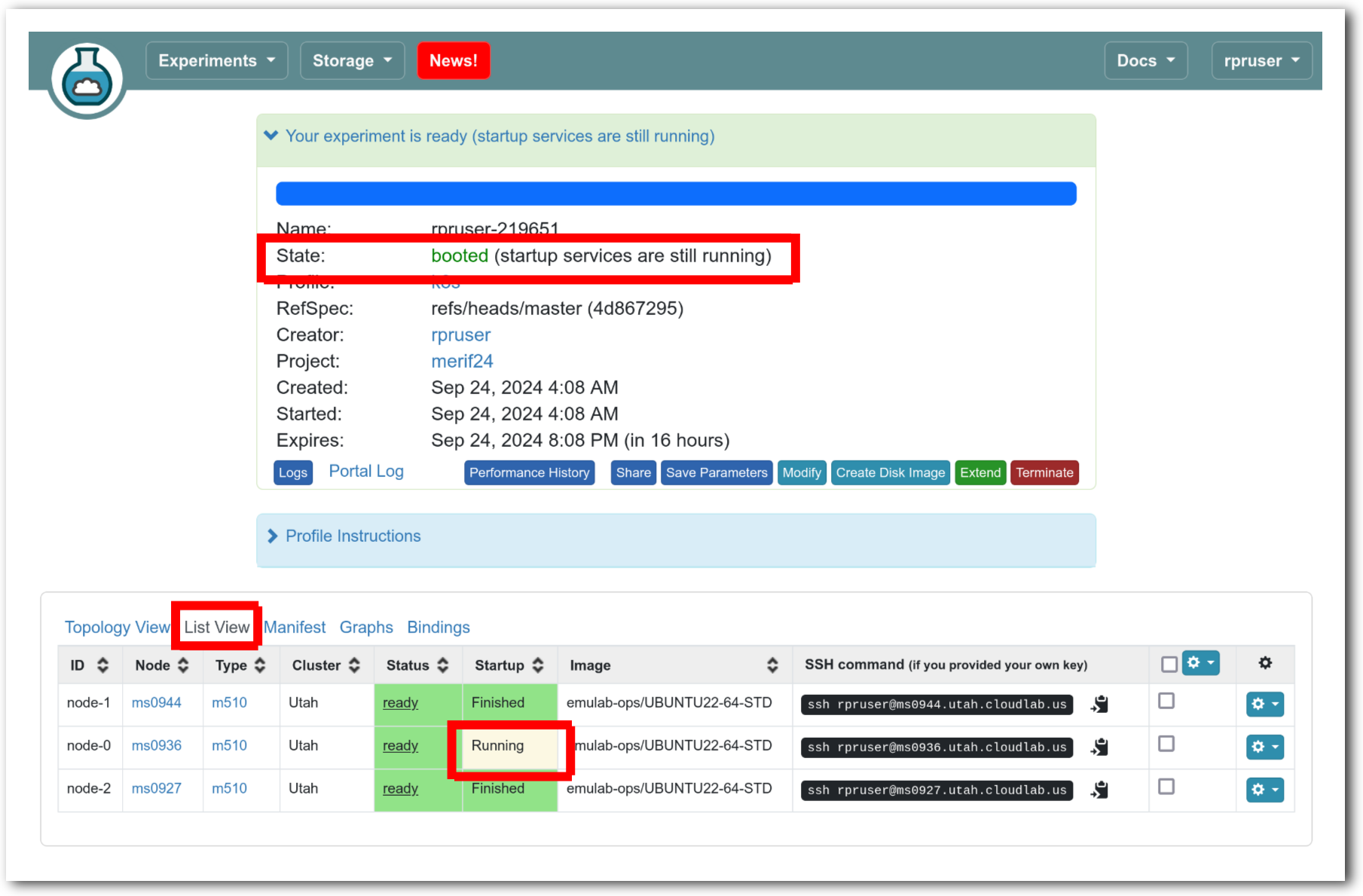

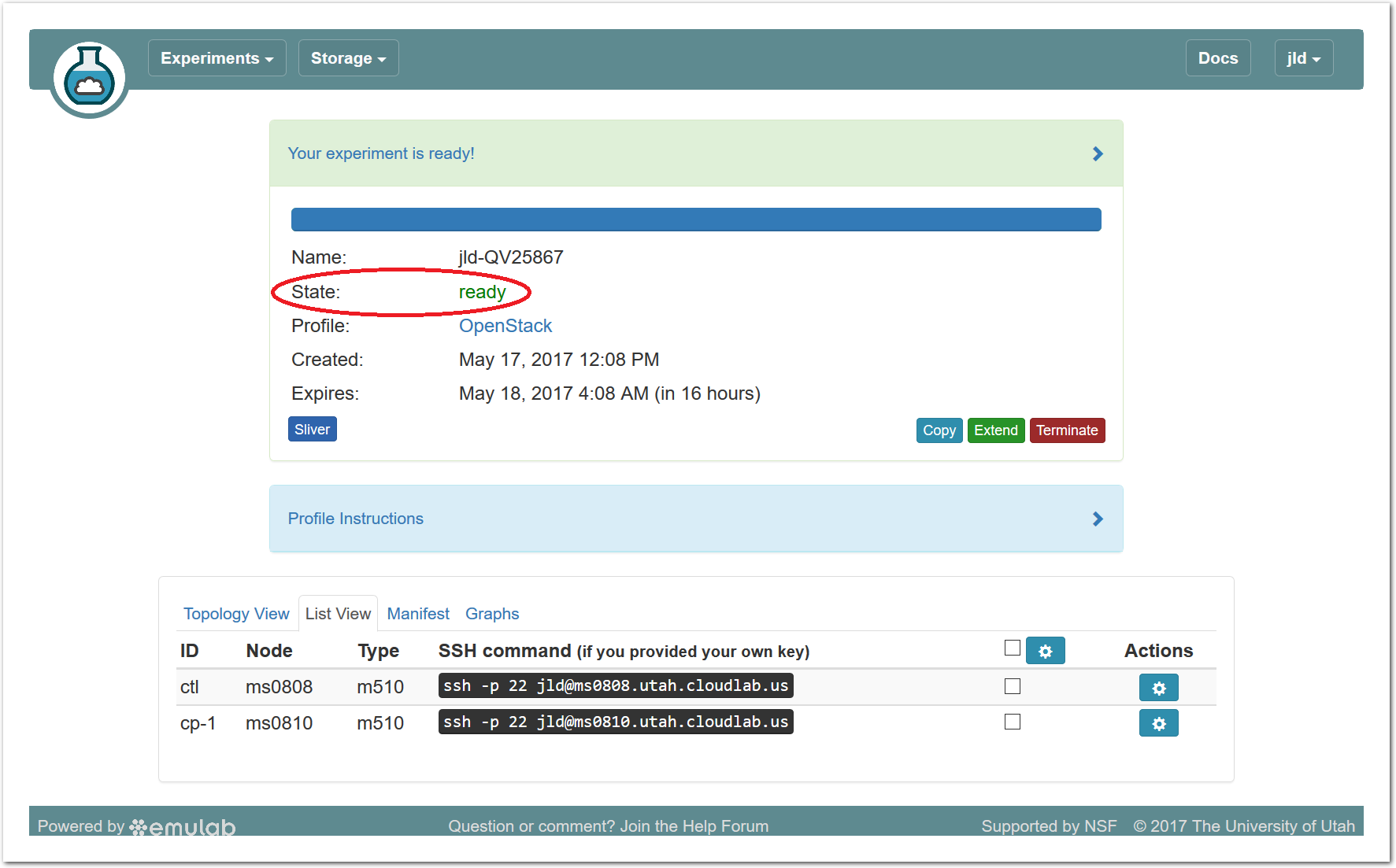

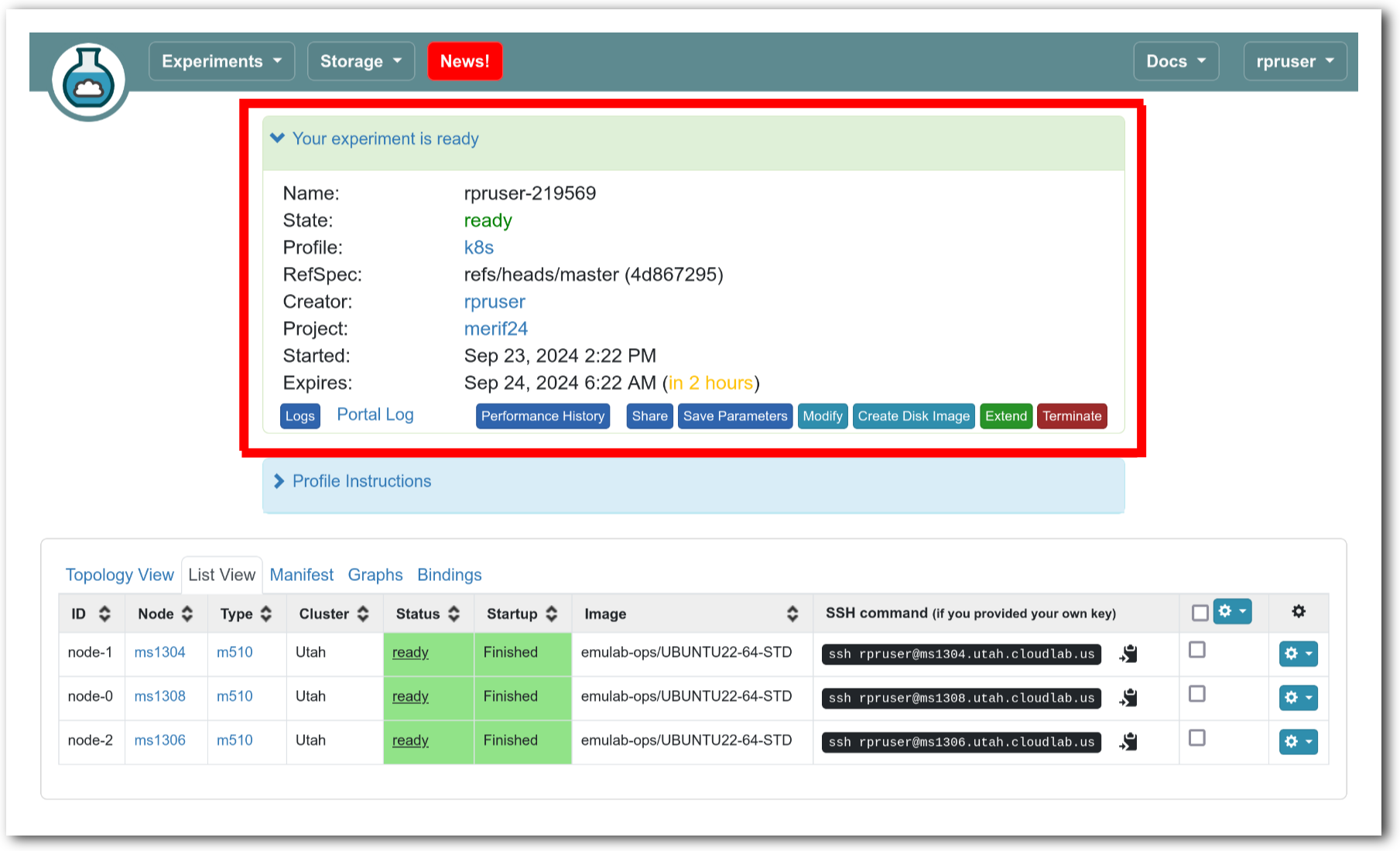

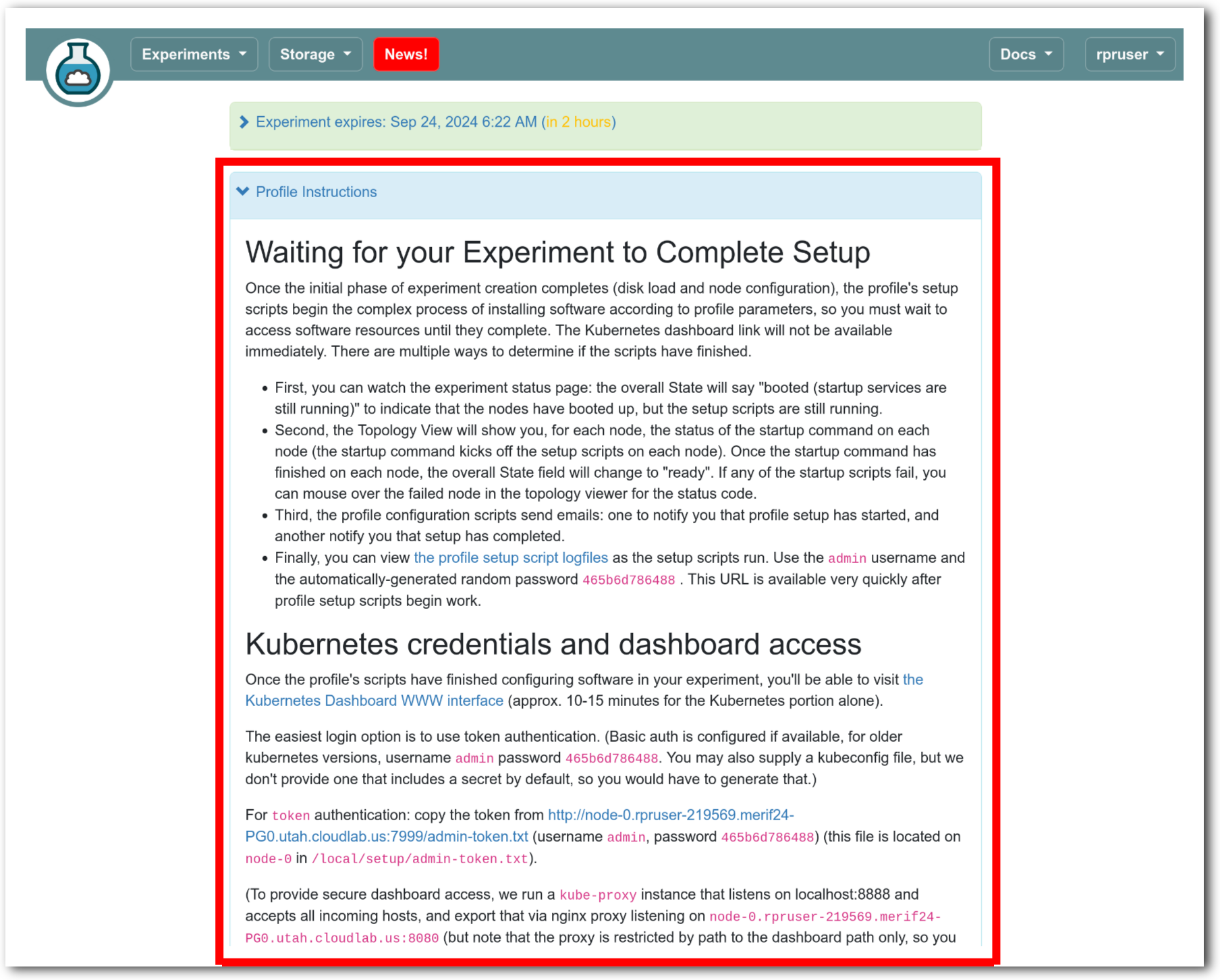

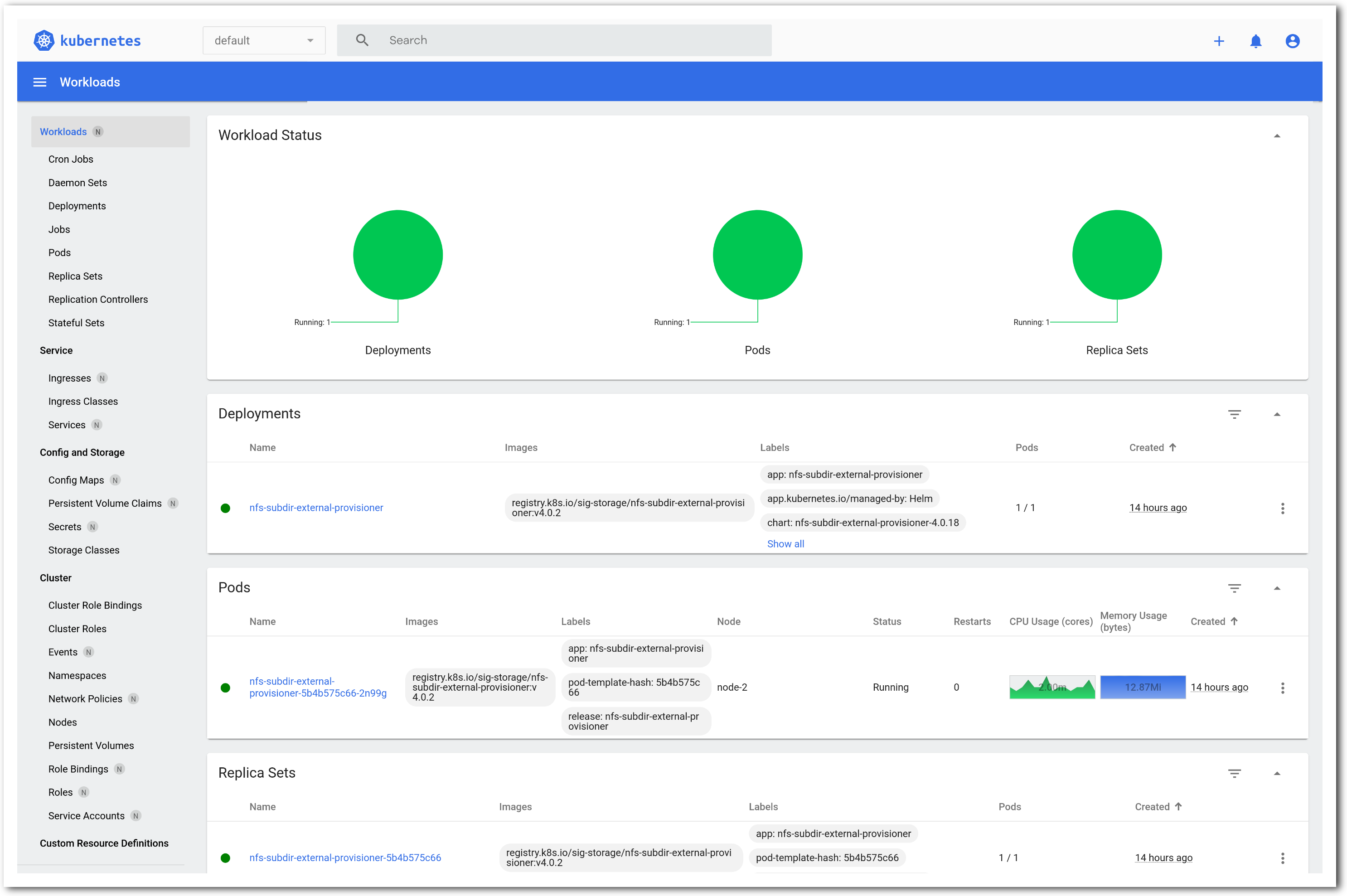

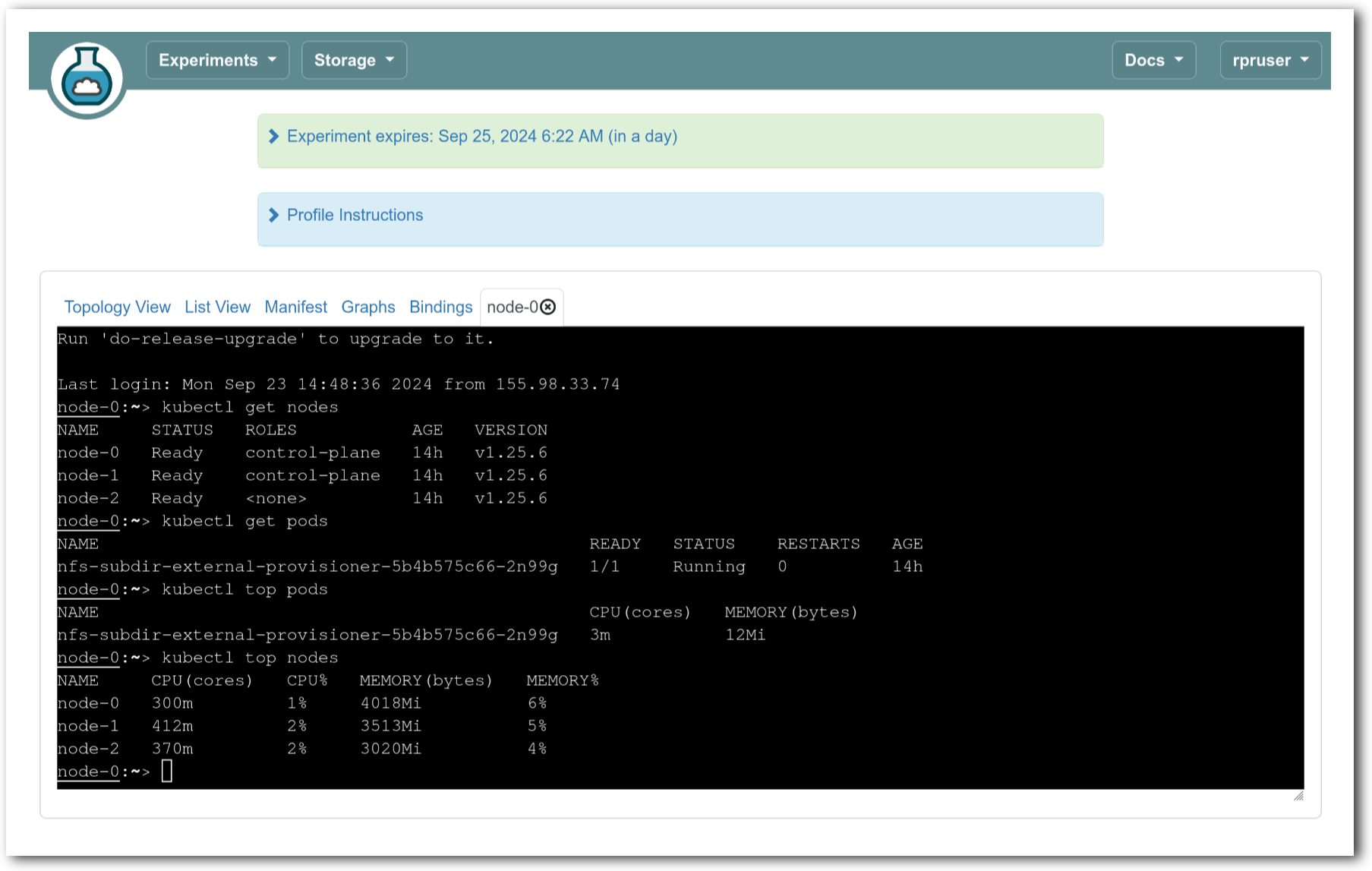

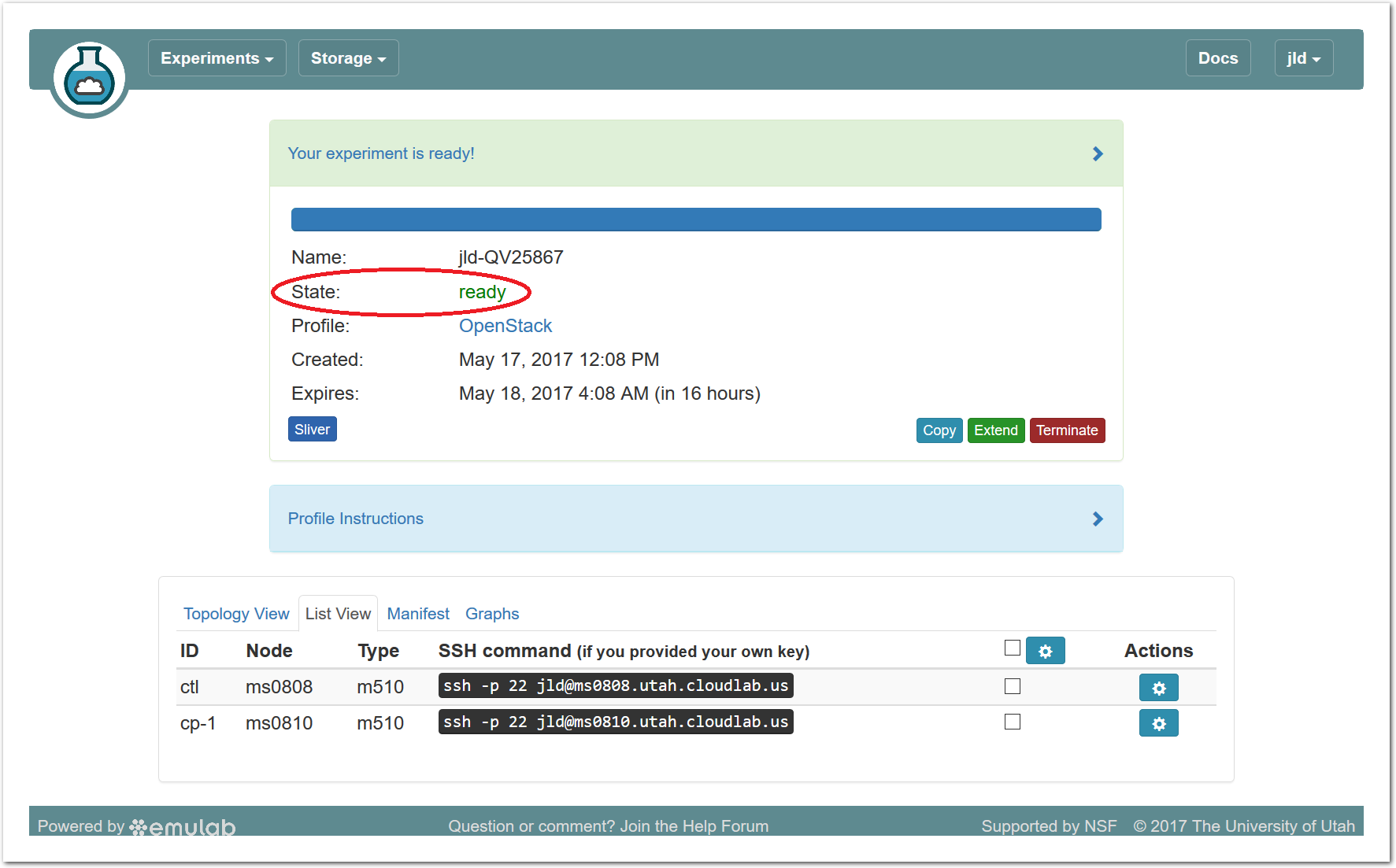

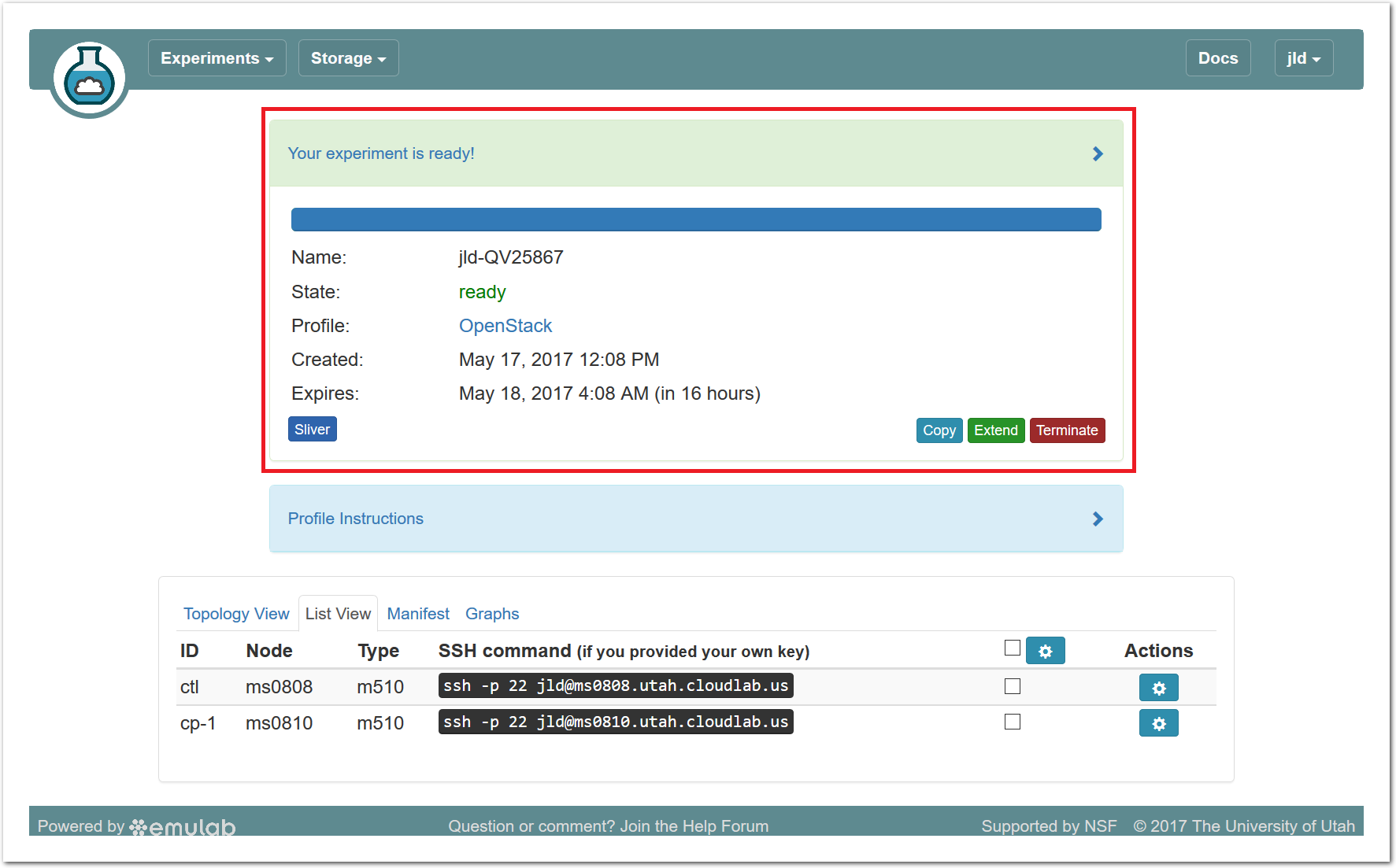

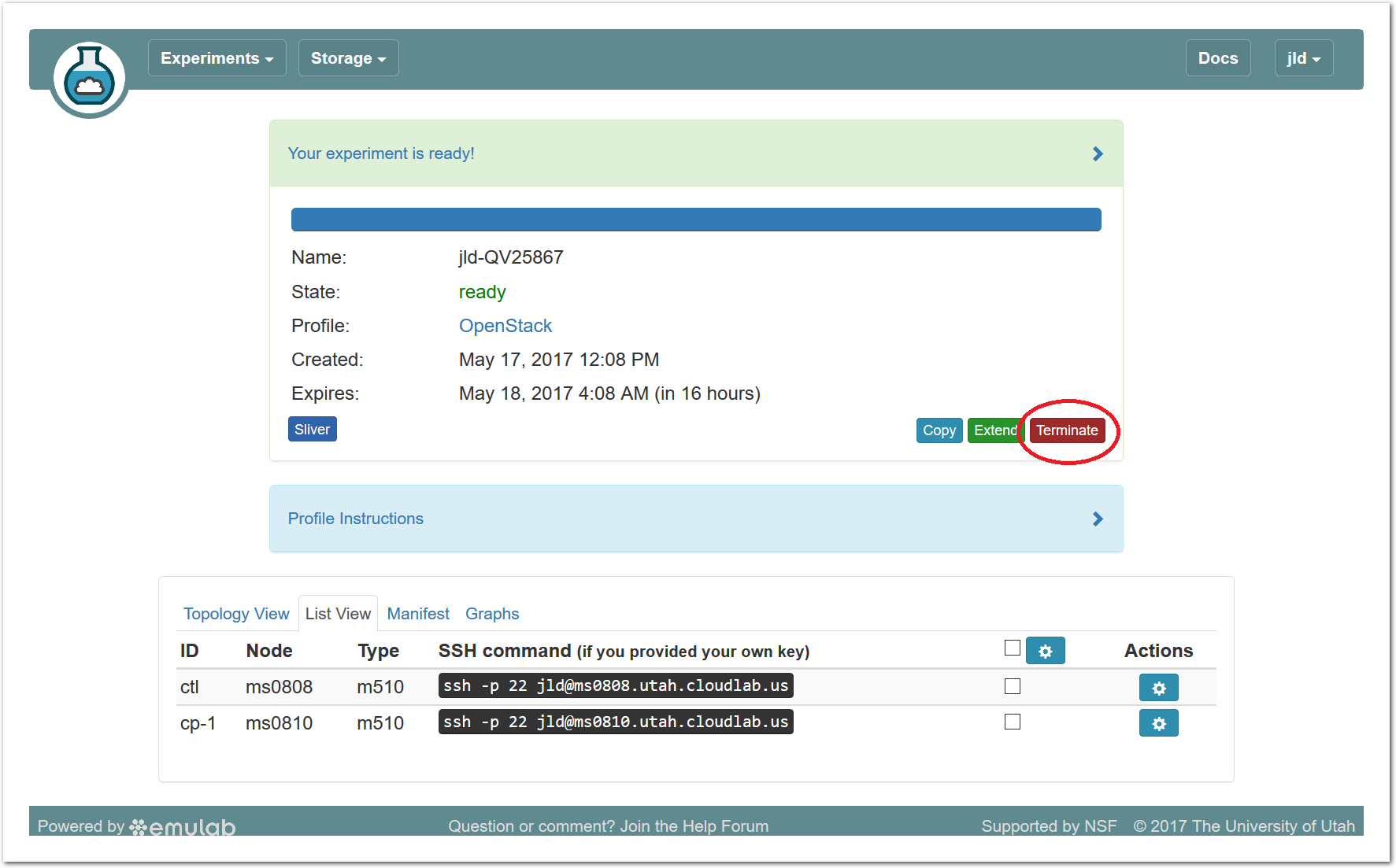

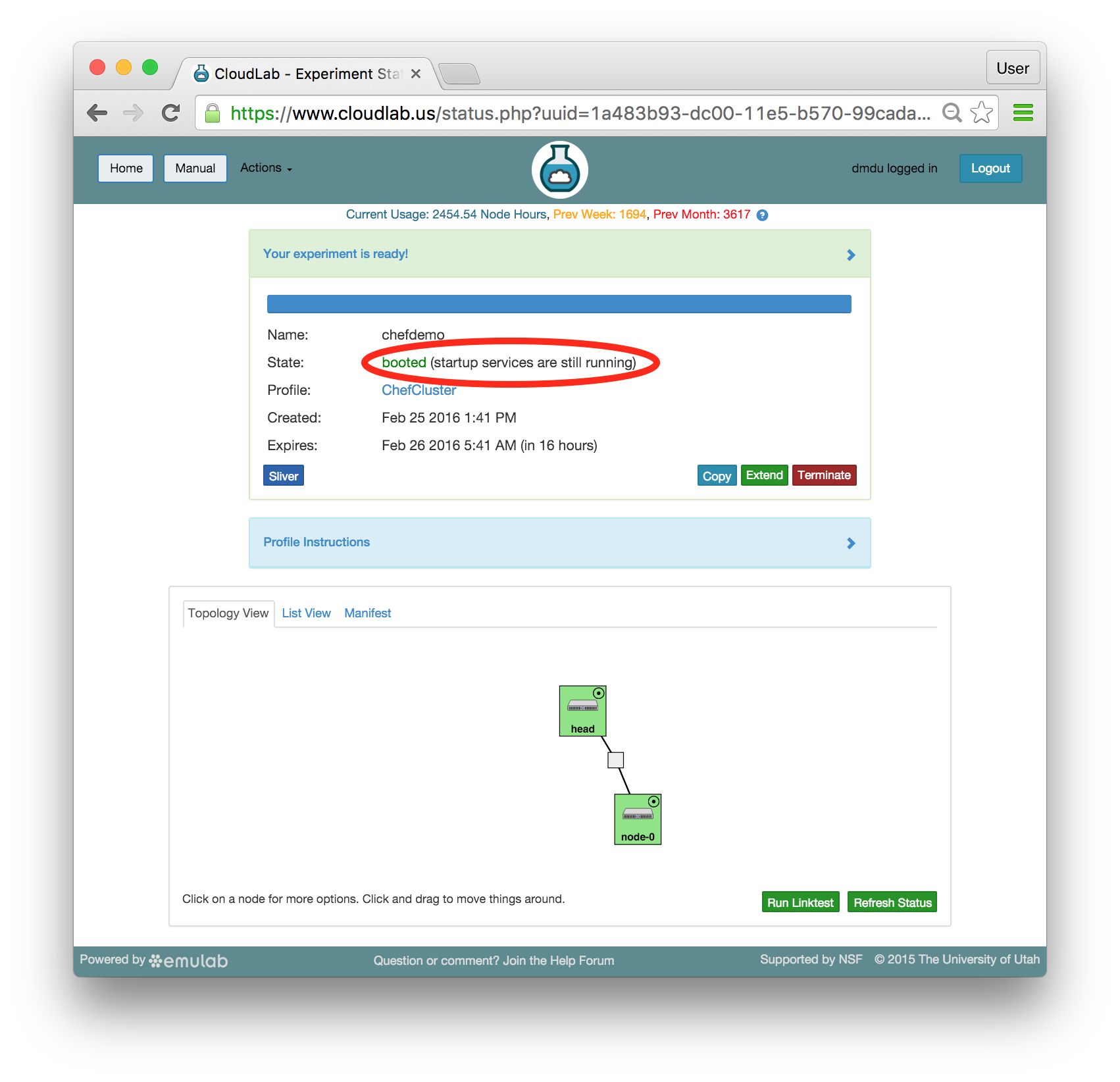

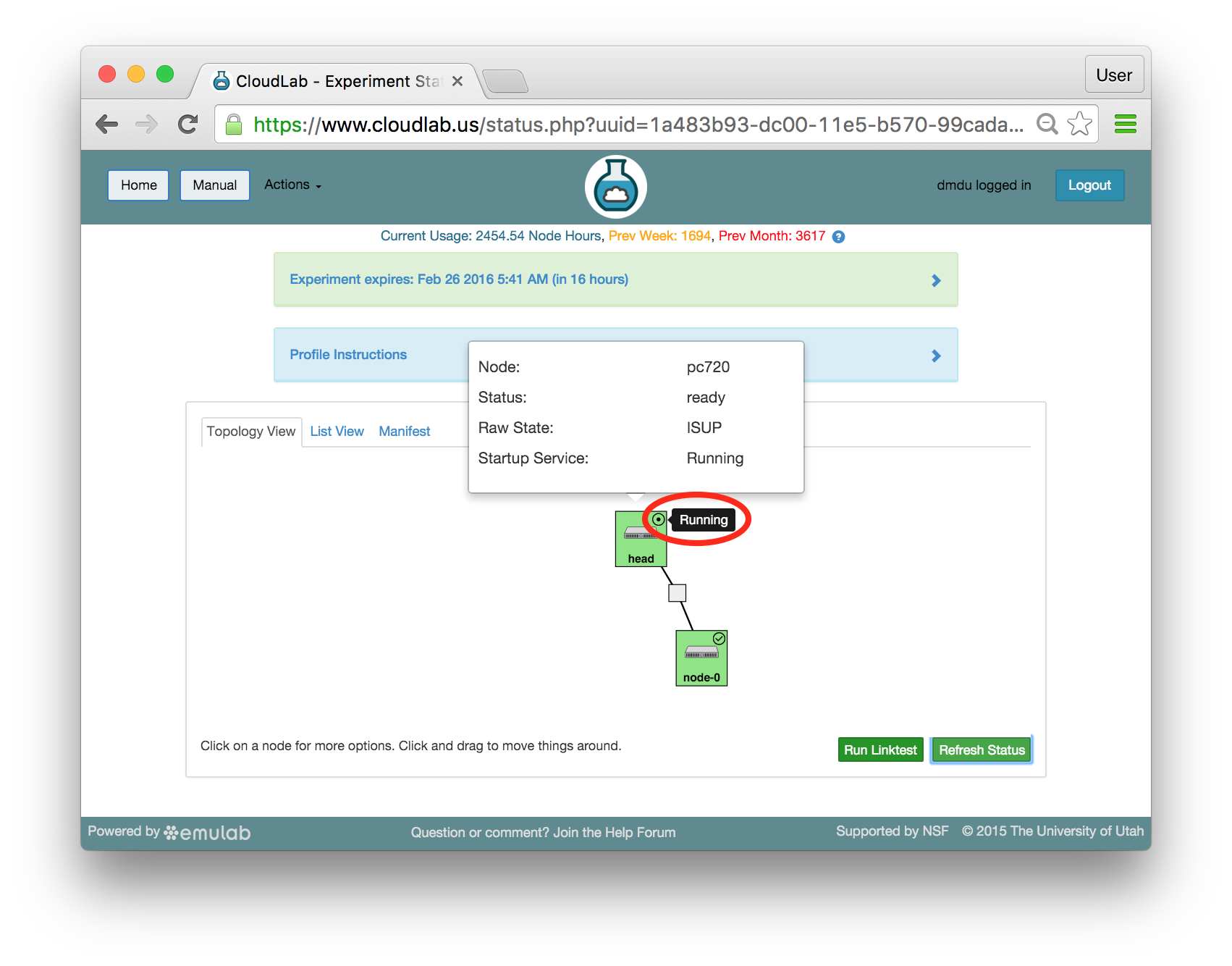

- Use your experimentWhen your experiment is ready to use, the progress bar will be complete, and you’ll be given a lot of new options at the bottom of the screen.

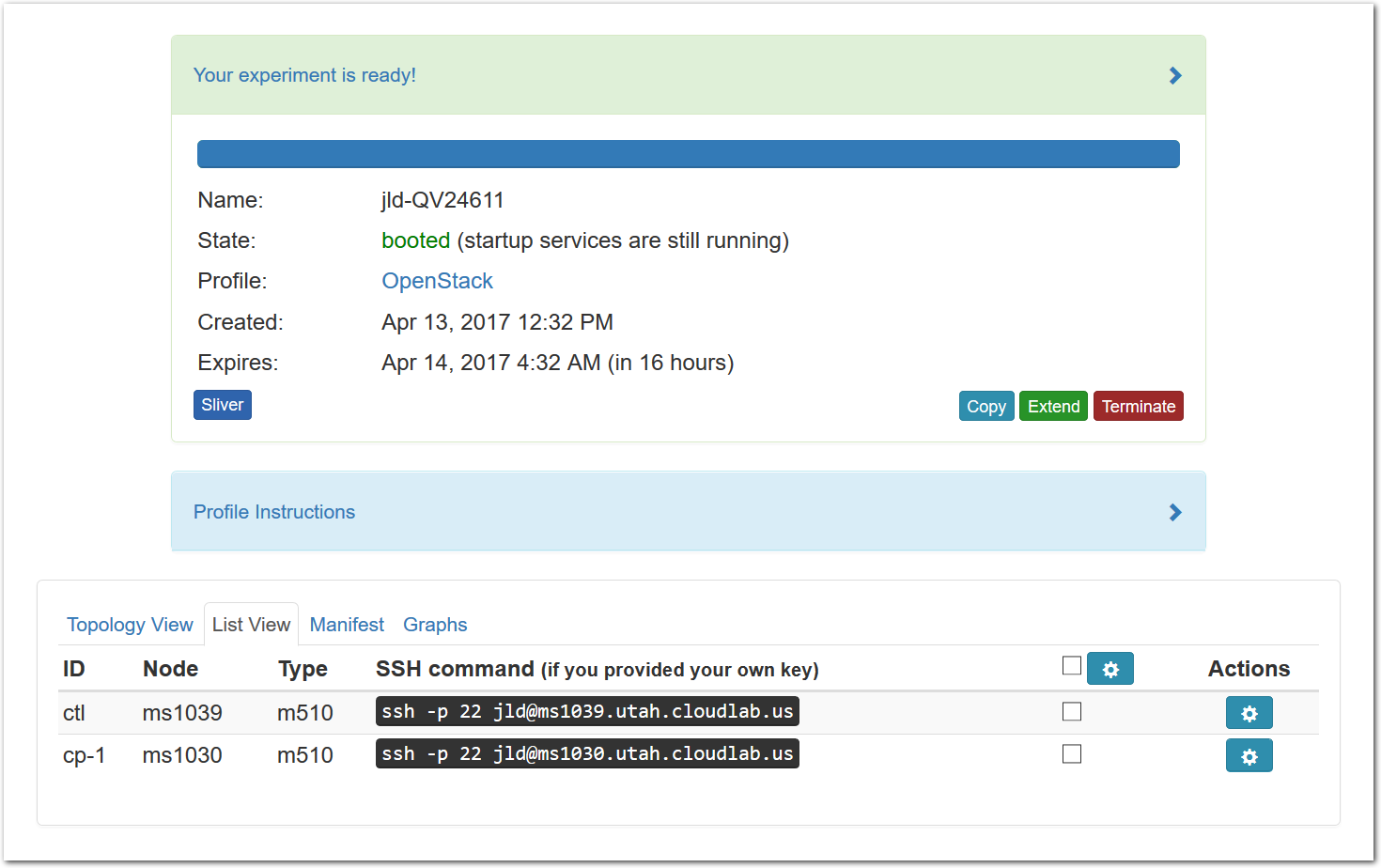

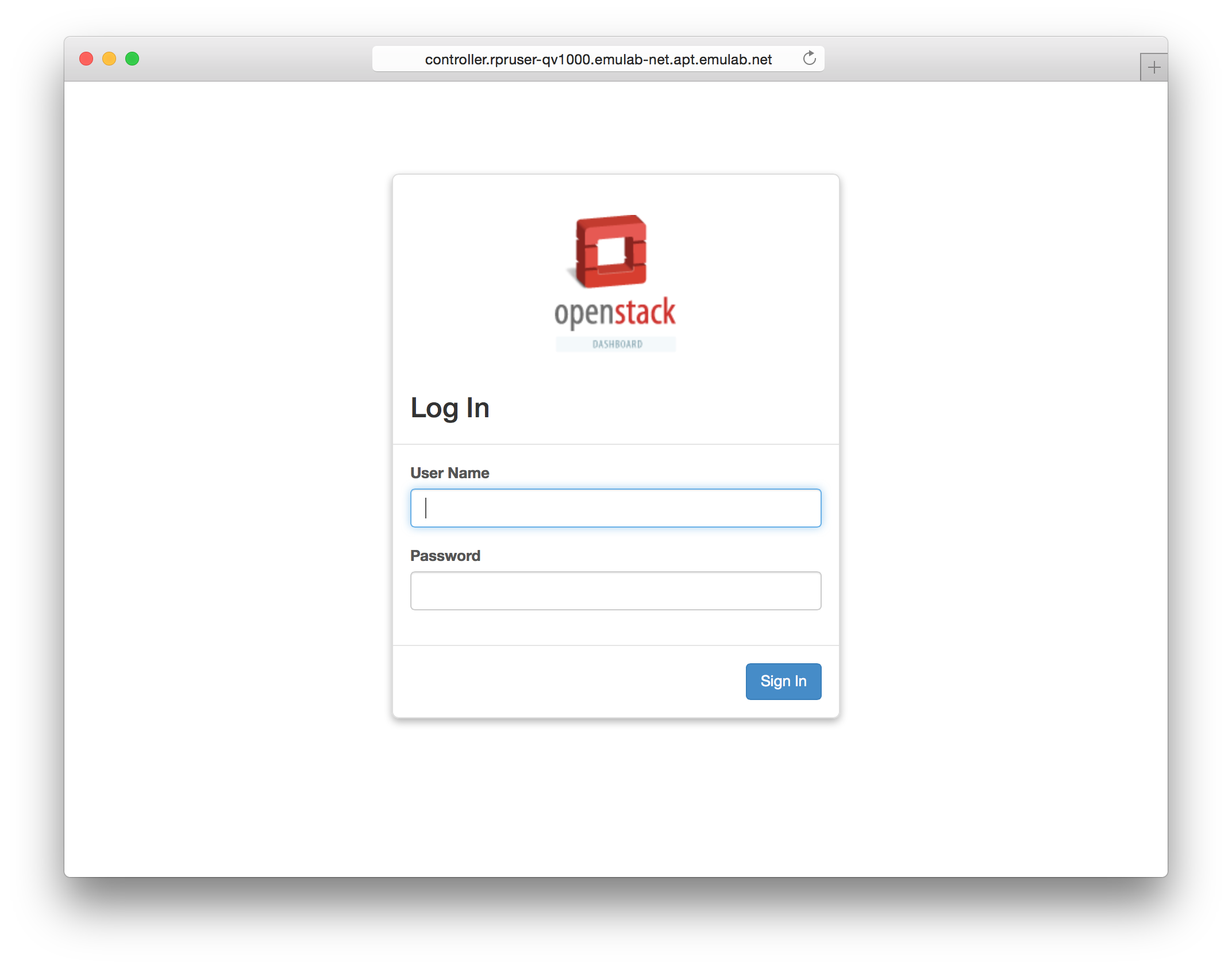

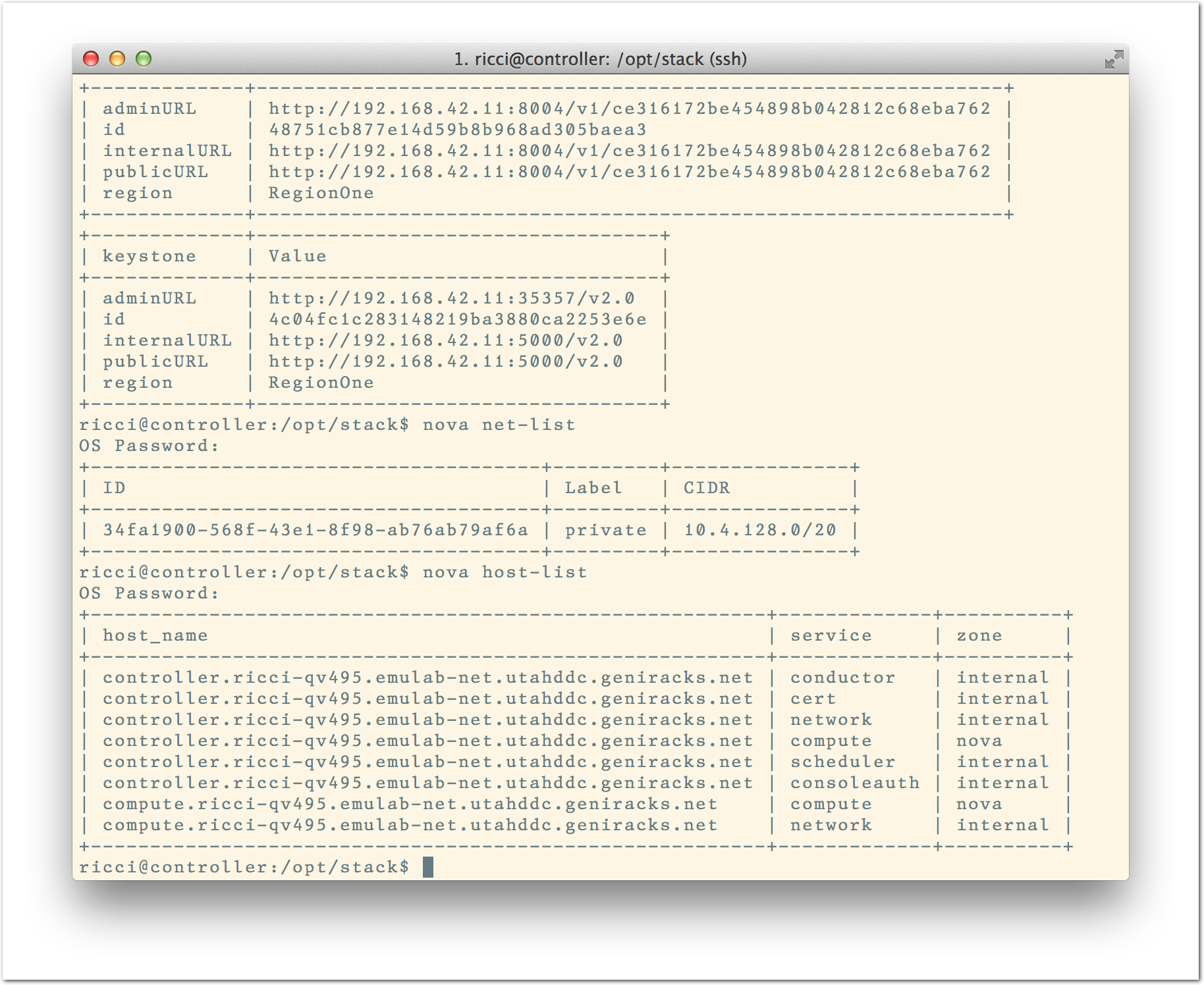

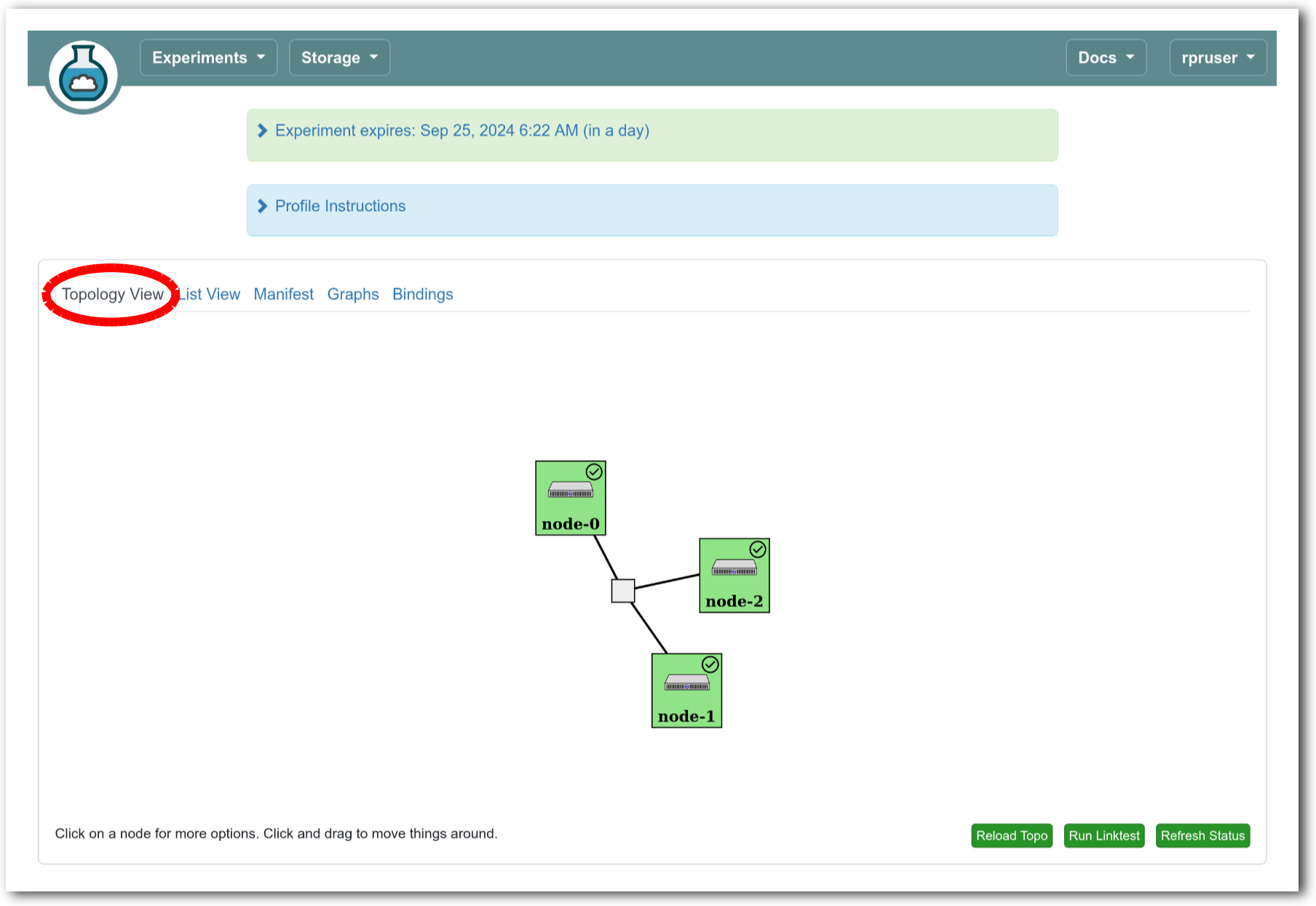

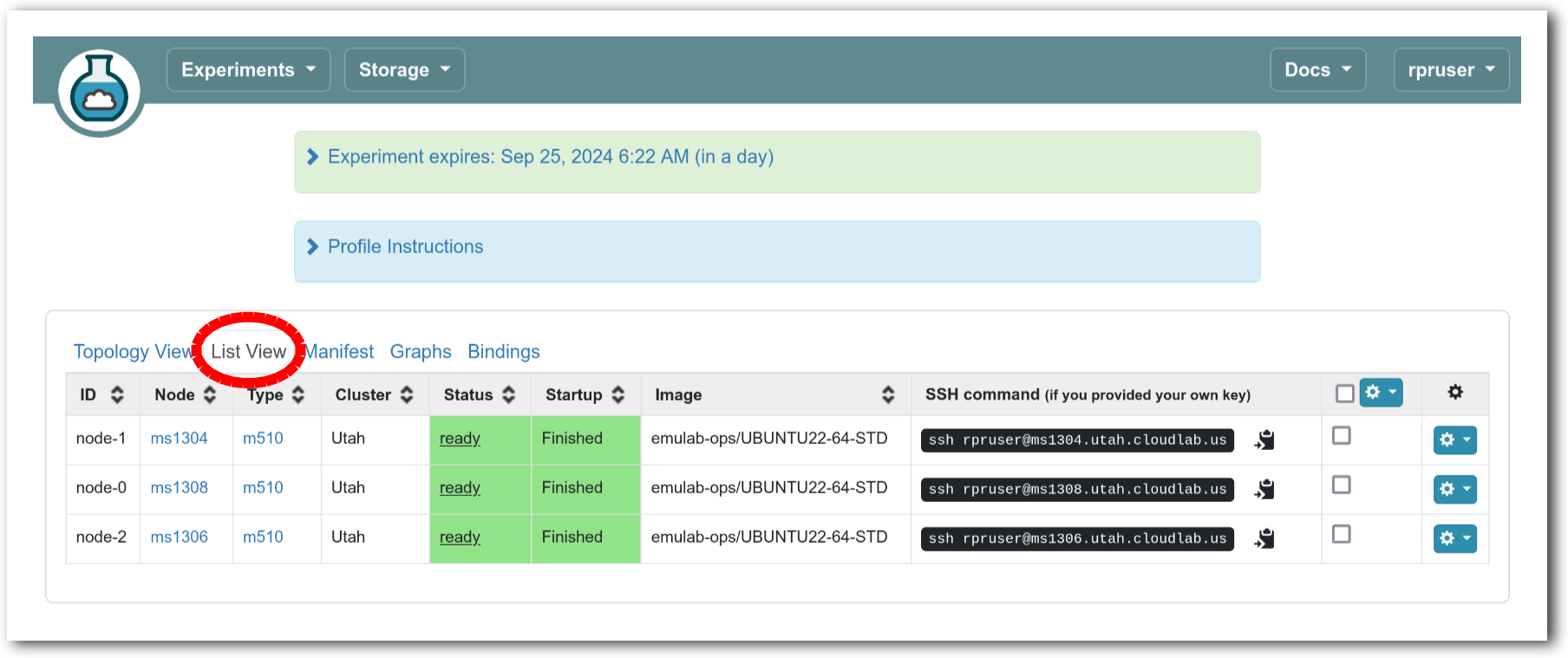

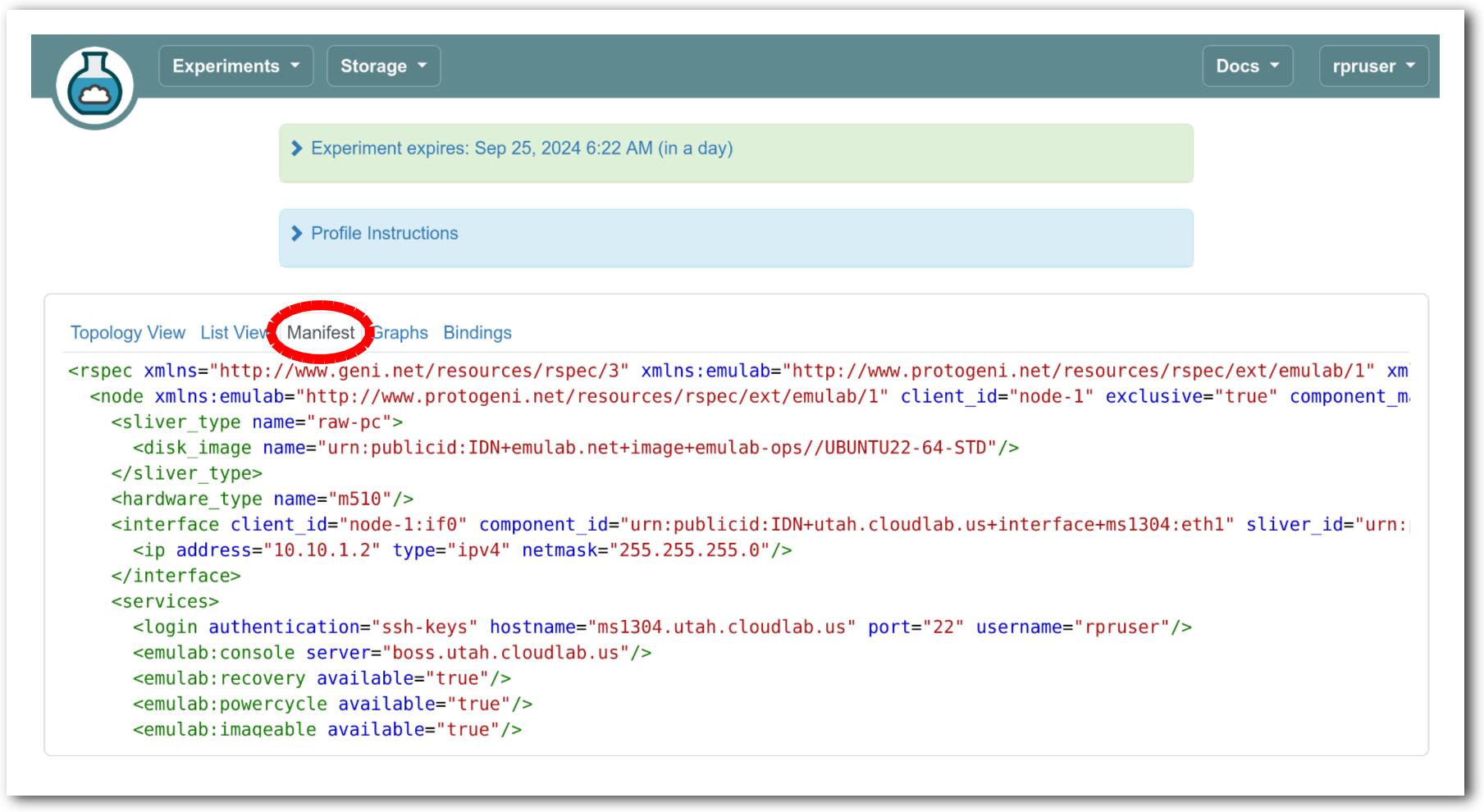

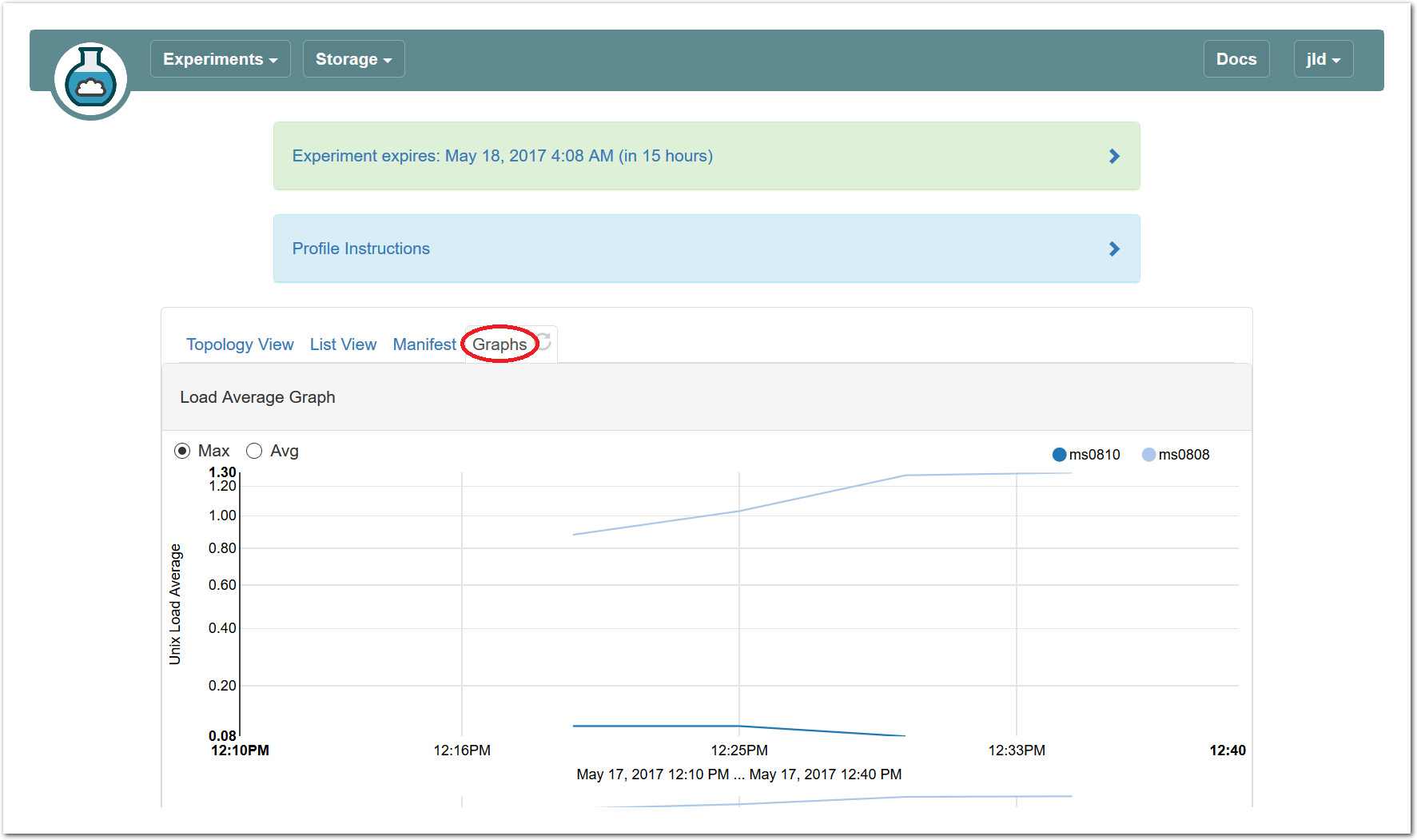

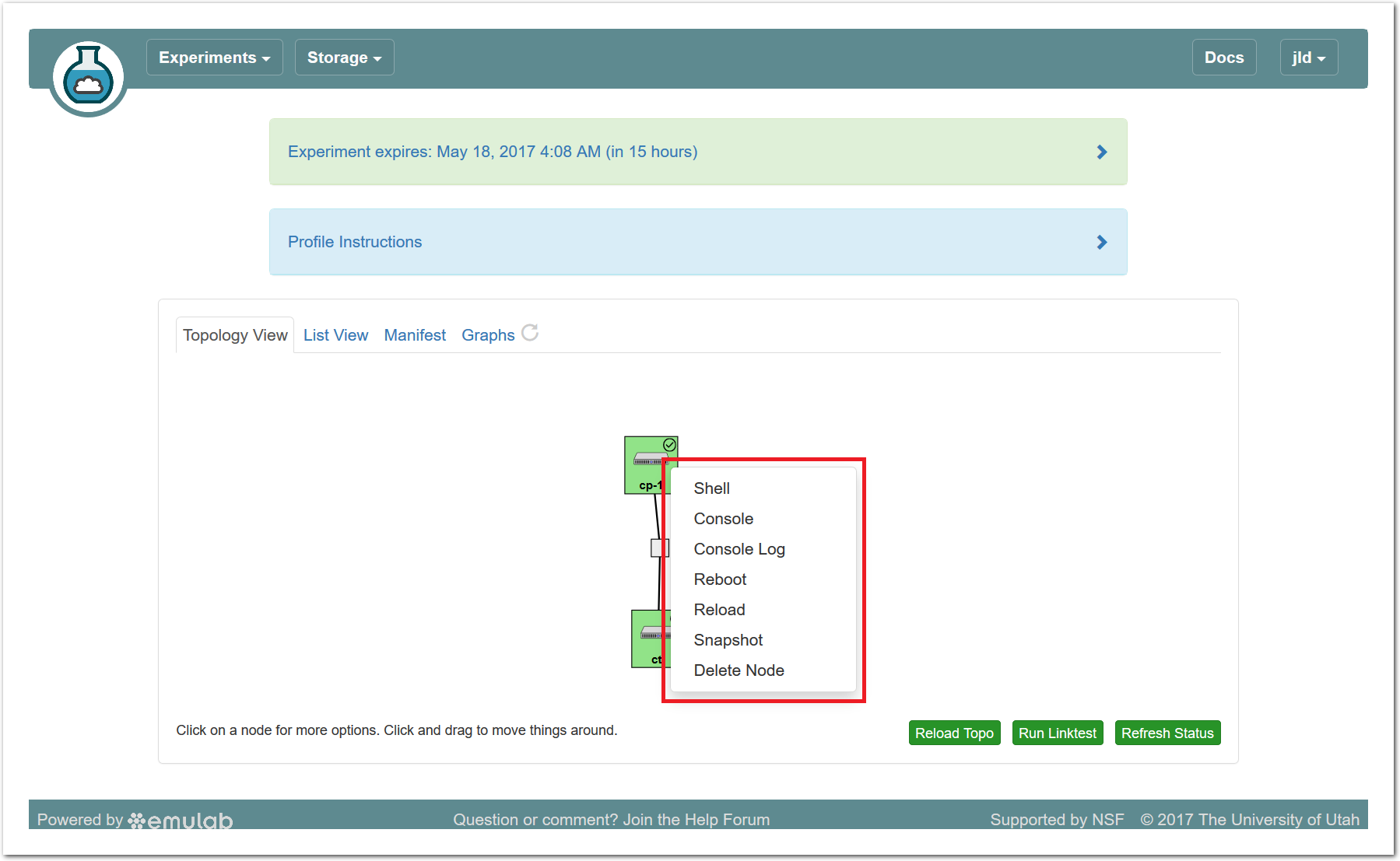

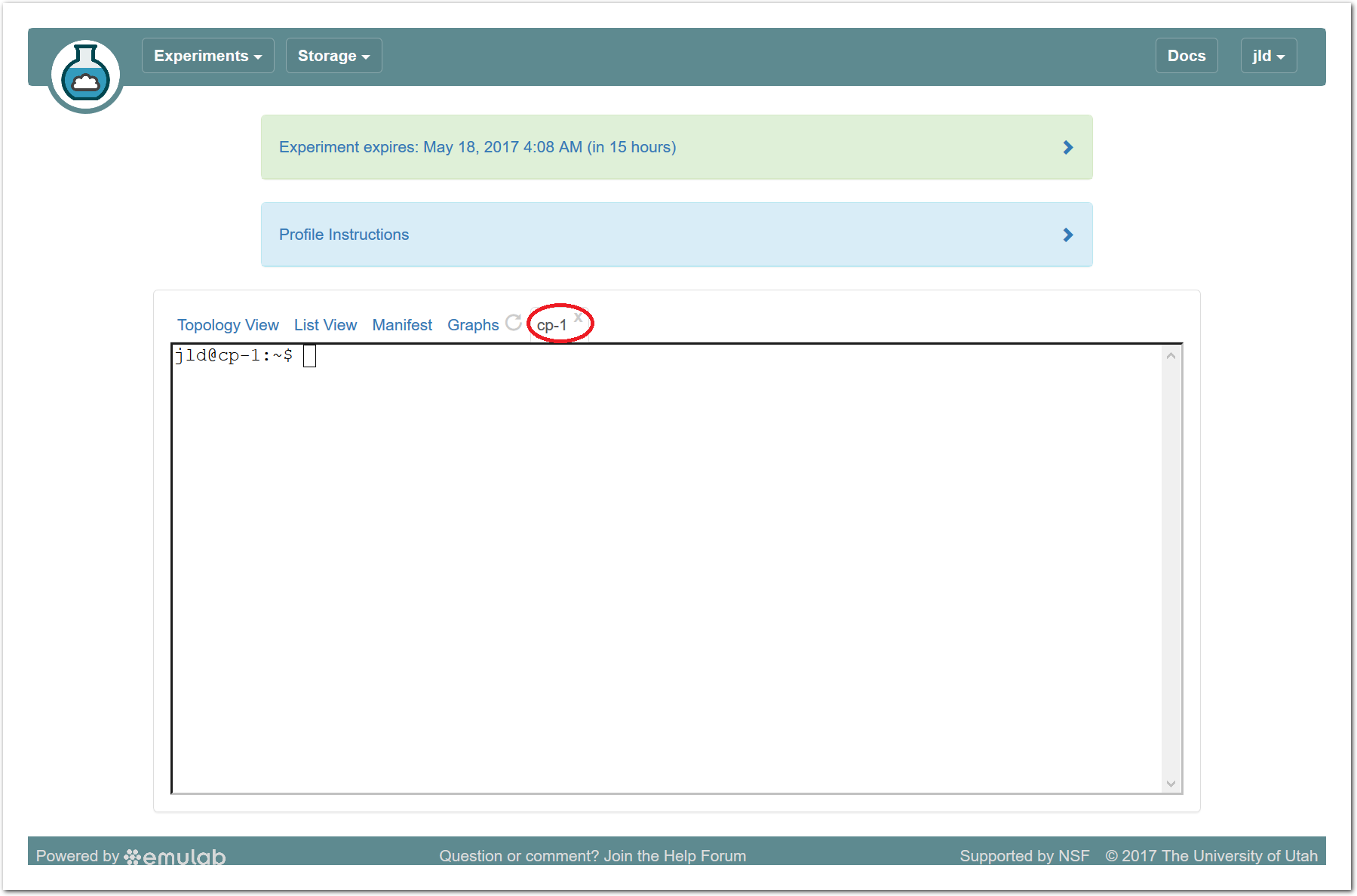

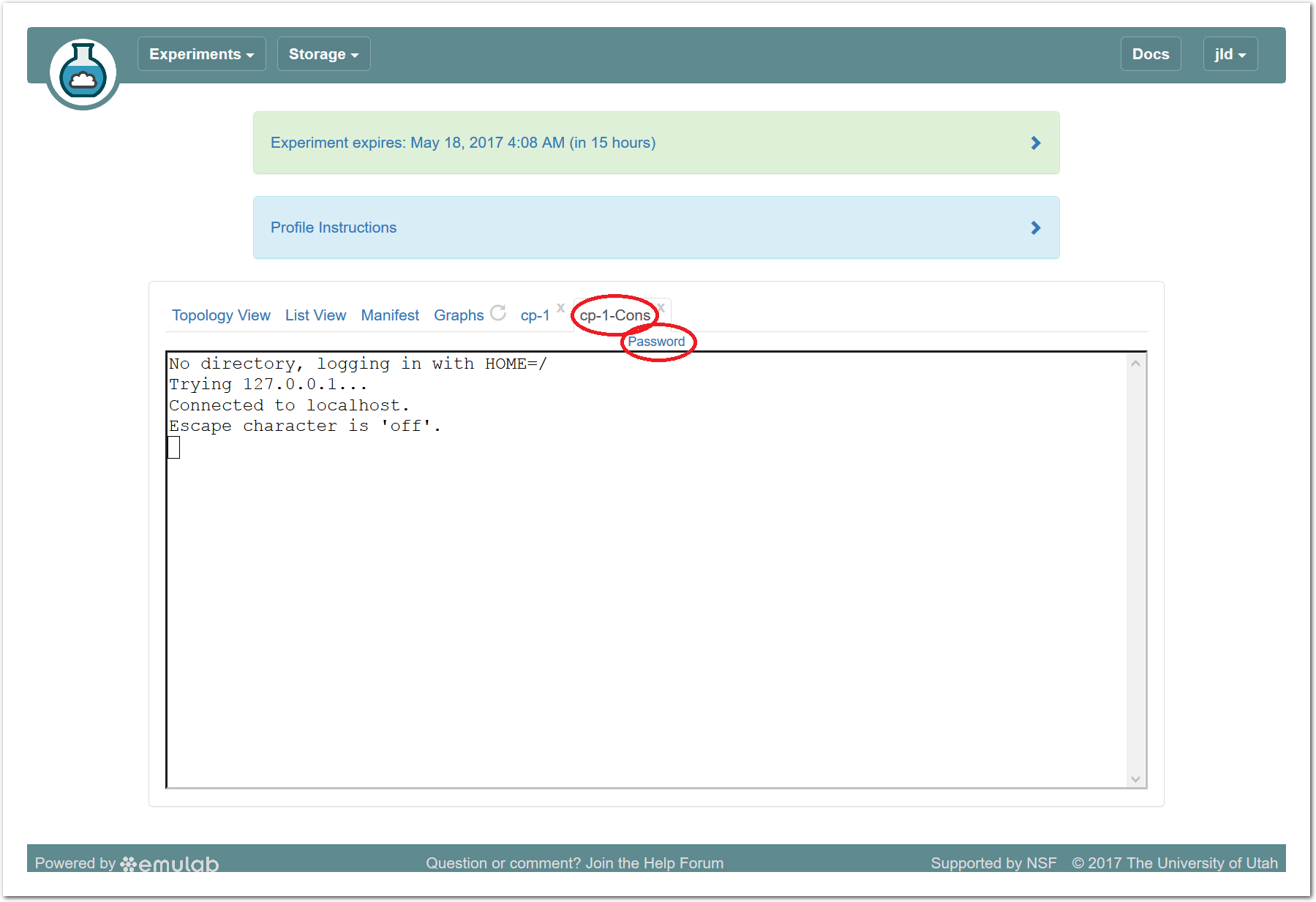

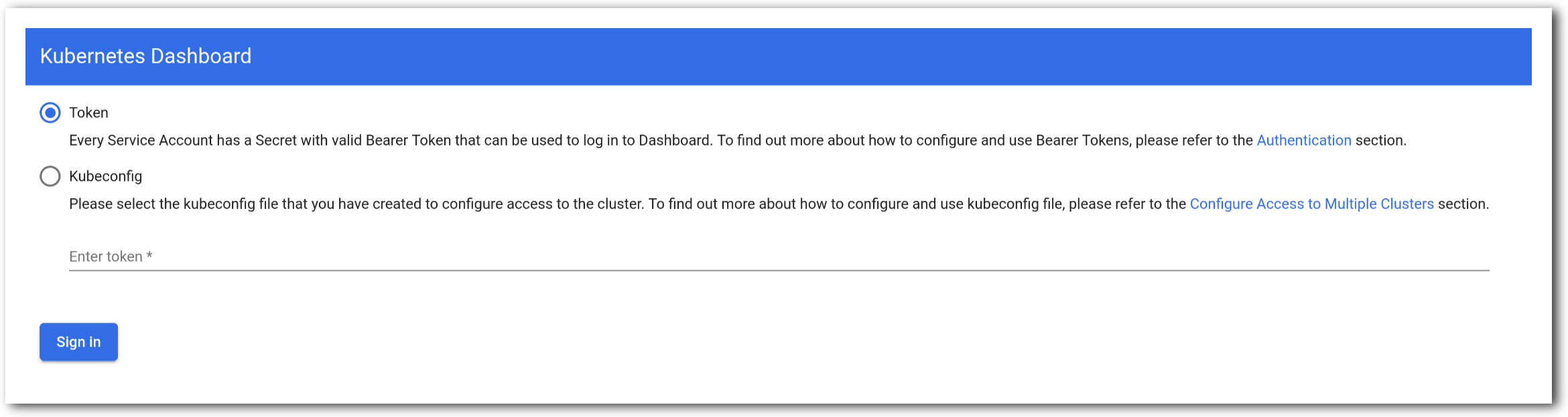

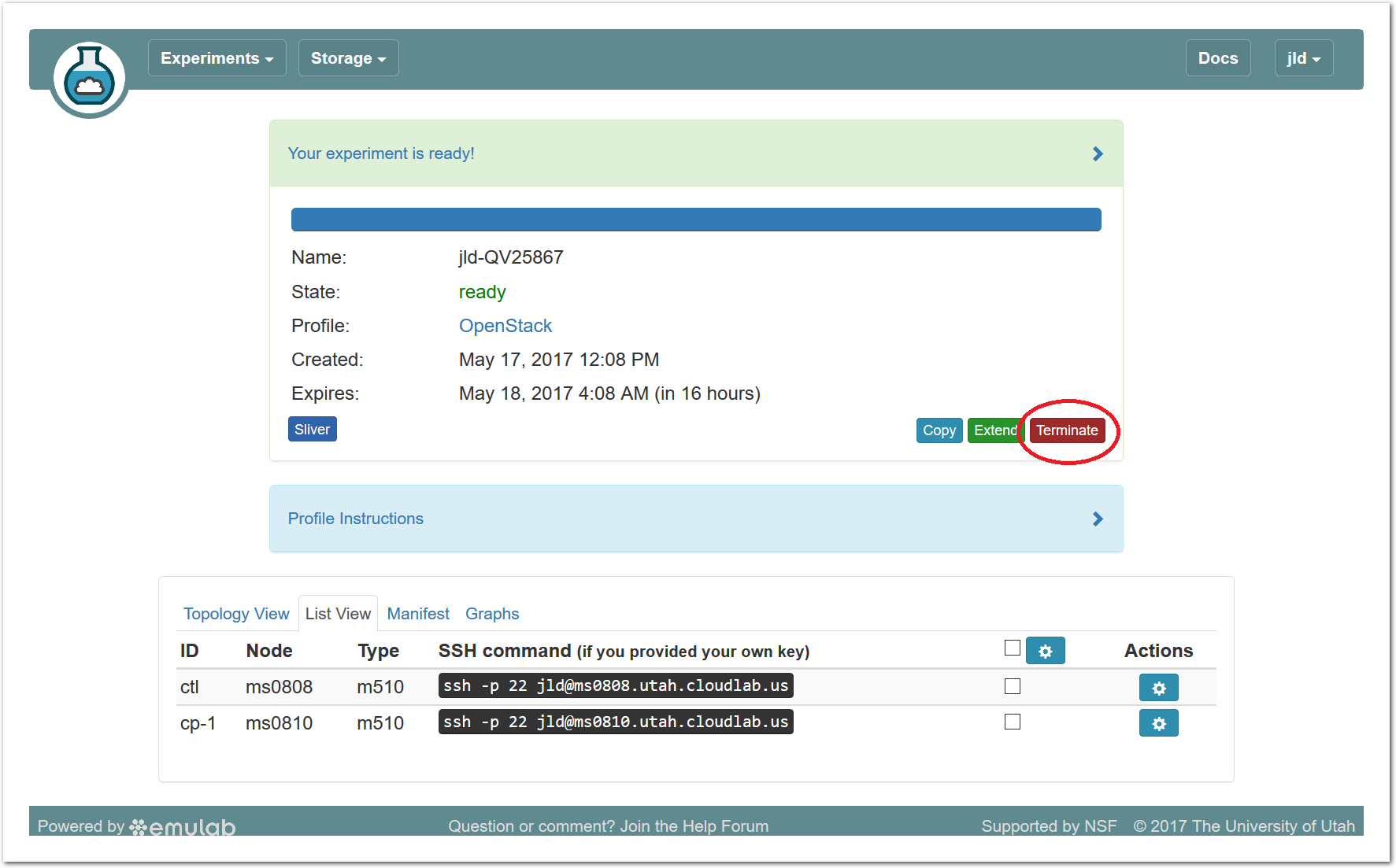

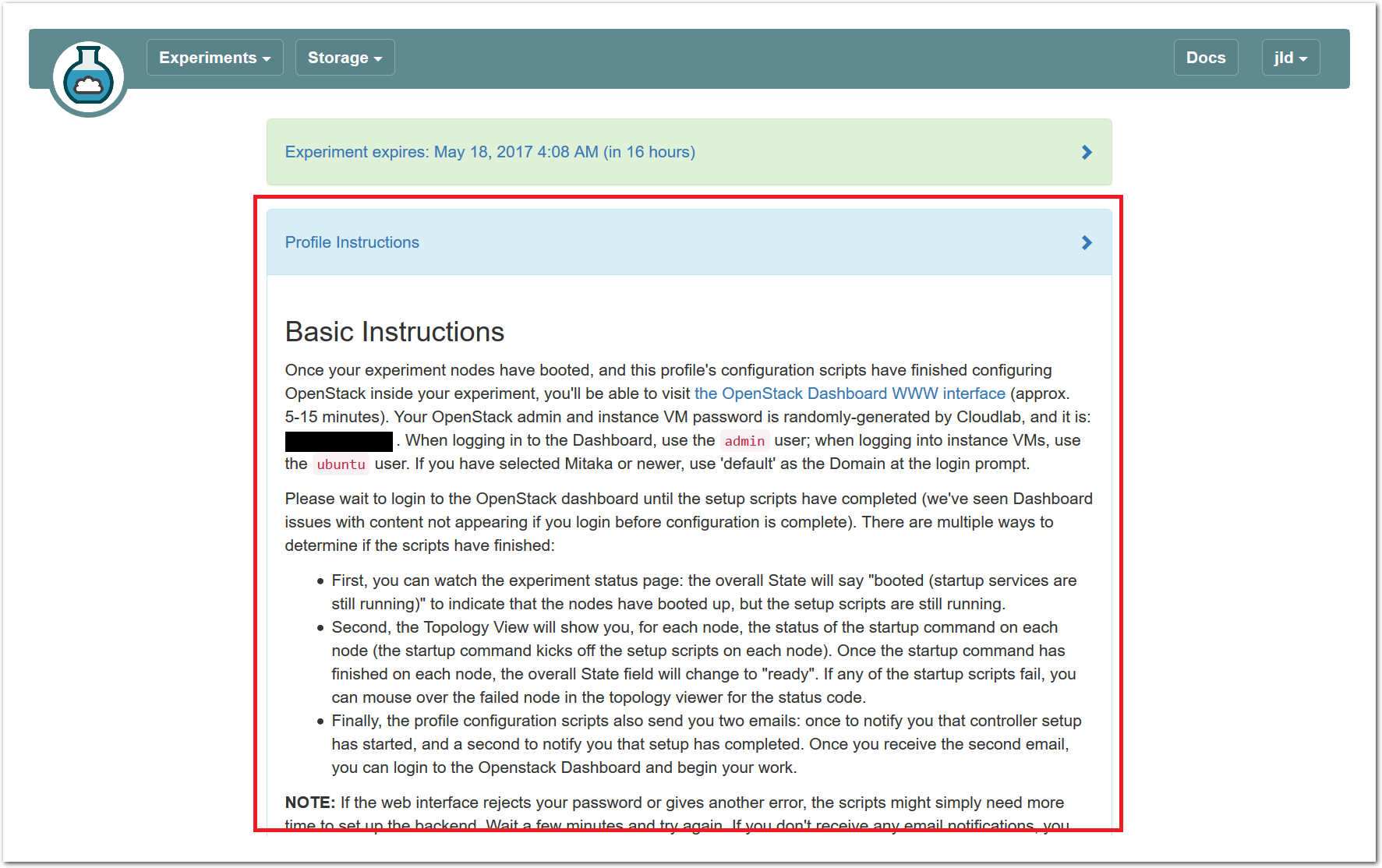

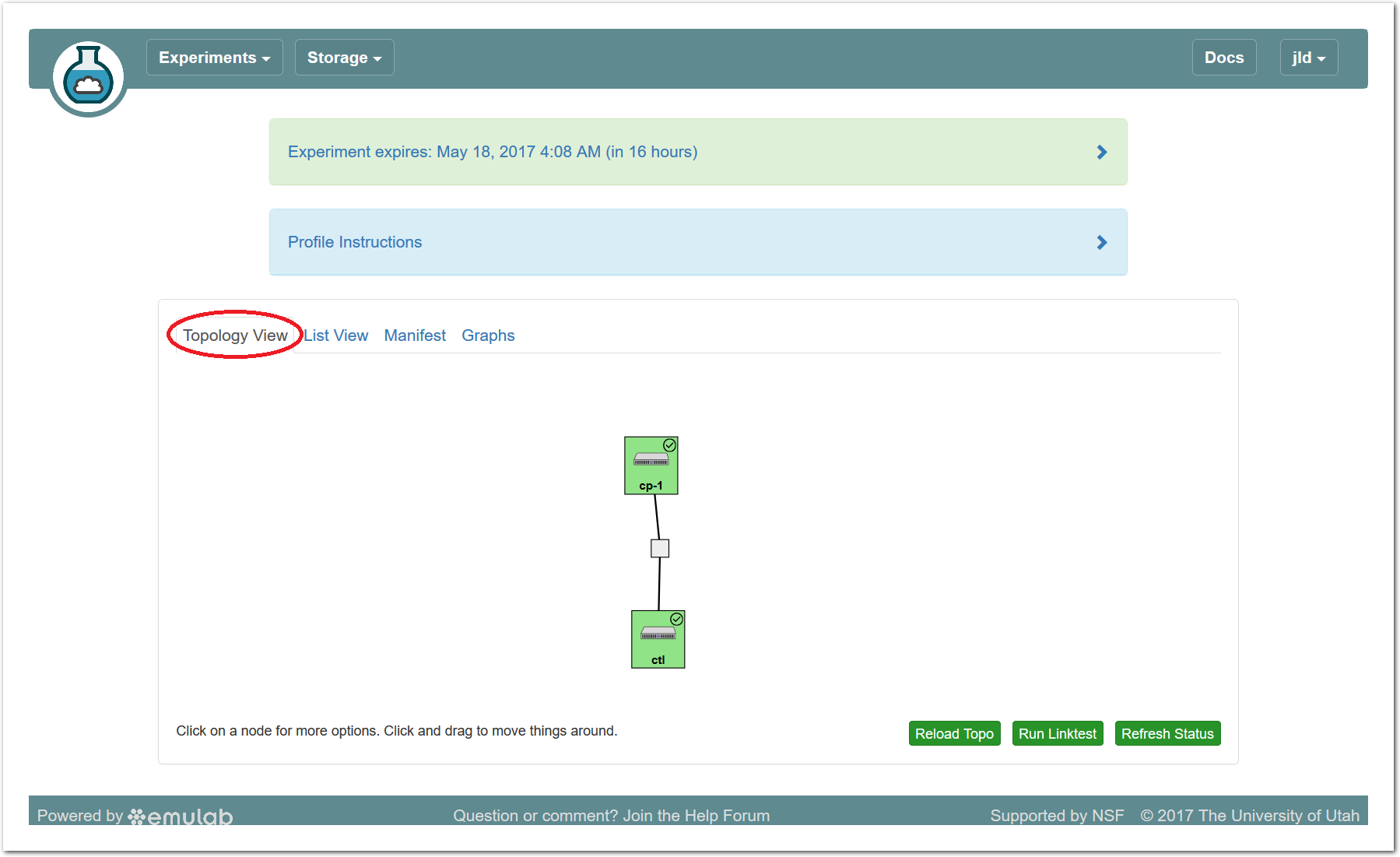

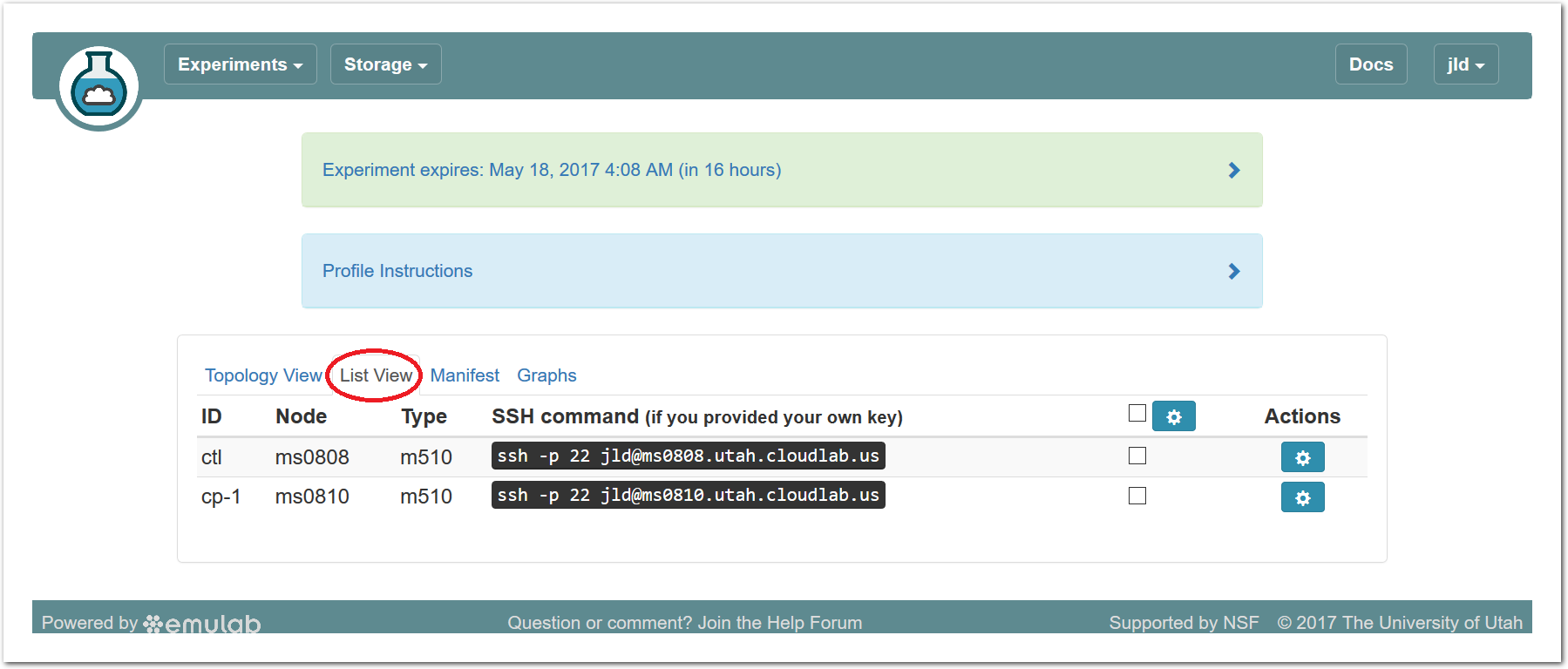

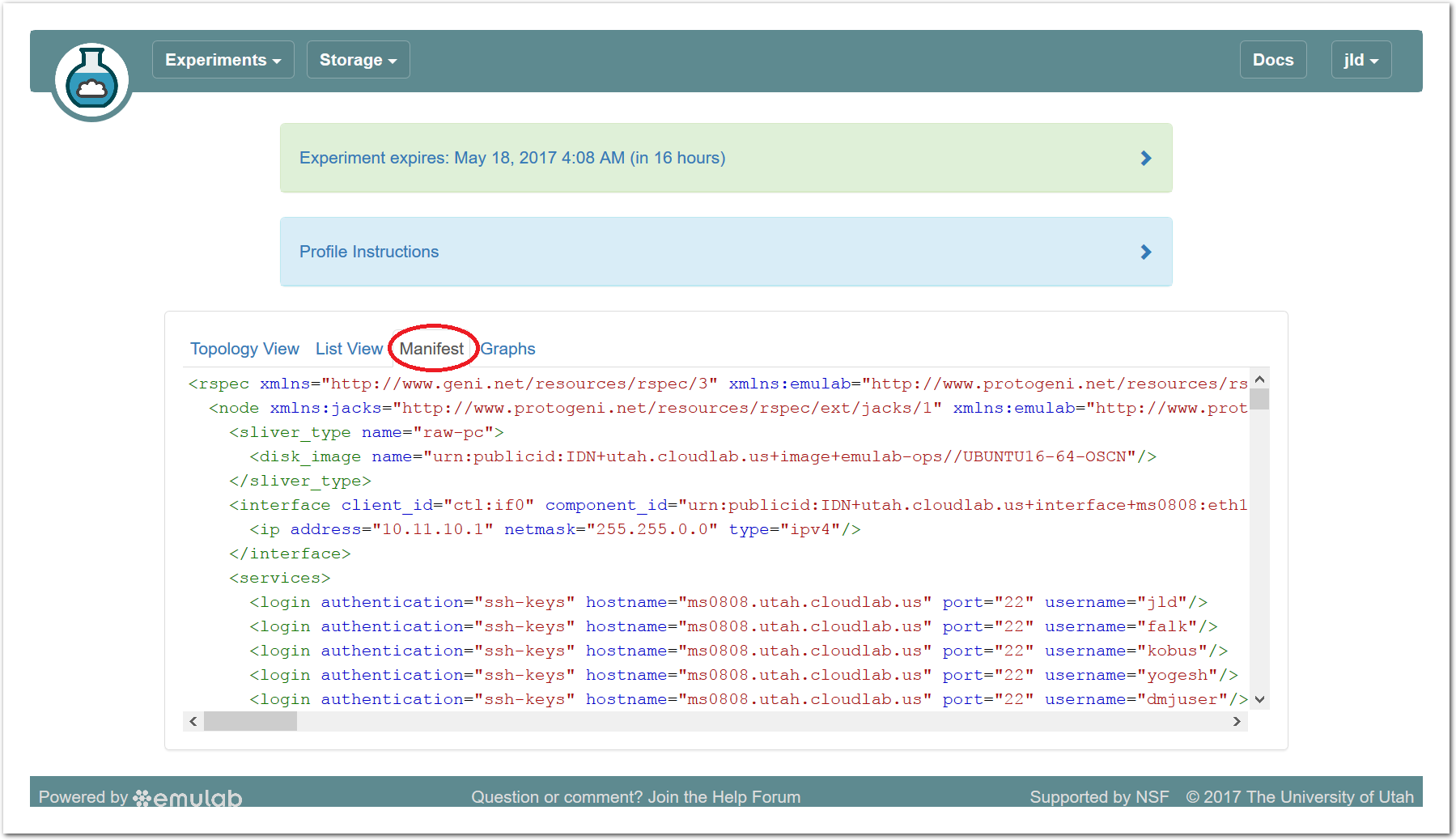

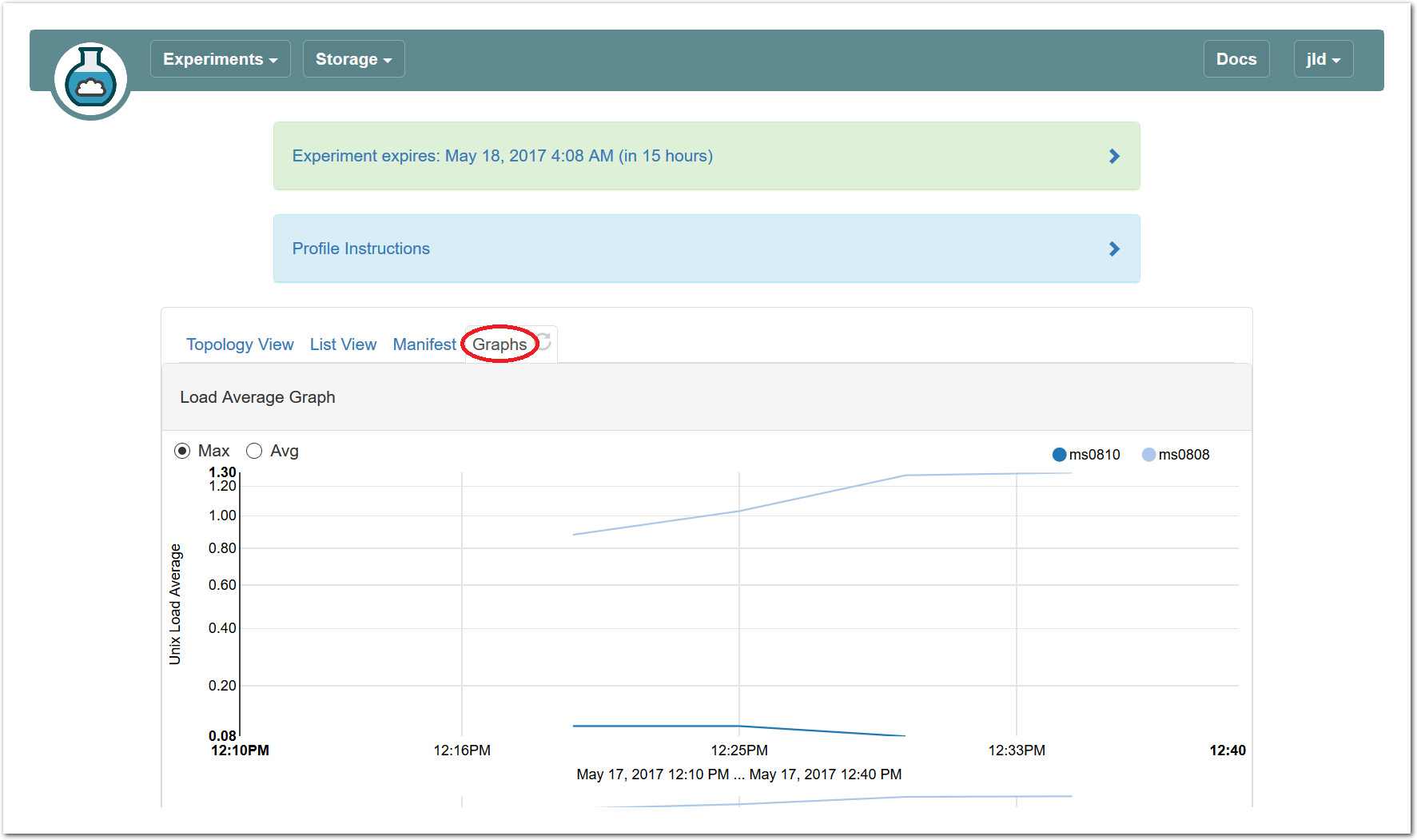

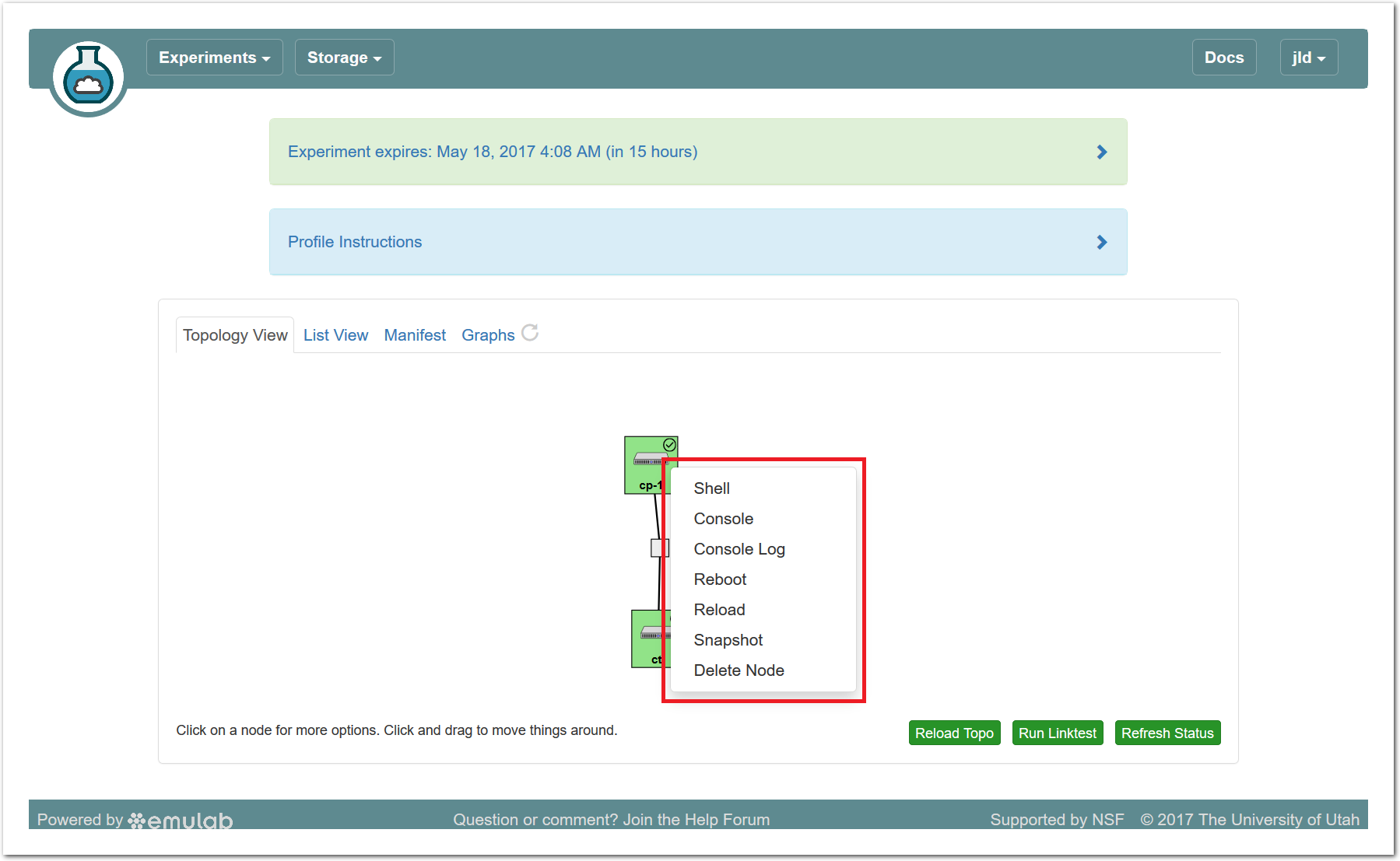

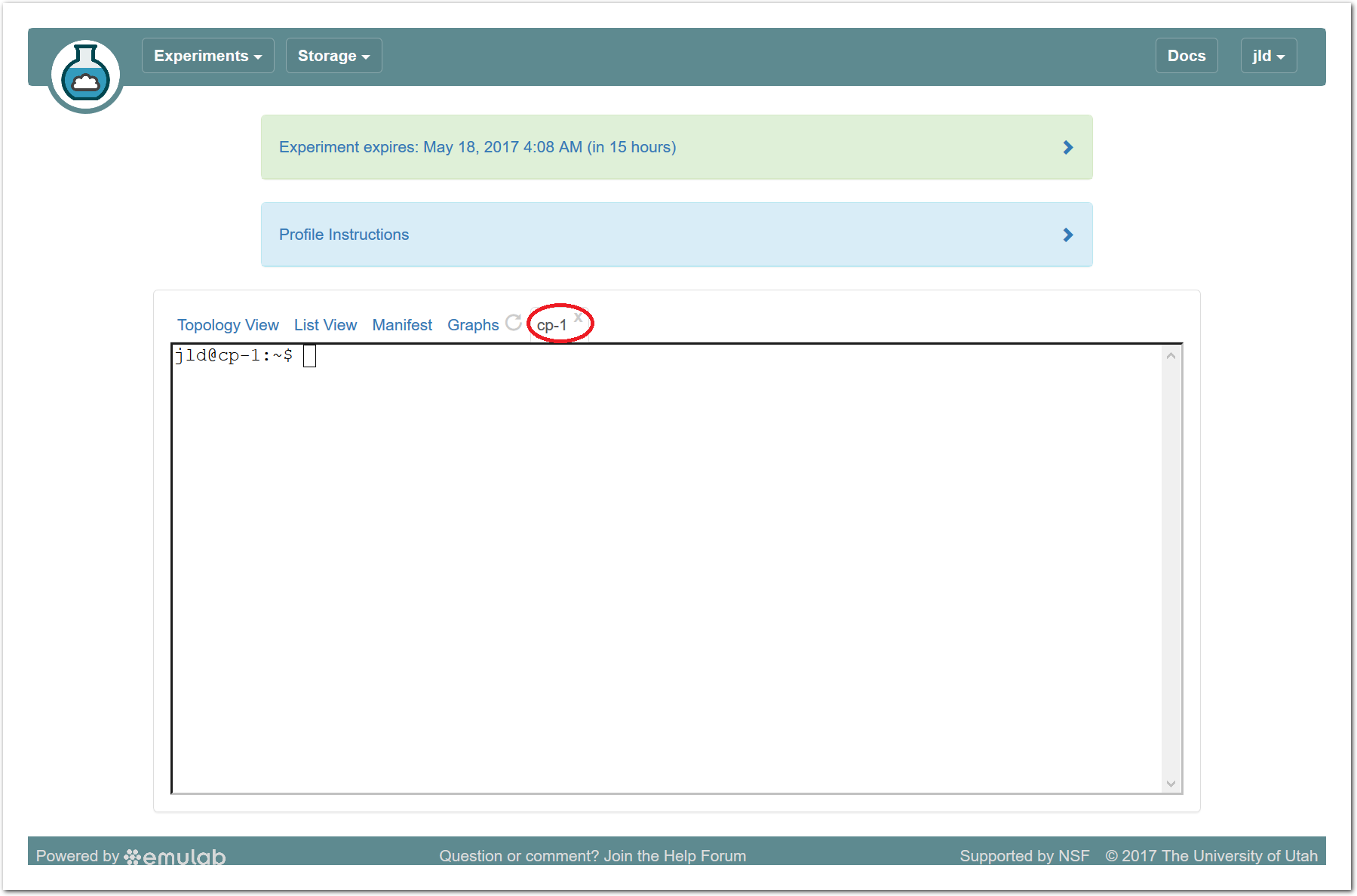

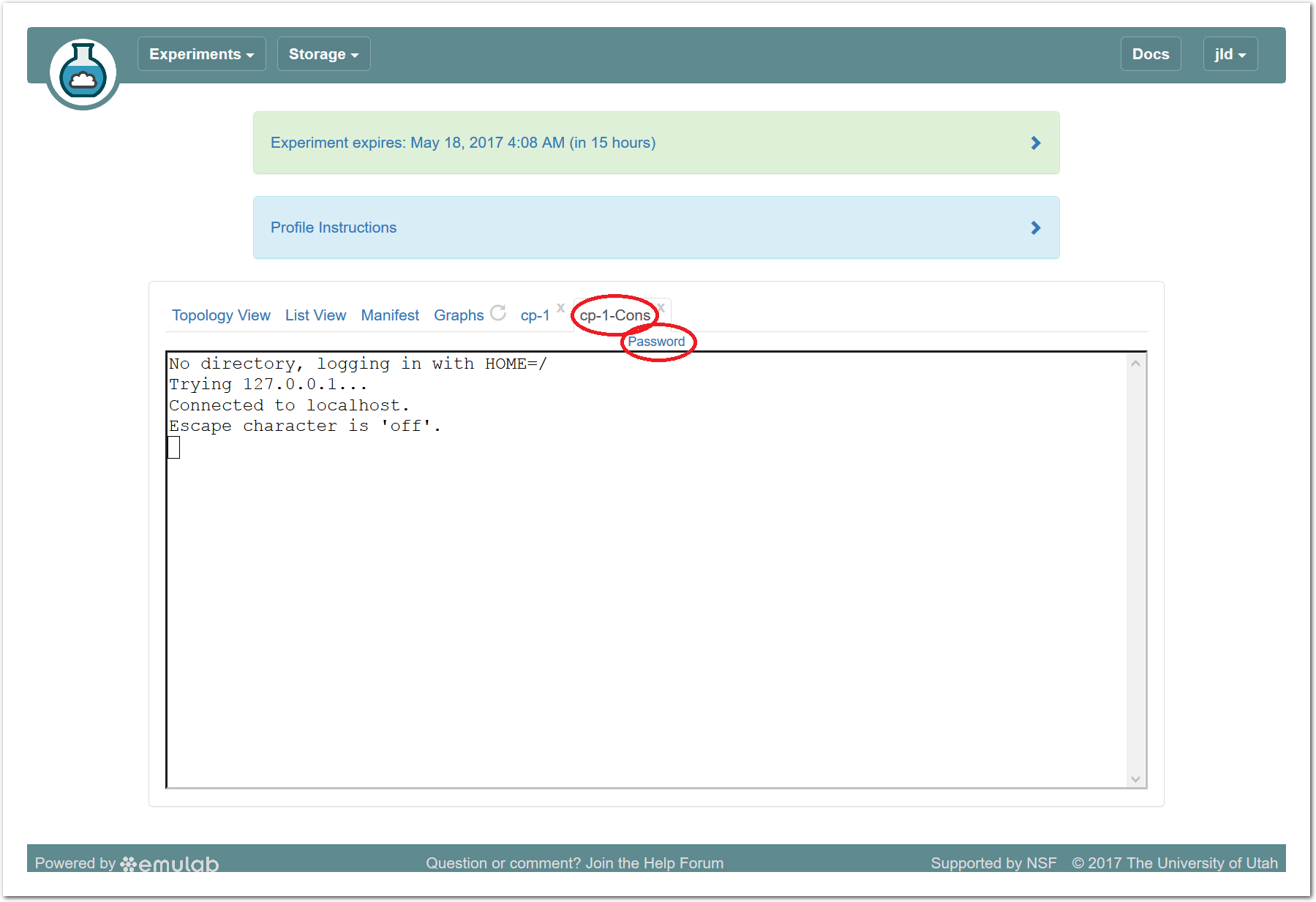

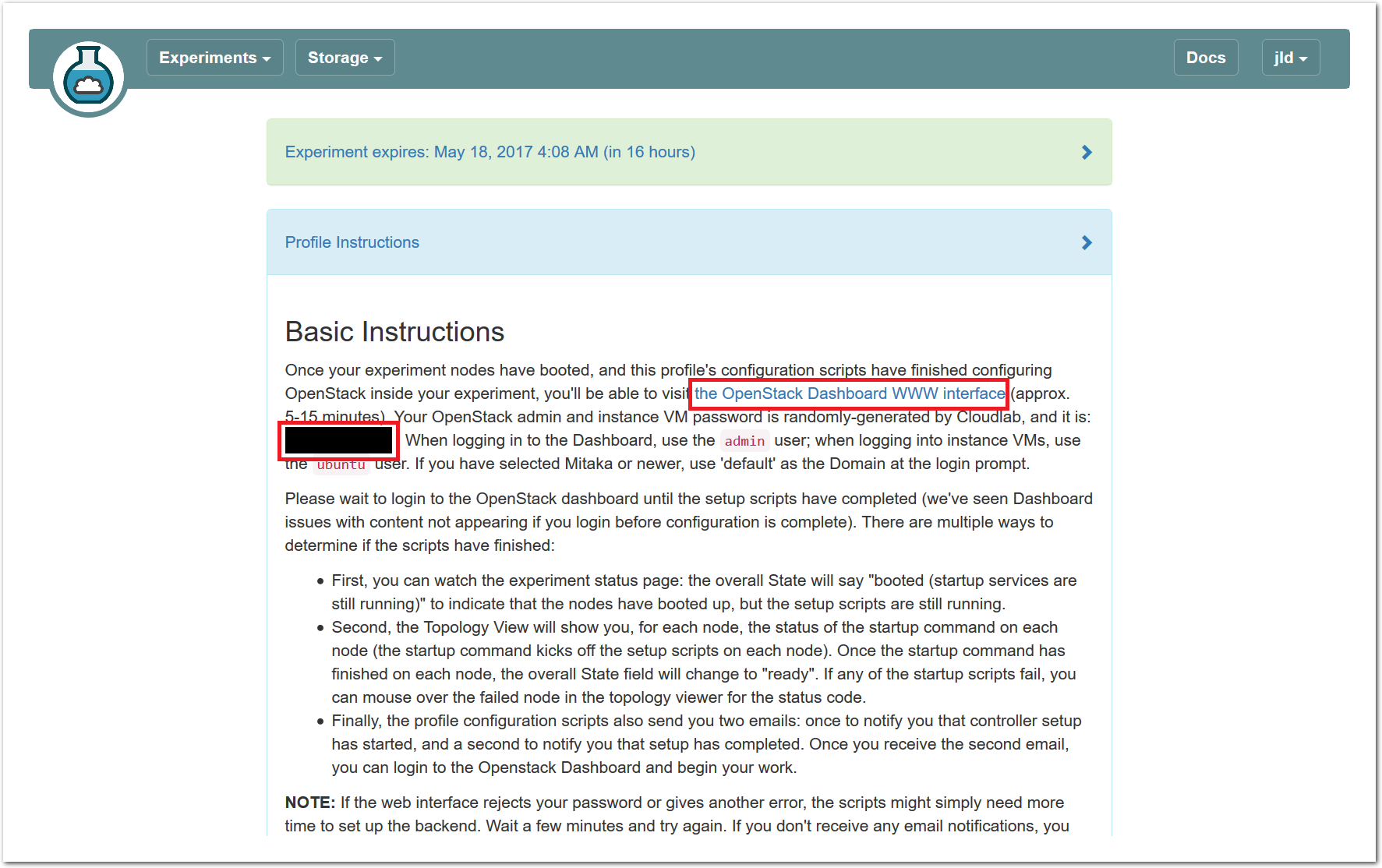

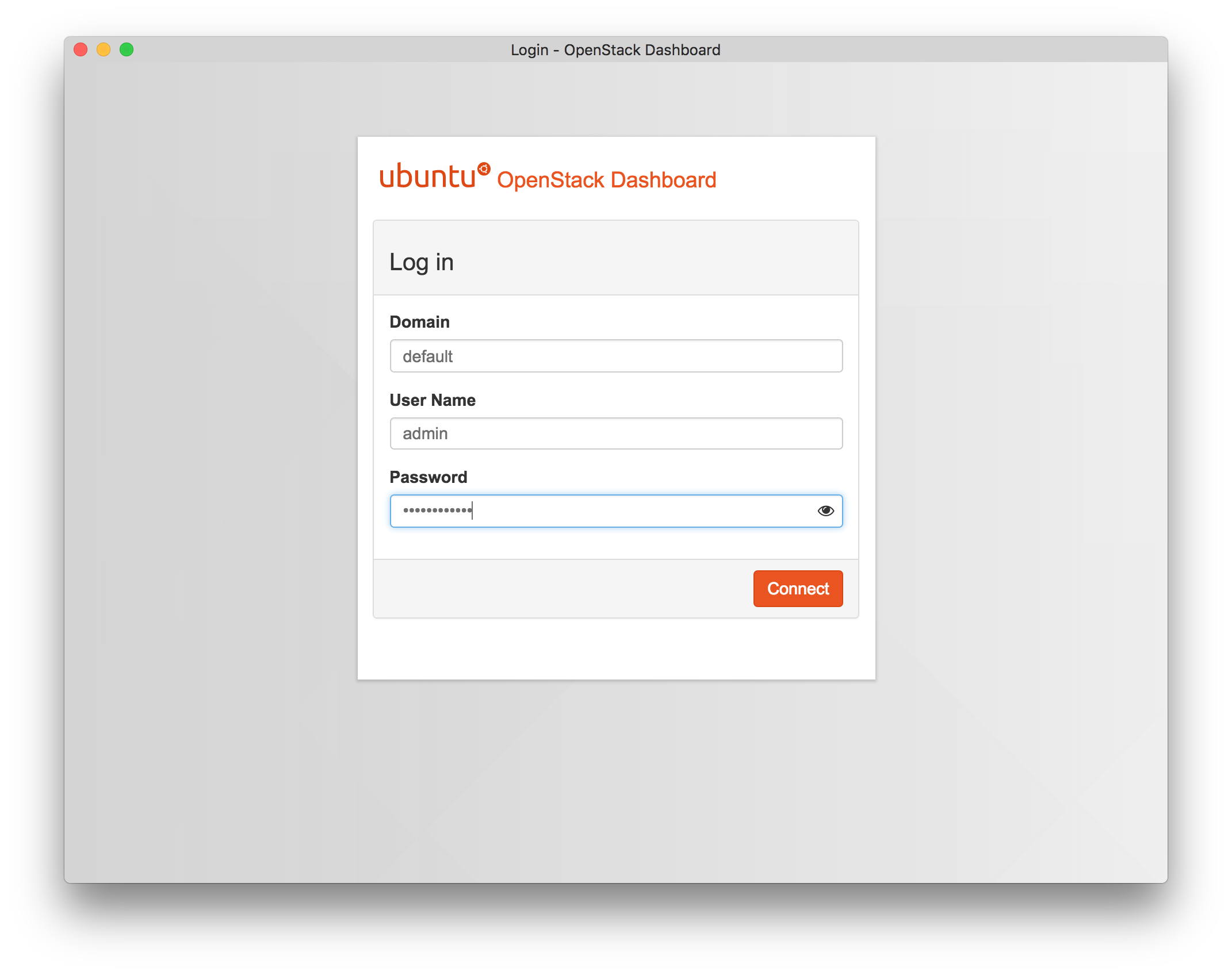

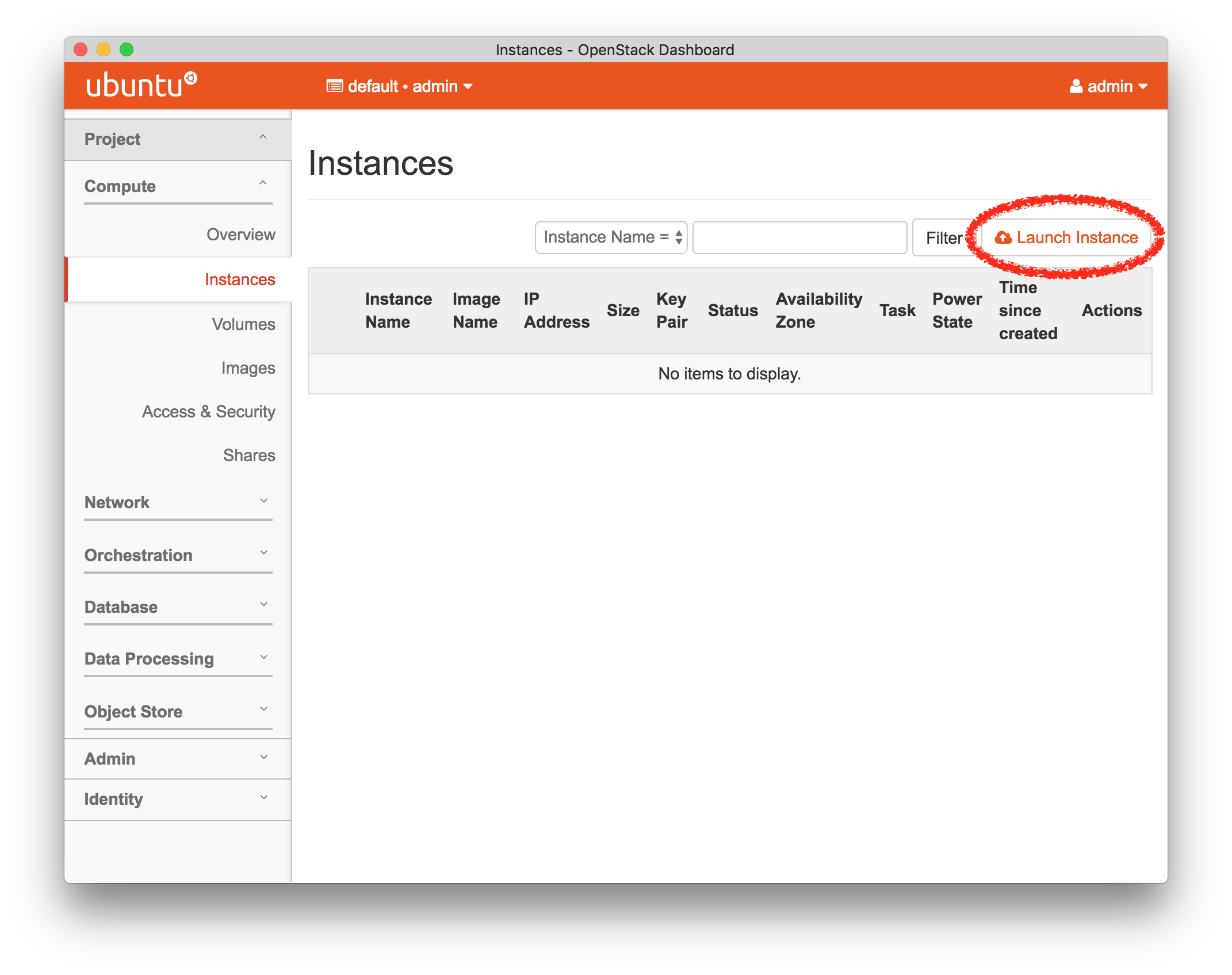

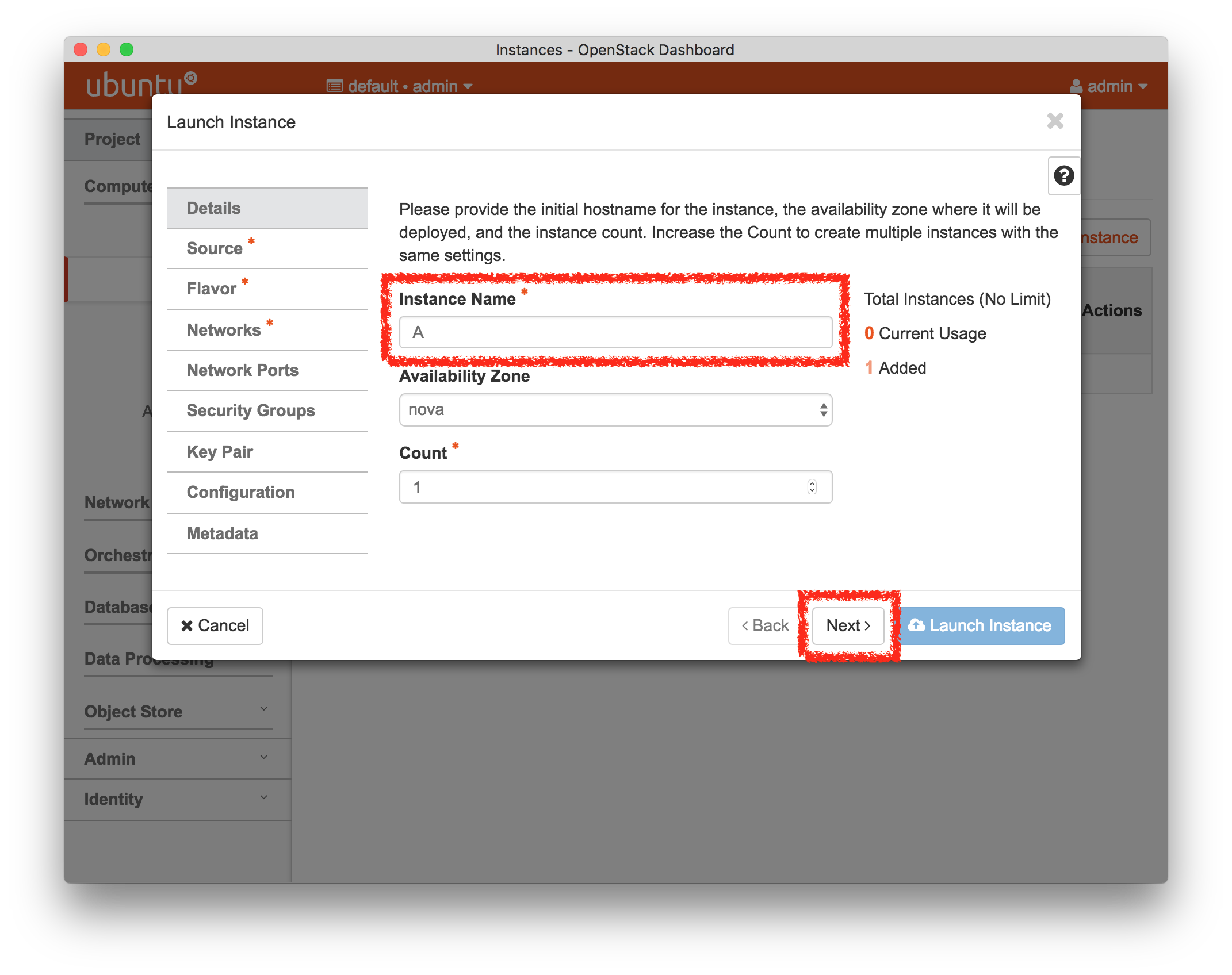

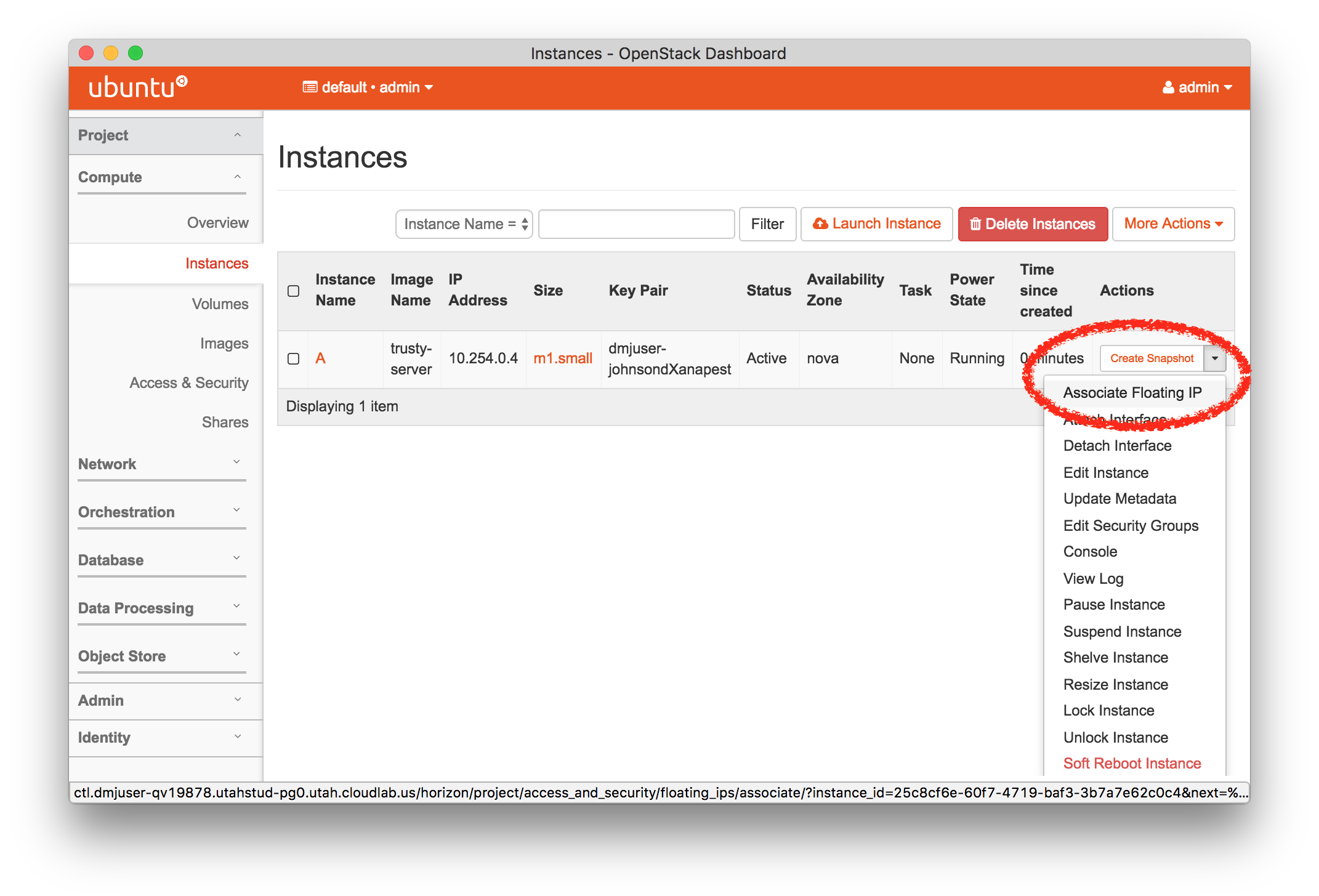

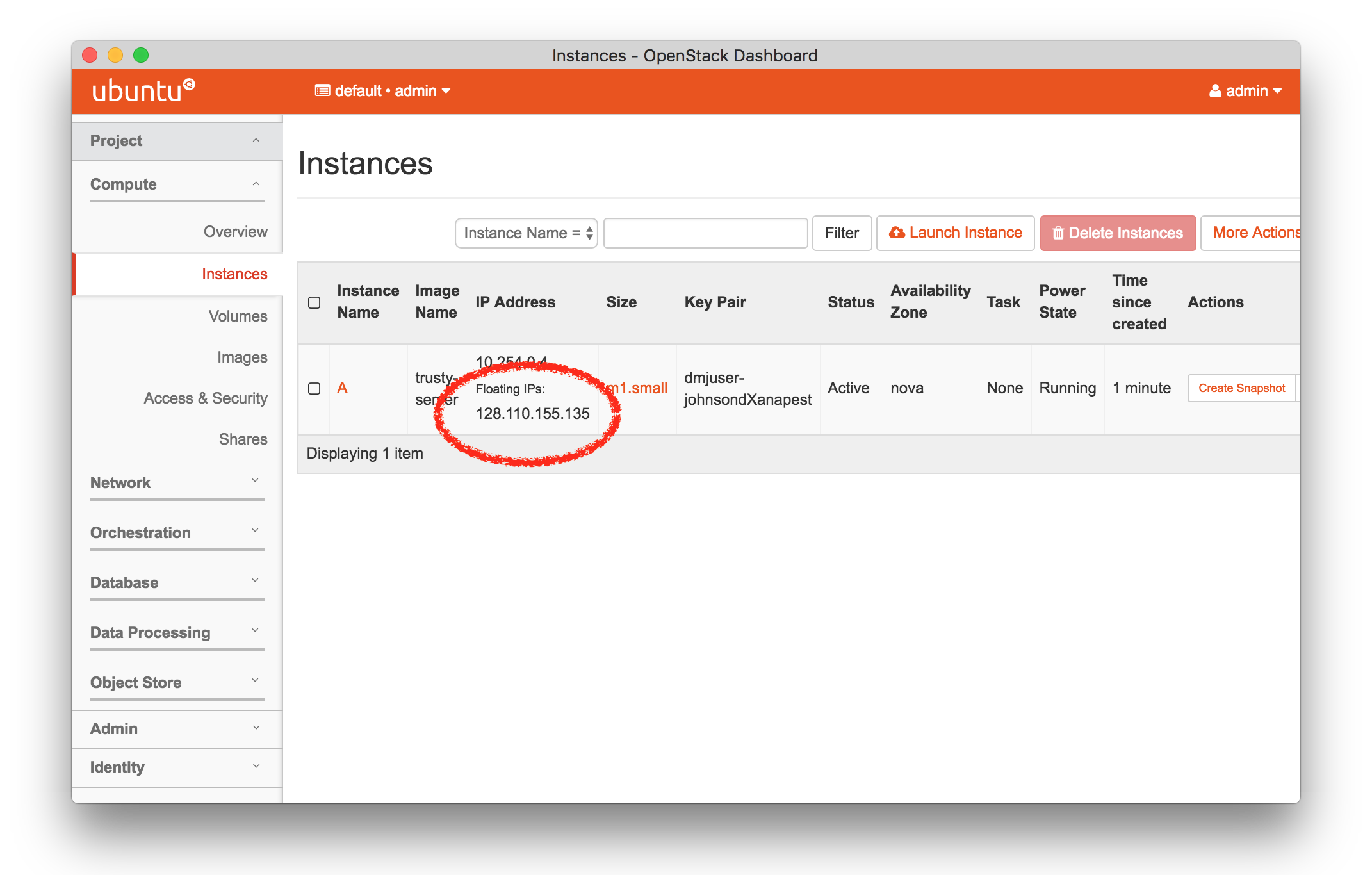

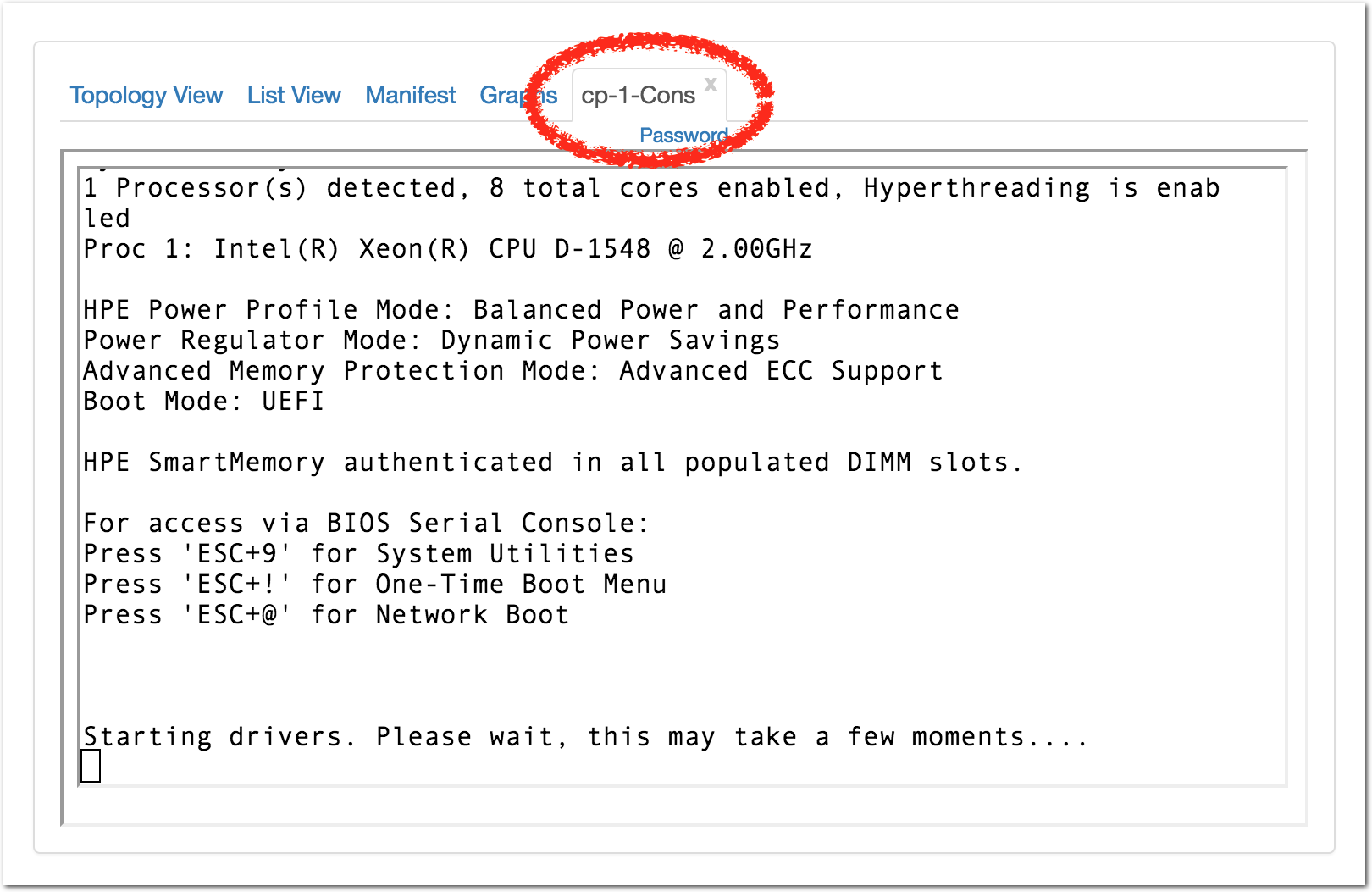

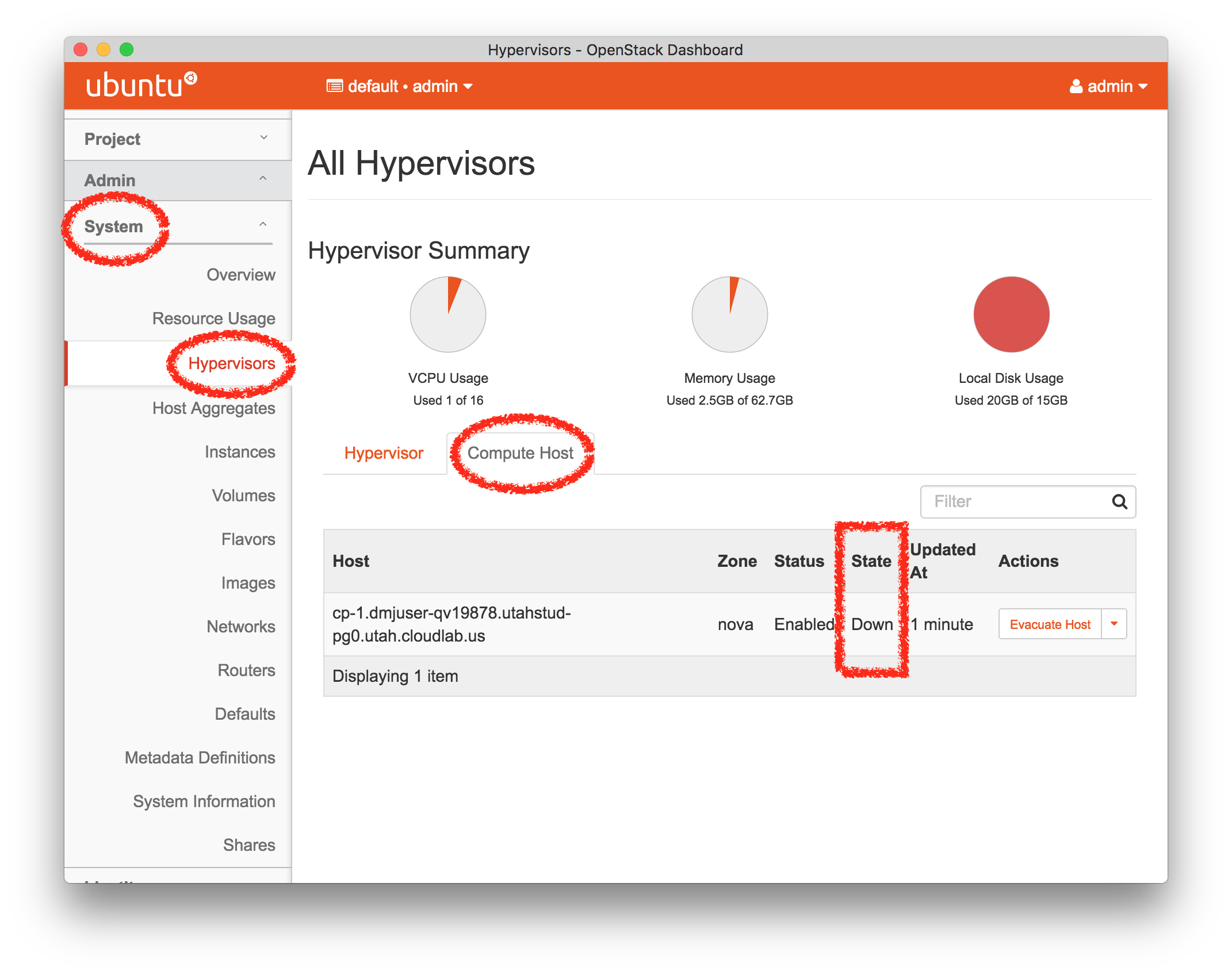

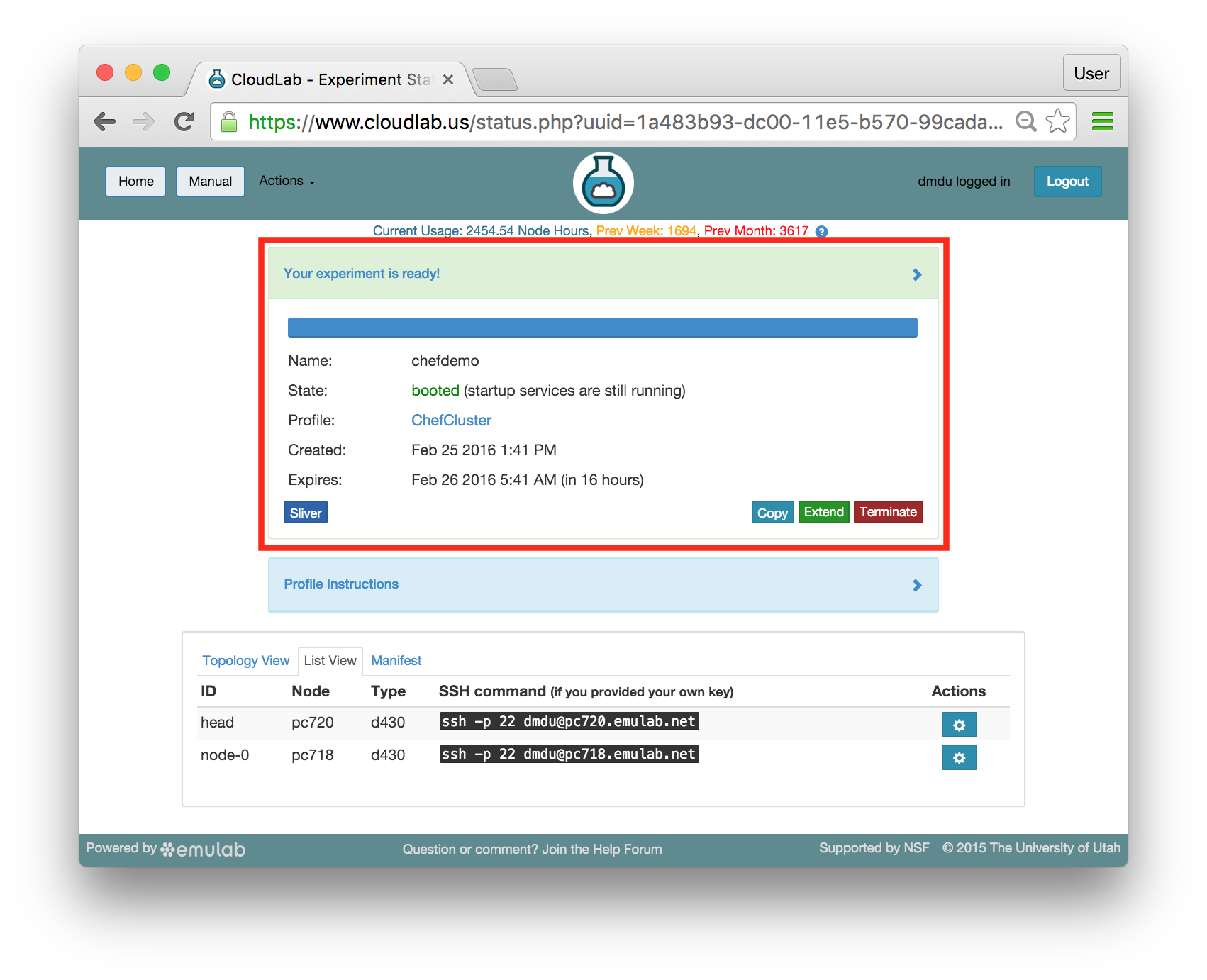

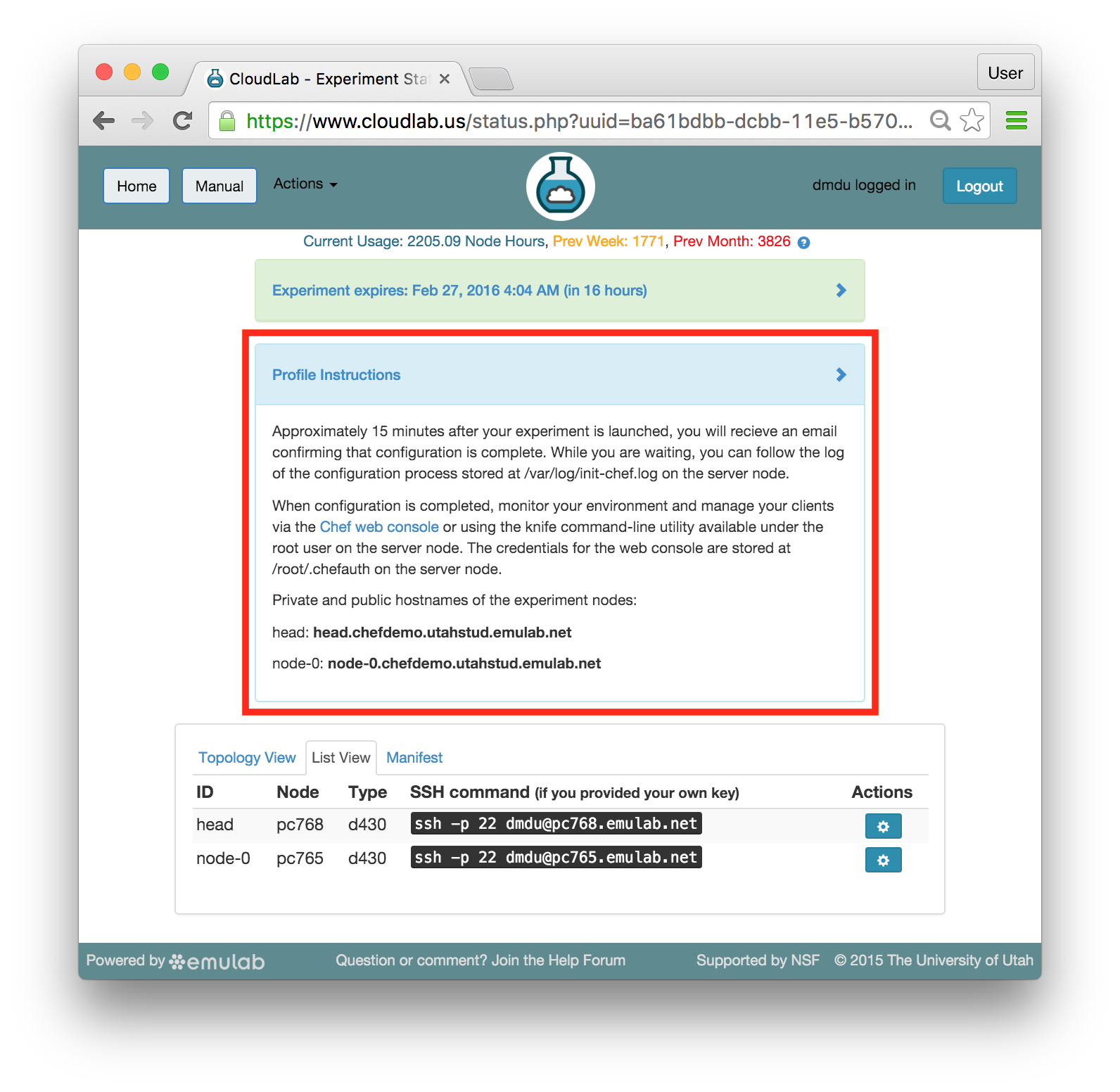

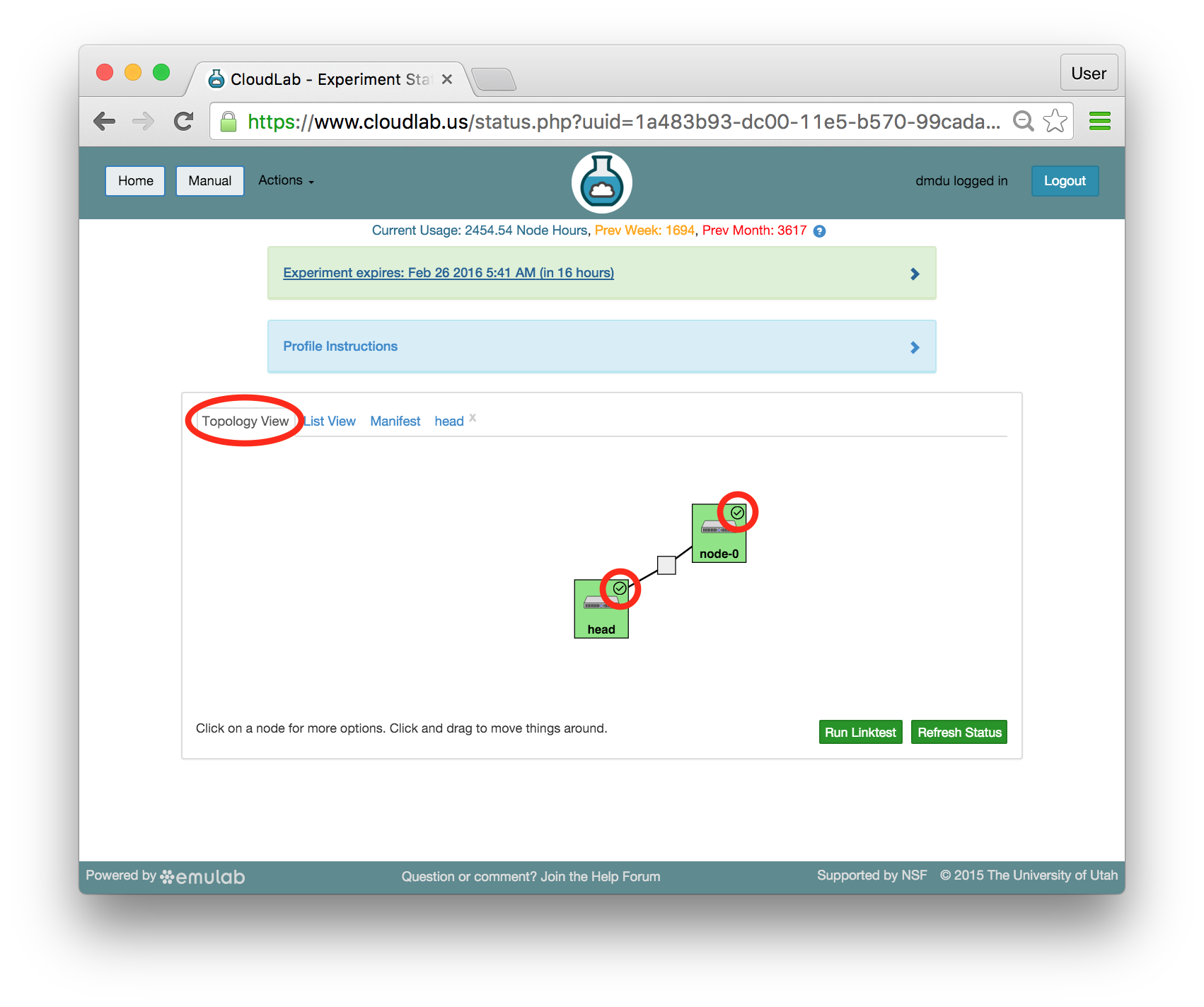

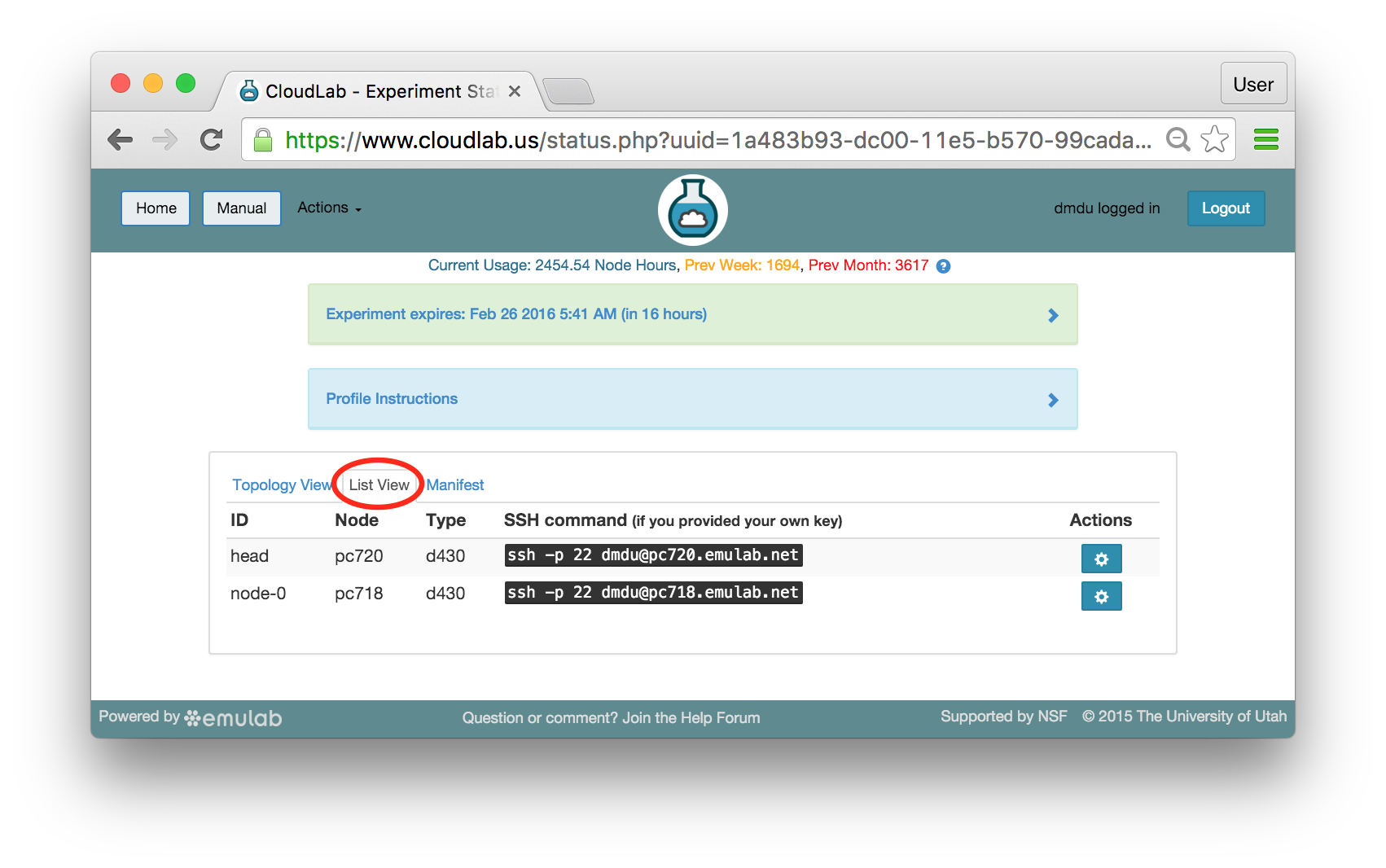

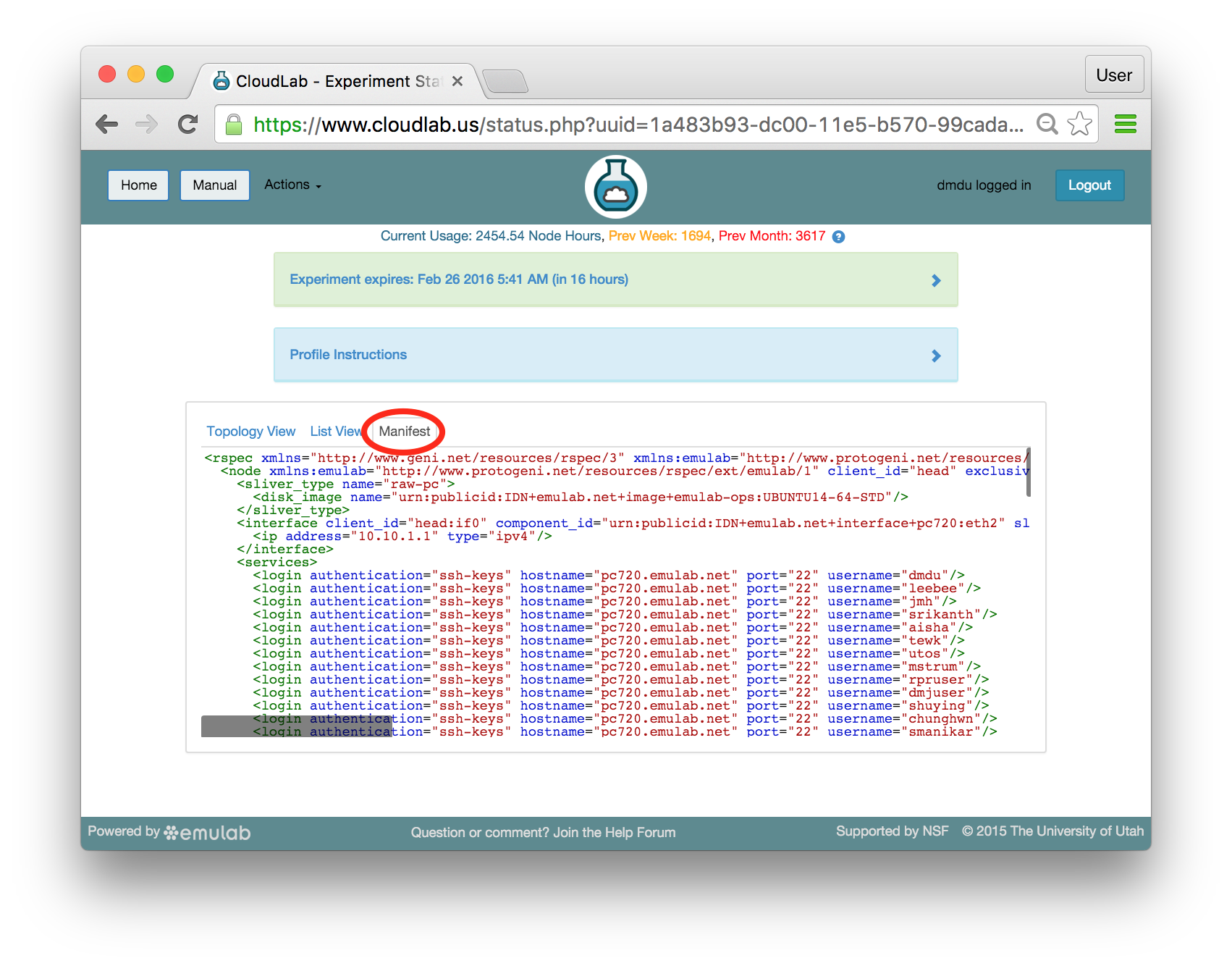

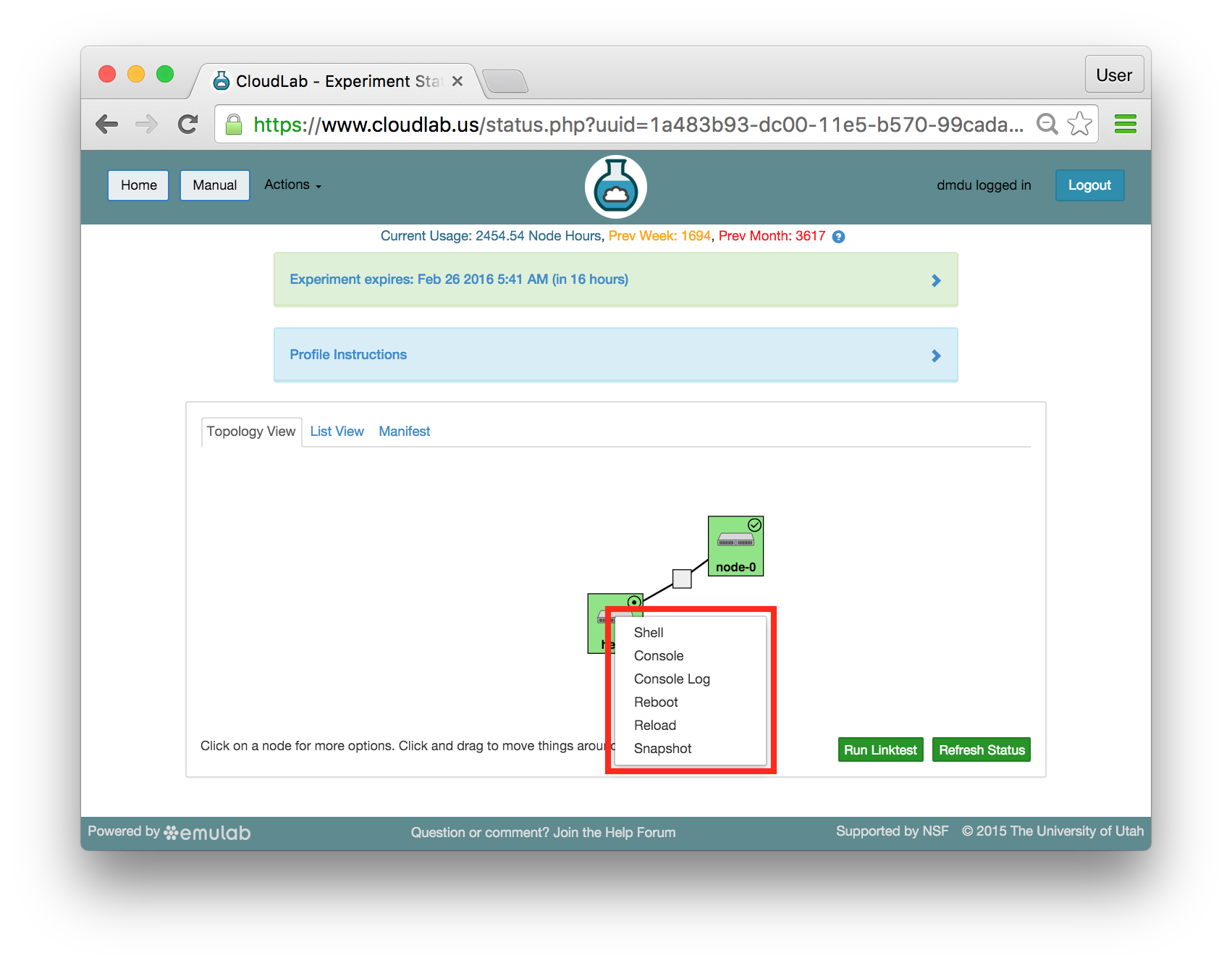

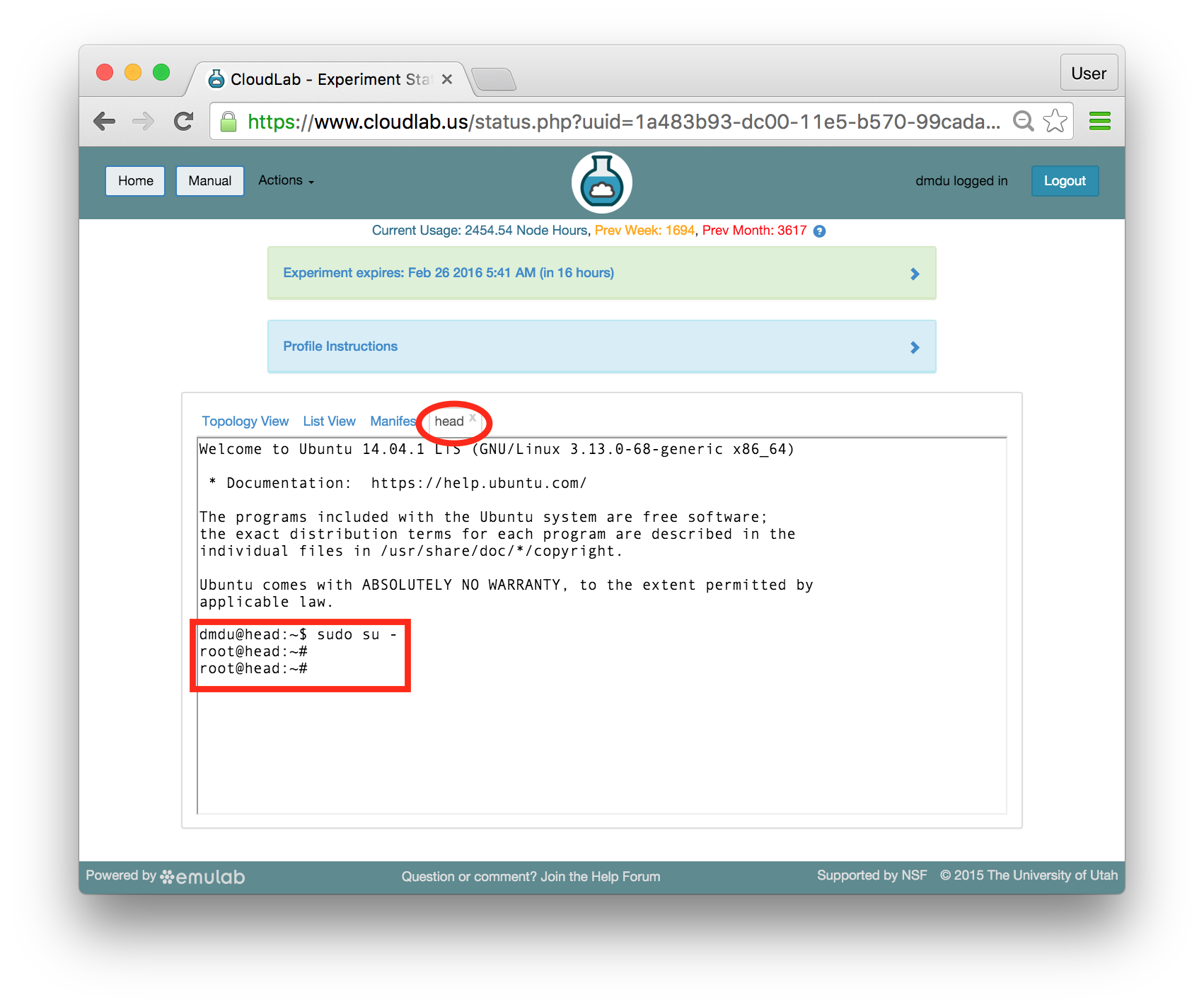

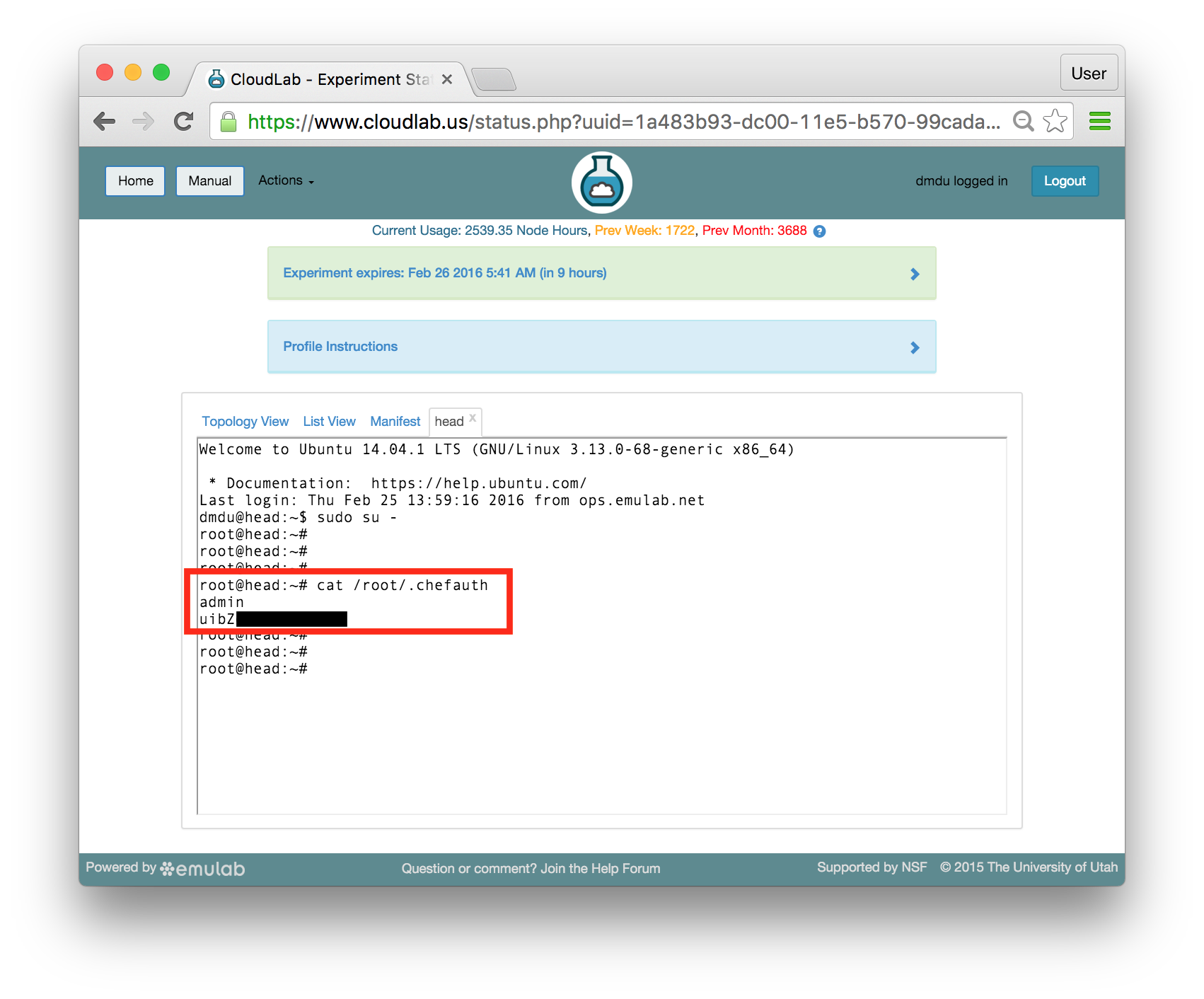

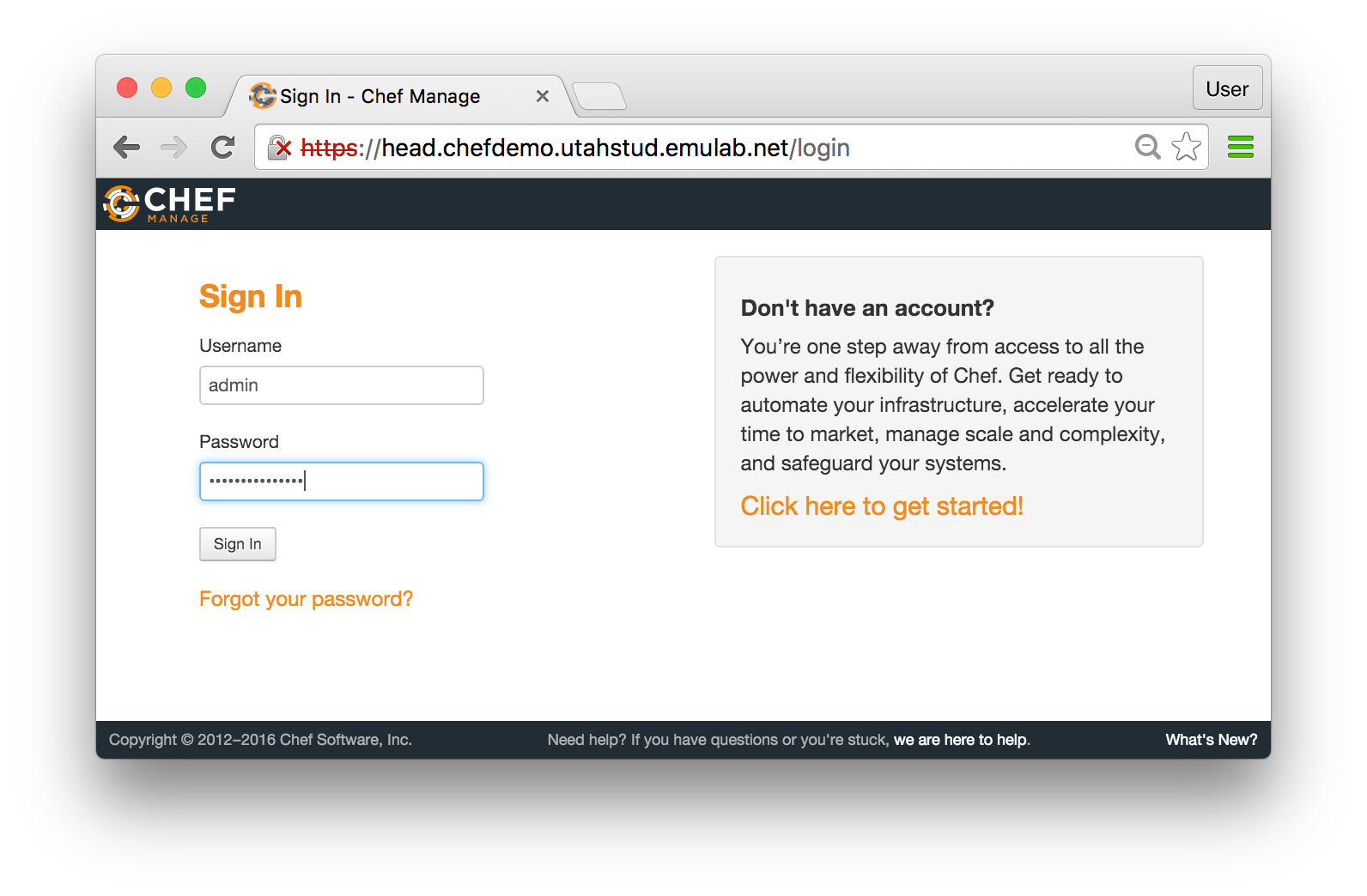

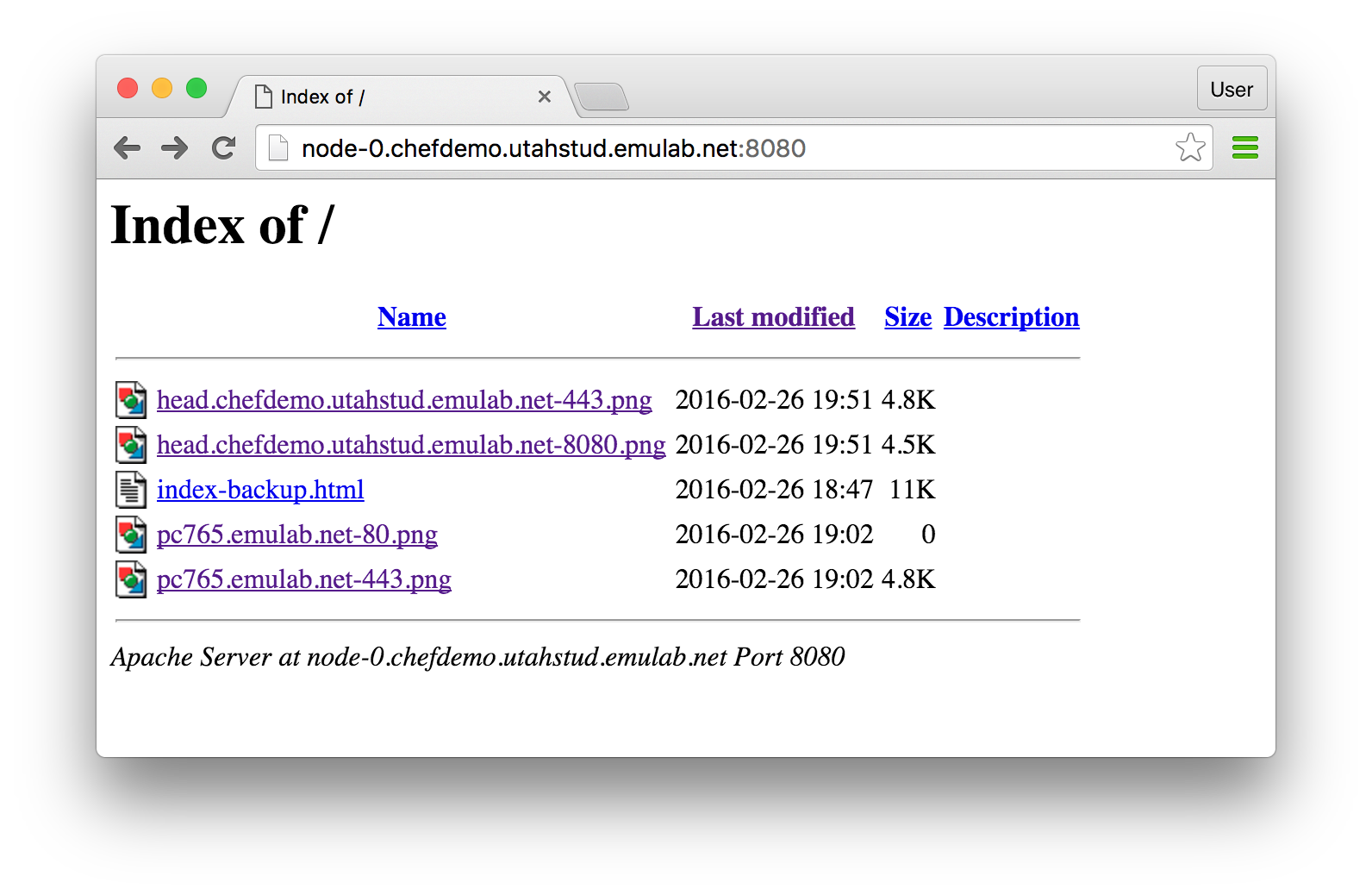

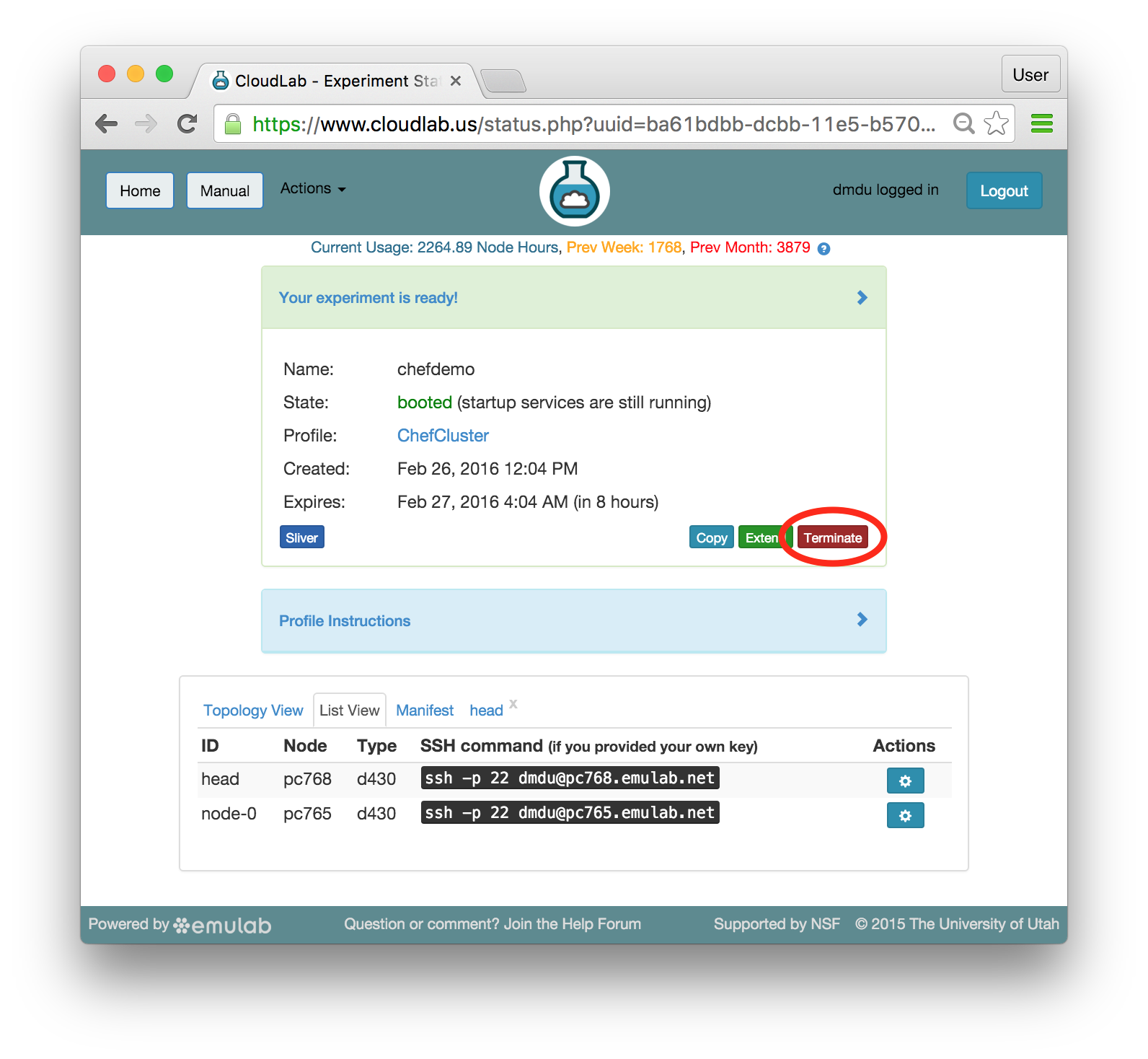

The “Topology View” shows the network topology of your experiment (which may be as simple as a single node). Clicking on a node in this view brings up a terminal in your browser that gives you a shell on the node. The “List View” lists all nodes in the topology, and in addition to the in-browser shell, gives you the command to ssh login to the node (if you provided a public key). The “Manifest” tab shows you the technical details of the resources allocated to your experiment. Any open terminals you have to the nodes show up as tabs on this page.Clicking on the “Profile Instructions” link (if present) will show instructions provided by the profile’s creator regarding its use.Your experiment is yours alone, and you have full “root” access (via the sudo command). No one else has access to the nodes in your experiment, and you may do anything at all inside of it, up to and including making radical changes to the operating system itself. We’ll clean it all up when you’re done!If you used our default OpenStack profile, the instructions will contain a link to the OpenStack web interface. The instructions will also give you a username and password to use.

The “Topology View” shows the network topology of your experiment (which may be as simple as a single node). Clicking on a node in this view brings up a terminal in your browser that gives you a shell on the node. The “List View” lists all nodes in the topology, and in addition to the in-browser shell, gives you the command to ssh login to the node (if you provided a public key). The “Manifest” tab shows you the technical details of the resources allocated to your experiment. Any open terminals you have to the nodes show up as tabs on this page.Clicking on the “Profile Instructions” link (if present) will show instructions provided by the profile’s creator regarding its use.Your experiment is yours alone, and you have full “root” access (via the sudo command). No one else has access to the nodes in your experiment, and you may do anything at all inside of it, up to and including making radical changes to the operating system itself. We’ll clean it all up when you’re done!If you used our default OpenStack profile, the instructions will contain a link to the OpenStack web interface. The instructions will also give you a username and password to use. Since you gave CloudLab an ssh public key as part of account creation, you can log in using the ssh client on your laptop or desktop. The contoller node is a good place to start, since you can poke around with the OpenStack admin commands. Go to the "list view" on the experiment page to get a full command line for the ssh command.

Since you gave CloudLab an ssh public key as part of account creation, you can log in using the ssh client on your laptop or desktop. The contoller node is a good place to start, since you can poke around with the OpenStack admin commands. Go to the "list view" on the experiment page to get a full command line for the ssh command. Your experiment will terminate automatically after a few hours. When the experiment terminates, you will lose anything on disk on the nodes, so be sure to copy off anything important early and often. You can use the “Extend” button to submit a request to hold it longer, or the “Terminate” button to end it early.

Your experiment will terminate automatically after a few hours. When the experiment terminates, you will lose anything on disk on the nodes, so be sure to copy off anything important early and often. You can use the “Extend” button to submit a request to hold it longer, or the “Terminate” button to end it early.

1.1 Next Steps

Try a profile that runs bare metal and set up a cloud stack yourself

Making your own profiles is easy: see the chapter on profile creation for instructions.

If you need help, or have questions or comments about CloudLab’s features, contact us!

2 CloudLab Users

Registering for an account is quick and easy. Registering doesn’t cost anything, it’s simply for accountability. We just ask that if you’re going to use CloudLab for anything other than light use, you tell us a bit more about who you are and what you want to use CloudLab for.

Users in CloudLab are grouped into projects: a project is a (loosely-defined) group of people working together on some common goal, whether that be a research project, a class, etc. CloudLab places a lot of trust on project leaders, including the ability to authorize others to use the CloudLab. We therefore require that project leaders be faculty, senior research staff, or others who are relatively senior positions.

2.1 Register for an Account

To get an account on CloudLab, you either join an existing project or create a new one. In general, if you are a student, you should join a project led by a faculty member with whom you’re working.

If you already have an account on

Emulab.net, you don’t need to sign

up for a new account on CloudLab—

2.1.1 Join an existing project

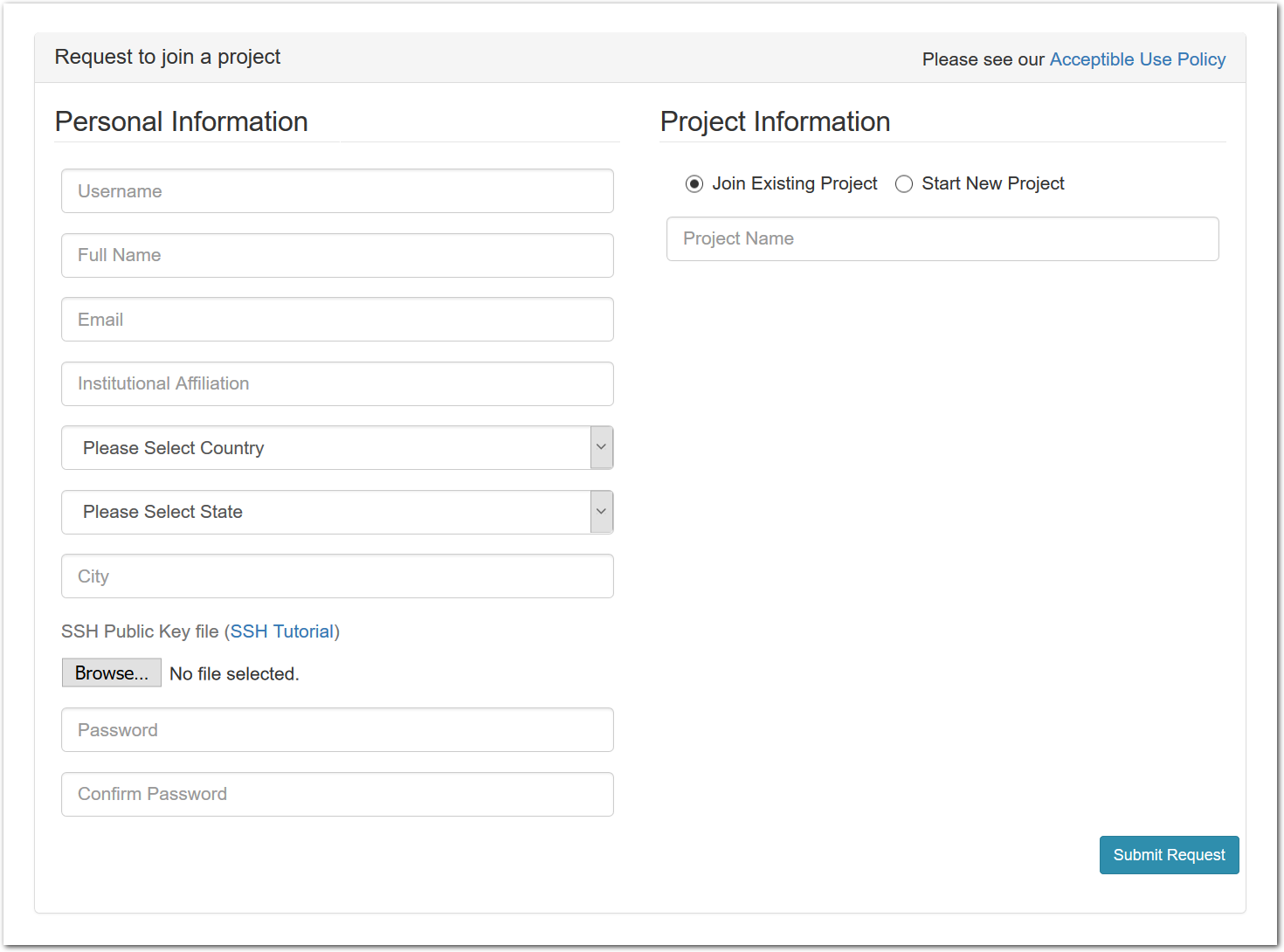

To join an existing project, simply use the “Sign Up” button found on every CloudLab page. The form will ask you a few basic questions about yourself and the institution you’re affiliated with.

An SSH public key is required; if you’re unfamiliar with creating and using ssh keypairs, we recommend taking a look at the first few steps in GitHub’s guide to generating SSH keys. (Obviously, the steps about how to upload the keypair into GitHub don’t apply to CloudLab.)

CloudLab will send you email to confirm your address—

watch for it (it might end up in your spam folder), as your request won’t be processed until you’ve confirmed your address.

2.1.2 Create a new project

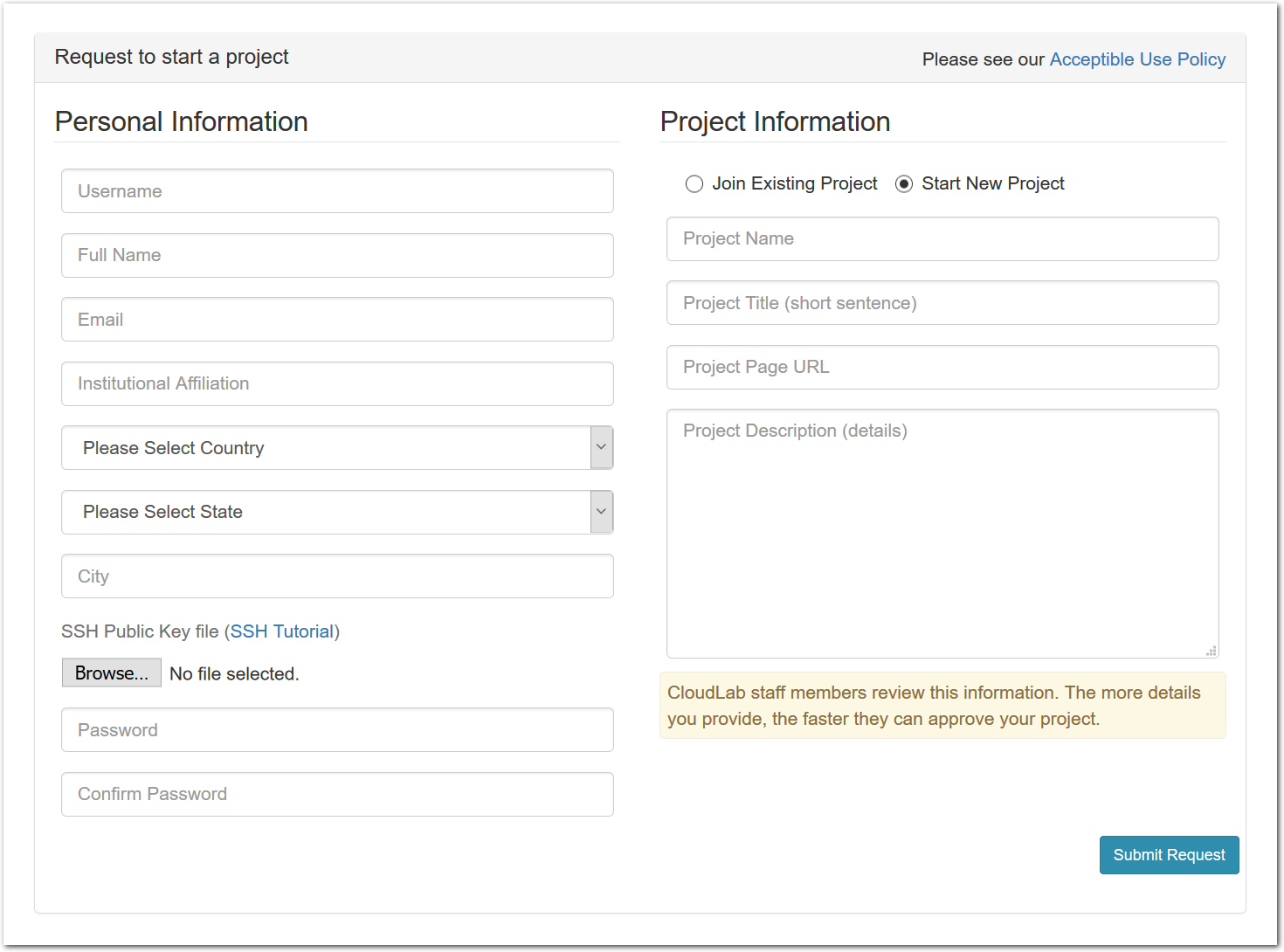

You should only start a new project if you are a faculty member, senior research staff, or in some other senior position. Students should ask their advisor or course instructor to create a new project.

To start a new project, use the “Sign Up” button found on every CloudLab page. In addition to basic information about yourself, the form will ask you a few questions about how you intend to use CloudLab. The application will be reviewed by our staff, so please provide enough information for us to understand the research or educational value of your project. The review process may take a few days, and you will receive mail informing you of the outcome.

Every person working in your project needs to have their own account. You get to approve these additional users yourself (you will receive email when anyone applies to join.) It is common, for example, for a faculty member to create a project which is primarily used by his or her students, who are the ones who run experiments. We still require that the project leader be the faculty member, as we require that there is someone in a position of authority we can contact if there are questions about the activities of the project.

Note that projects in CloudLab are publicly-listed: a page that allows users to see a list of all projects and search through them does not exist yet, but it will in the future.

2.1.3 Setting up SSH access

The first step to setting up ssh access to CloudLab is to generate an ssh key pair.

**For Windows users:**

A good option for a Windows ssh client is to make use of putty:

To generate and manage ssh keys with putty:

https://www.ssh.com/ssh/putty/windows/puttygen

**For Linux and Mac OS users:**

To generate and manage ssh keys:

https://www.ssh.com/ssh/keygen/

Once you have generated an ssh key pair, you need to upload the public key into the CloudLab portal:

Log into the CloudLab portal. Once you are logged in: Click on your username (top right), select "Manage SSH keys" and follow the prompts to load the ssh public key.

The next time you instantiate an experiment, your ssh public key will be loaded onto all (ssh capable) nodes in your experiment, allowing direct access to these nodes using an ssh client.

2.1.4 Setting up X11

Experiment based graphical user interface (GUI) interaction on CloudLab often require X windows, so you might find it useful to have an X-server running on the laptop/desktop you use to access resources on CloudLab.

**For Windows users:**

Various options are available, for example:

https://sourceforge.net/projects/vcxsrv/

**For Mac OS users:**

3 CloudLab and Repeatable Research

One of CloudLab’s key goals is to enable repeatable research—

CloudLab is designed as a scientific instrument. It gives full visibility into every aspect of the facility, and it’s designed to minimize the impact that simultaneous slices have on each other. This means that researchers using CloudLab can fully understand why their systems behave the way they do, and can have confidence that the results that they gather are not artifacts of competition for shared hardware resources. CloudLab profiles can also be published, giving other researchers the exact same environment—hardware and software—on which to repeat experiments and compare results.

CloudLab gives exclusive access to compute resources to one experiment at a time. (That experiment may involve re-exporting those resources to other users, for example, by running cloud services.) Storage resources attached to them (eg. local disk) are also used by a single experiment at a time, and it is possible to run experiments that have exclusive access to switches.

3.1 CloudLab For Artifact Evaluation

CloudLab is an ideal environment for artifact evaluation: it provides a way for authors to define an environment in which they know their experiments will work, and a way for AEC members to get access to large collections of computing hardware without having to own that hardware themselves or pay for a commercial cloud environment.

In this section, we offer advice to authors of artifacts, AEC chairs, and the members of AECs.

3.1.1 For Authors

The best way to give evaluators a predictable environment is to provide a link to a profile that is suitable for running your artifact. This could be one of our standard profiles, or one that you make yourself. Either way, you can be sure of the environment they will get so that you do not have to worry about hardware differences, software versions, etc. This profile may be simple, giving them access to a single machine with a default OS, or may have multiple machines and/or use features such as disk images and startup scripts to manage dependencies and configuration so that the evaluators have minimal work to do.

On the page for each profile, there is a "share" button: this will provide you with the link you can give to evaluators. Because profiles are versioned, you can provide them with a link to either a specific version of the profile or to the profile in general (which will always point to the latest version). If you are using a profile that you made yourself, we recommend that you set the profile to be publicly available, though it is also possible to share private profiles (these use a link with a random identifier; anyone with the link can use the profile).

Evaluators will need to have a CloudLab account to use your profile. We do not recommend having them join your project (as this removes their anonymity) or giving them access to a running experiment (as this can be unnecessarily complex, and requires your experiment to remain instantiated for a potentially long period of time). Instead, we recommend that evaluators use accounts obtained as described below in the "AEC Chairs" and "AEC Members" sections. You may point them to this manual to explain the process if they are not familiar with it.

We recommend using a profile that specifies a specific hardware type so that you can ensure that evaluators are running on machines with the same type of performance, the same amount of memory, etc. We also recommend that you use a profile that specifies an explicit disk image(s) to use, even if that image is one of CloudLab’s default images. This way, even if CloudLab’s default changes, you can be assured that evaluators will continue to get the same one you used and will not run into any unexpected software version problems.

Because many artifacts are shared as git repositories, repo-based profiles can be a good way to package the profile with the rest of the artifact. In essence, any public git repo can be made into a profile by adding a profile.py script (which defines the profile using Describing a profile with python and geni-lib) to it. If you chose to do this, you will still provide the evaluators with a link to a profile to use: it will just be the case that the definition of this profile is contained within the same repository as the rest of your artifact. For an example of this, see the artifact repository for the OrderSage project.

3.1.2 For AEC Chairs

CloudLab can be a useful resource for AECs as a place with significant resources to run artifacts. It can be useful whether or not artifacts are submitted as CloudLab profiles (as described above): AEC members can get machines with a variety of hardware and popular OSes, and they have root access, so installing dependencies, etc. is straightforward. Many members of certain research communities will already have experience using CloudLab.

We recommend that AEC chairs start a project for the AEC; the CloudLab staff will review your project application, so make sure it is clear about which venue it is an AEC for. Once the project is approved, you will receive links that you can share with AEC members to join the project. Project leads approve members of their projects, so CloudLab staff will not be involved further in the process.

CloudLab can sometimes be quite busy, and resources (especially hardware with particularly valuable properties) can fill up. We recommend that you warn your AEC members to plan ahead and leave themselves plenty of time to make sure they can get the resources they need. The reservation system can be used to help get access to scarce resources.

3.1.3 For AEC Members

CloudLab can be a useful resource for AEC members whether or not artifacts are submitted as CloudLab profiles (as described above): AEC members can get machines with a variety of hardware and popular OSes, and they have root access, so installing dependencies, etc. is straightforward.

We recommend that AEC members join a project created specifically for the AEC. If your AEC chairs have not provided you with information about a CloudLab project for the AEC, we recommend that you point them to the section above for instructions to create one.

If you already have an account on CloudLab, it is possible to be a member of multiple projects with the same account; simply log in to your existing account before applying to join the new project.

Since AECs frequently work on tight deadlines, and CloudLab has limited resources, we recommend planning ahead to make sure that you have enough time to obtain the resources you need and do the evaluation. If you are having trouble with the resources you need being unavailable, have a look at CloudLab’s reservation system.

4 Creating Profiles

In CloudLab, a profile captures an entire cloud environment—

When you create a new profile, you are creating a new geni-lib python script, and, often, creating one or more disk images that are referenced by that script. When someone uses your profile, they will get their own experiment that boots up the resources (virtual or physical) described by the profile script.

To create profiles, you must be a registered user.

When you create a new profile, you can provide the geni-lib python script to CloudLab in one of two ways. First, you can upload it as a single file, allowing CloudLab to manage its storage, and upload a modified version or edit it in the CloudLab code editor when you want to create a new version. Second, you can use CloudLab’s repository-based profile feature, in which you provide a git repository URL containing the geni-lib python script as a profile.py file in the top-level directory of the repository. This allows you to control versioning according to your git workflow and instantiate from branches. We discuss both of these modes in more detail in the following subsections.

4.1 Creating a profile from an existing one

The easiest way to create a new profile is by copying an existing one and customizing it to your needs. Your goal should be to choose the profile that is most similar to the one you want to build. Usually, this will be one of our facility-provided profiles with a generic installation of Linux.

When you copy a profile, you are creating a new profile that is identical in every way to an existing profile. You may or may not have a running experiment using the source profile. And if you do have a running experiment, it does not impact the copy. After copying a profile, you can then modify it for your own use. And if you instantiate the copy, you can then take snapshots of disk images and use them in future version of your copy. You can copy any profile that you have access to.

4.1.1 Copying a Profile

- Choose a profileFind the profile you wish to copy using the “Start Experiment” selector. Then you can click “Show Profile” button, and click the “Copy” button on the resulting page to copy the profile directly; or you can instantiate the profile if you wish to create an experiment first. Both profiles themselves and active experiments can be copied.

- Copy the profile or experimentWhile logged in, both your experiment page and the show profile page will have a copy button. Clicking this button will create a profile based on that profile or experiment.This button only copies the geni-lib python script. No state in the active experiment is preserved.

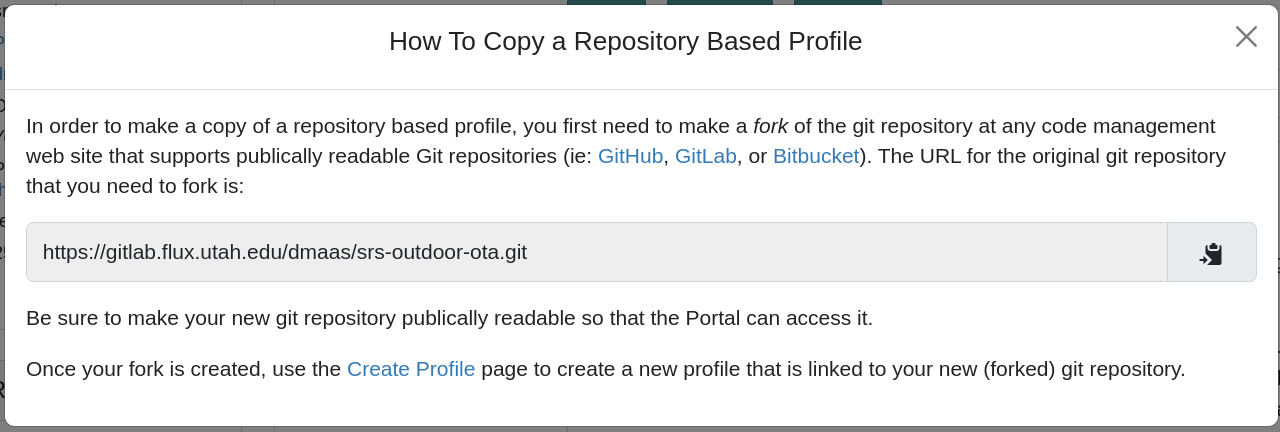

- Copying repository-based profilesNote: when you copy a repository-based profile, you will be instructed to first fork the git repository, and use that to create a new profile, as shown in the following screenshot. This is necessary because CloudLab does not act as a Git repository hosting provider, due to the potentially large size of code repositories with embedded profiles, and the fact that most repository owners prefer to store their code on Github or a private on-premises Git hosting solution.

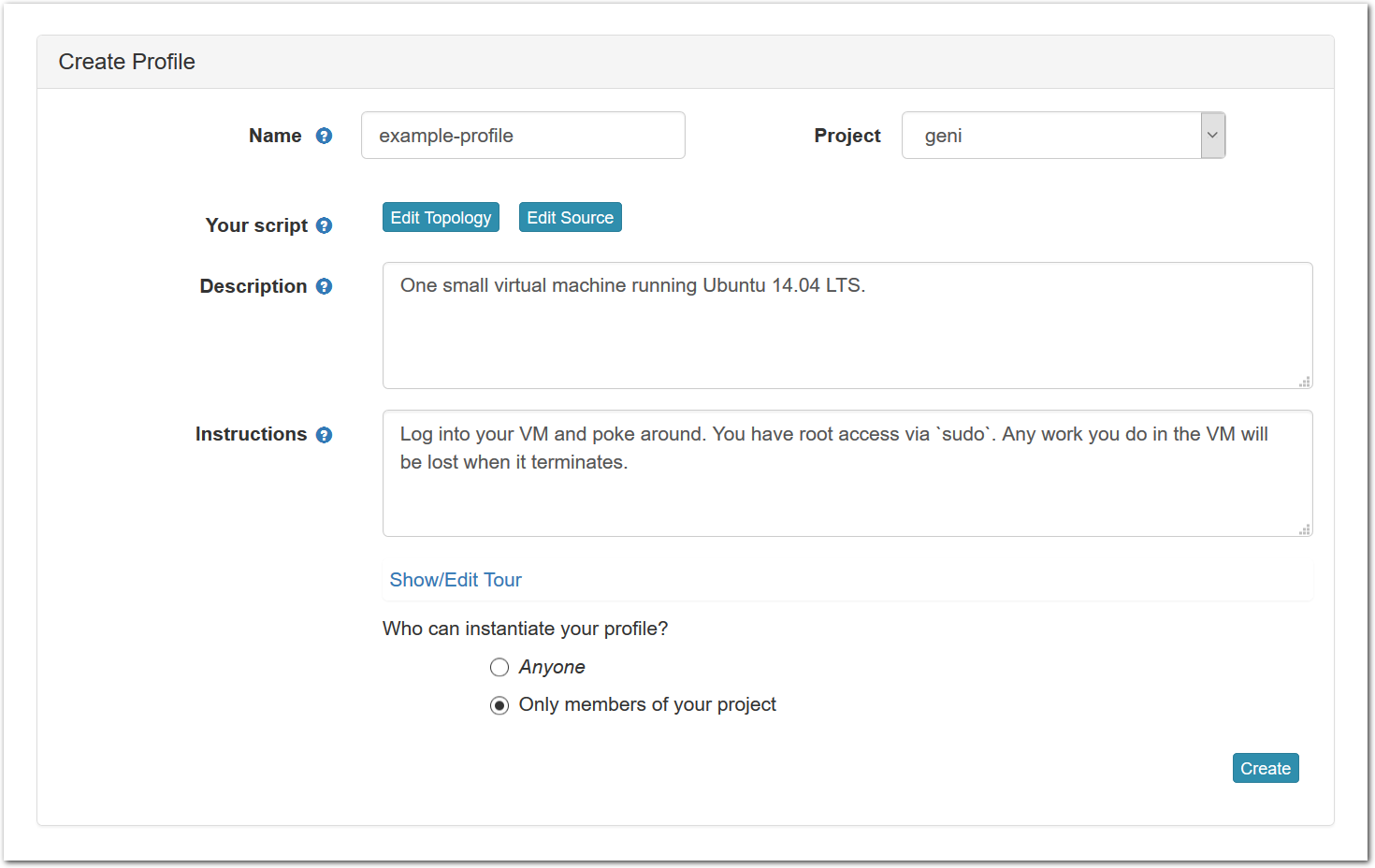

- Create ProfileYou will be taken to a complete profile form and should fill it out as described below.

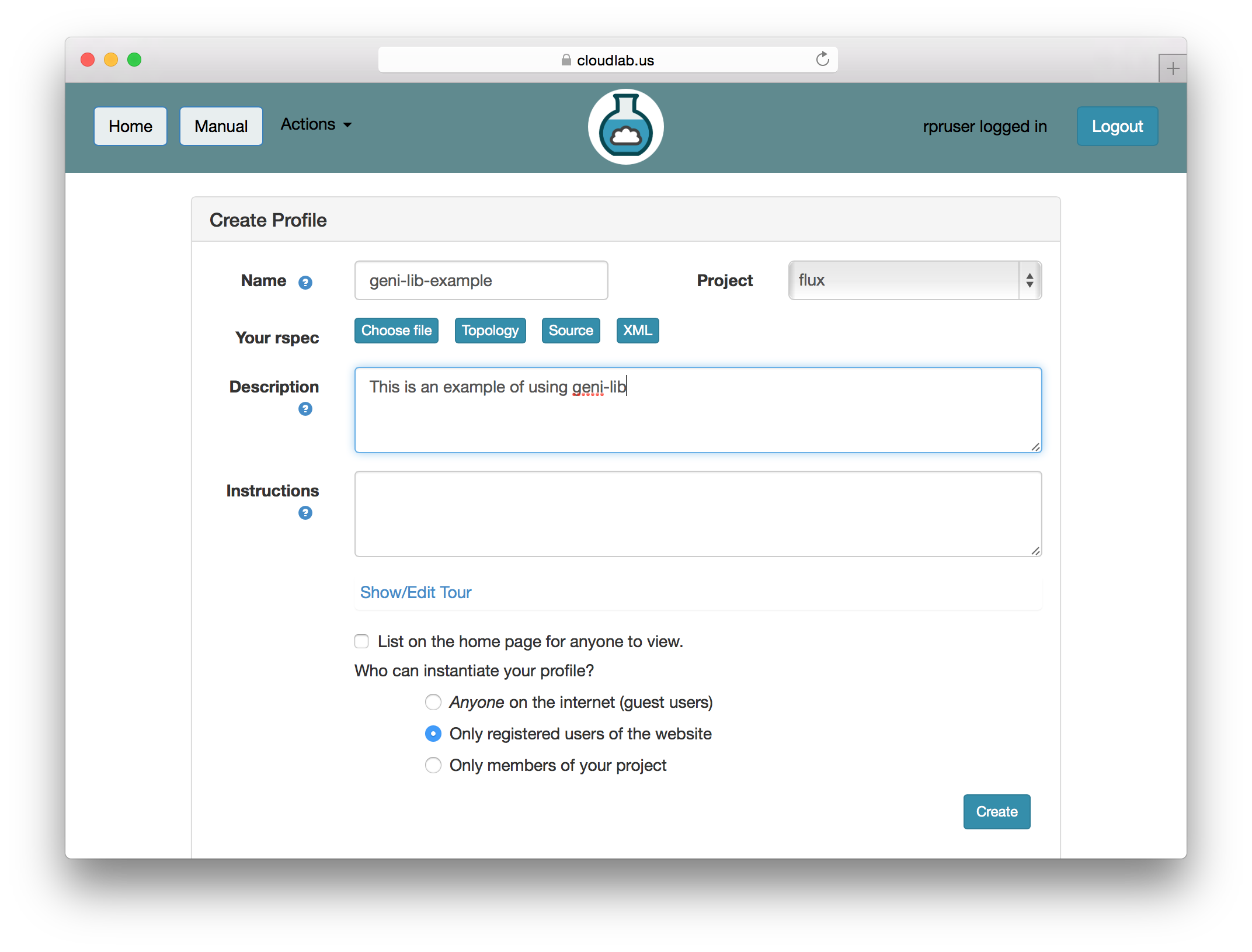

4.1.2 Creating the Profile

After copying a profile (see above) or selecting the menu option to create a new profile from scratch, you will need to fill out the profile creation form in order to complete the creation process.

- Fill out information for the new profileAfter clicking on the “Copy” button, you will see a form that allows you to view and edit the basic information associated with your profile.

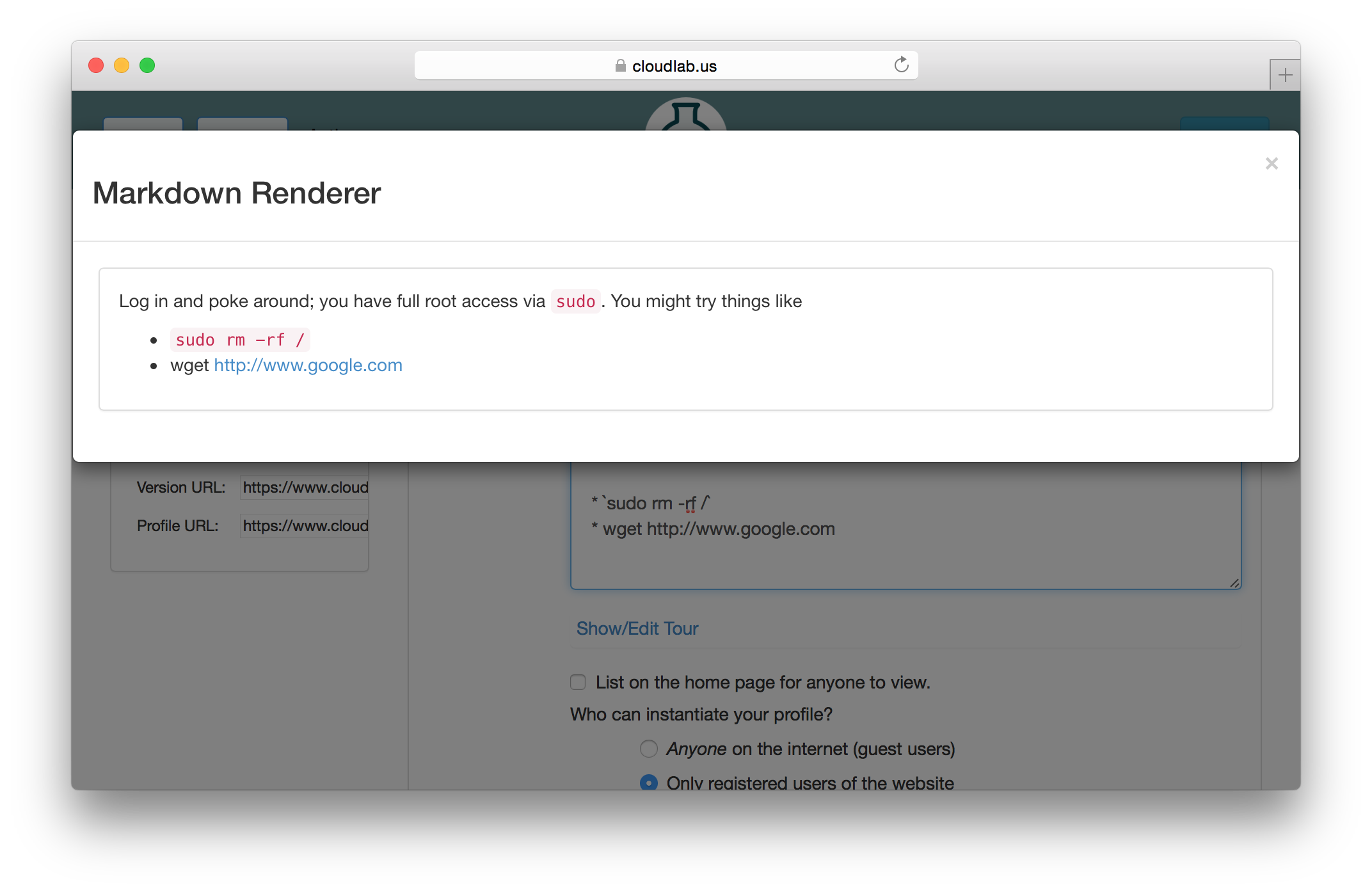

Each profile must be associated with a project. If you’re a member of more than one project, you’ll need to select which one you want the profile to belong to.Make sure to edit the profile’s Description and Instructions.The “Description” is the text that users will see when your profile is listed in CloudLab, when the user is selecting which profile to use. It is also displayed when following a direct link to your profile. It should give the reader a brief description of what they will get if they create an experiment with this profile. If the profile is associated with a paper or other publication, this is a good place to mention that. Markdown markup, including hyperlinks, are allowed in the profile description.The “Instructions” text is displayed on the experiment page after the user has created an experiment using the profile. This is a good place to tell them where the code and data can be found, what scripts they might want to run, etc. Again, Markdown is allowed.You may use template variables in your profile “Instructions” text to customize the instructions to an experiment. Currently, we support two kinds of template variables: host and password. Host variables will allow the Instructions template renderer to substitute a particular node’s FQDN—

Each profile must be associated with a project. If you’re a member of more than one project, you’ll need to select which one you want the profile to belong to.Make sure to edit the profile’s Description and Instructions.The “Description” is the text that users will see when your profile is listed in CloudLab, when the user is selecting which profile to use. It is also displayed when following a direct link to your profile. It should give the reader a brief description of what they will get if they create an experiment with this profile. If the profile is associated with a paper or other publication, this is a good place to mention that. Markdown markup, including hyperlinks, are allowed in the profile description.The “Instructions” text is displayed on the experiment page after the user has created an experiment using the profile. This is a good place to tell them where the code and data can be found, what scripts they might want to run, etc. Again, Markdown is allowed.You may use template variables in your profile “Instructions” text to customize the instructions to an experiment. Currently, we support two kinds of template variables: host and password. Host variables will allow the Instructions template renderer to substitute a particular node’s FQDN—recall that CloudLab FQDNs may be different for each experiment, since they contain both the experiment and project names as subdomains. For instance, if your profile includes a node named node-0, you could refer to its FQDN in the Instructions via {host-node-0}. You might use this to compose a URL to a service running on one of your nodes, e.g. for demos. Password variables allow you to expand specific random per-experiment passwords in the rendered instructions. If your profile includes a random password named perExptPassword, you can have it rendered in the generated Instructions via {password-perExptPassword}. The “Steps” section allows you to create a “tour” of your profile, which is displayed after a user creates an experiment with it. This feature is mostly useful if your profile contains more than one node, and you wish to explain to the user what the purpose of each node is.You have the option of making your profile usable to anyone, only registered CloudLab users, or members of your project. Regardless of the setting you chose here, CloudLab will also give you a direct link that you can use to share your profile with others of your choosing. - Test your profileIf you created your profile to capture an existing, manually configured experiment in a more reproducible manner, before terminating your experiment (or letting it expire), we strongly recommend testing out the new profile. If you elected to make it publicly visible, it will be listed in the profile selection dialog on the front page of https://www.cloudlab.us/. If not, you can instantiate it from the listing in your “My Profiles” page. If the profile will be used by guest users, we recommend testing it as one yourself: log out, and instantiate it using a different username (you will also have to use an alternate email address.)

- Share your profileNow that your profile is working, you can share it with others by sending them direct links, putting links on your webpage or in papers, etc. See “Sharing Profiles” for more details.

4.1.3 Updating a profile

You can update the metadata associated with a profile at any time by going to the “My Profiles” page and clicking on the name of the profile to go to the profile page. On this page, you can edit any of the text fields (Description, Instructions, etc.), change the permissions, etc.

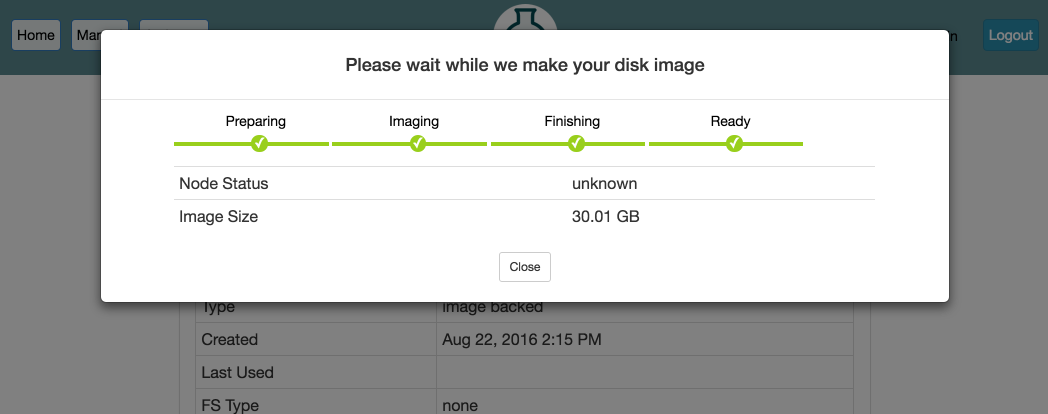

If you need to update the contents of the disk image in the profile, simply create a new experiment from the profile. (You will only see this button on experiments created from profiles that you own.) Once your experiment is ready, you will see a “Create Disk Image” button on the experiment page. Log into your node, get the disk changed the way you want, and click the button. If your experiment includes multiple nodes, you will be given a dropdown to select from which node to capture the disk image. If you want to update the existing disk image the node is using, make sure to use the same name used in the profile, excluding the project name. (Note that disk images are captured into the same project in which the experiment is running.) If you use the same name, future experiments created from the profile will use the new image. However, if you want to create a new disk image, use a different disk image name, and after the image is successfully captured, edit your profile to reference the new disk image name.

As with creating a new profile, we recommend testing the profile before letting your experiment expire. If something goes wrong, we do keep one previous image file for each profile; currently, the only way to get access to this backup is to contact us.

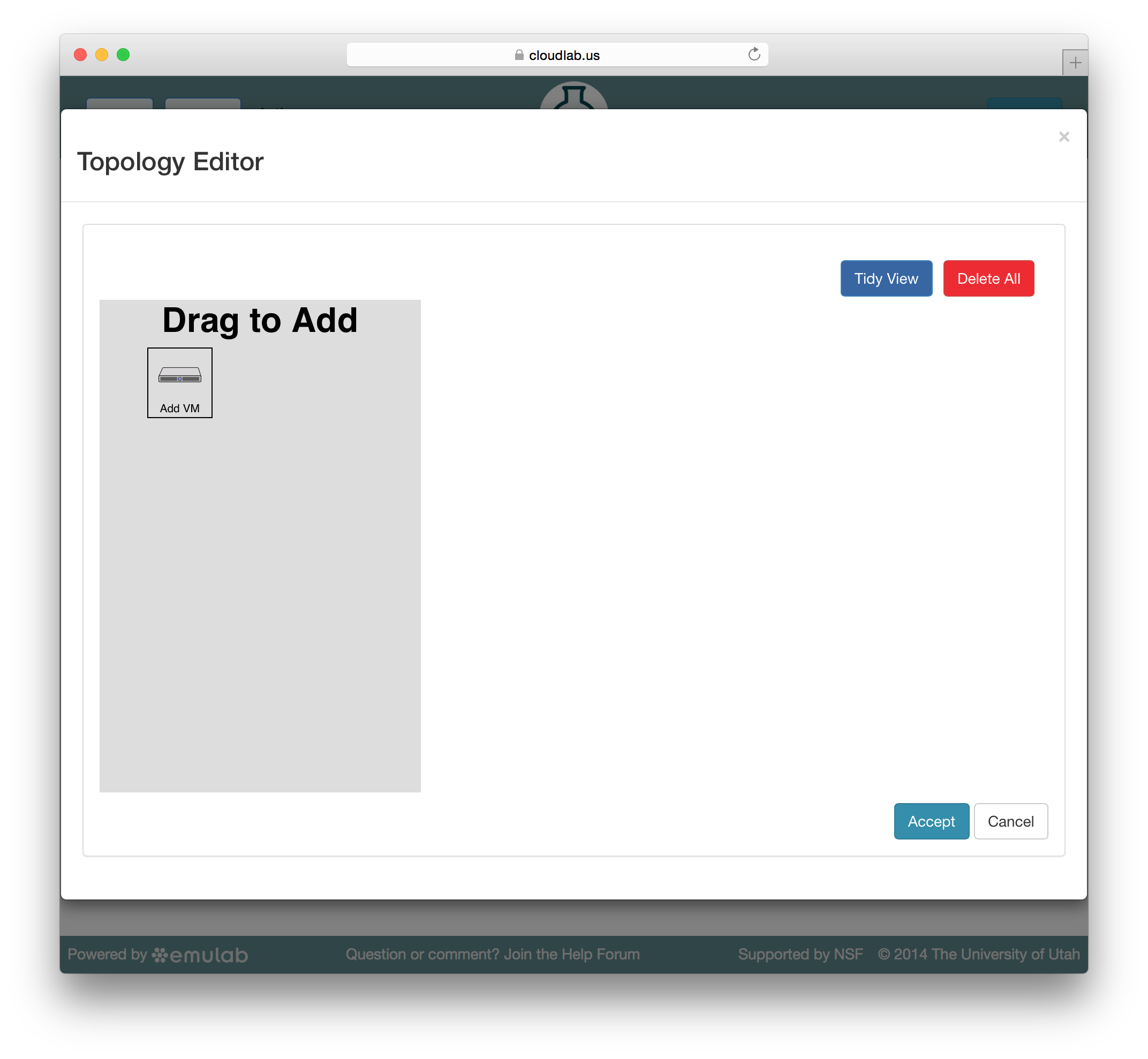

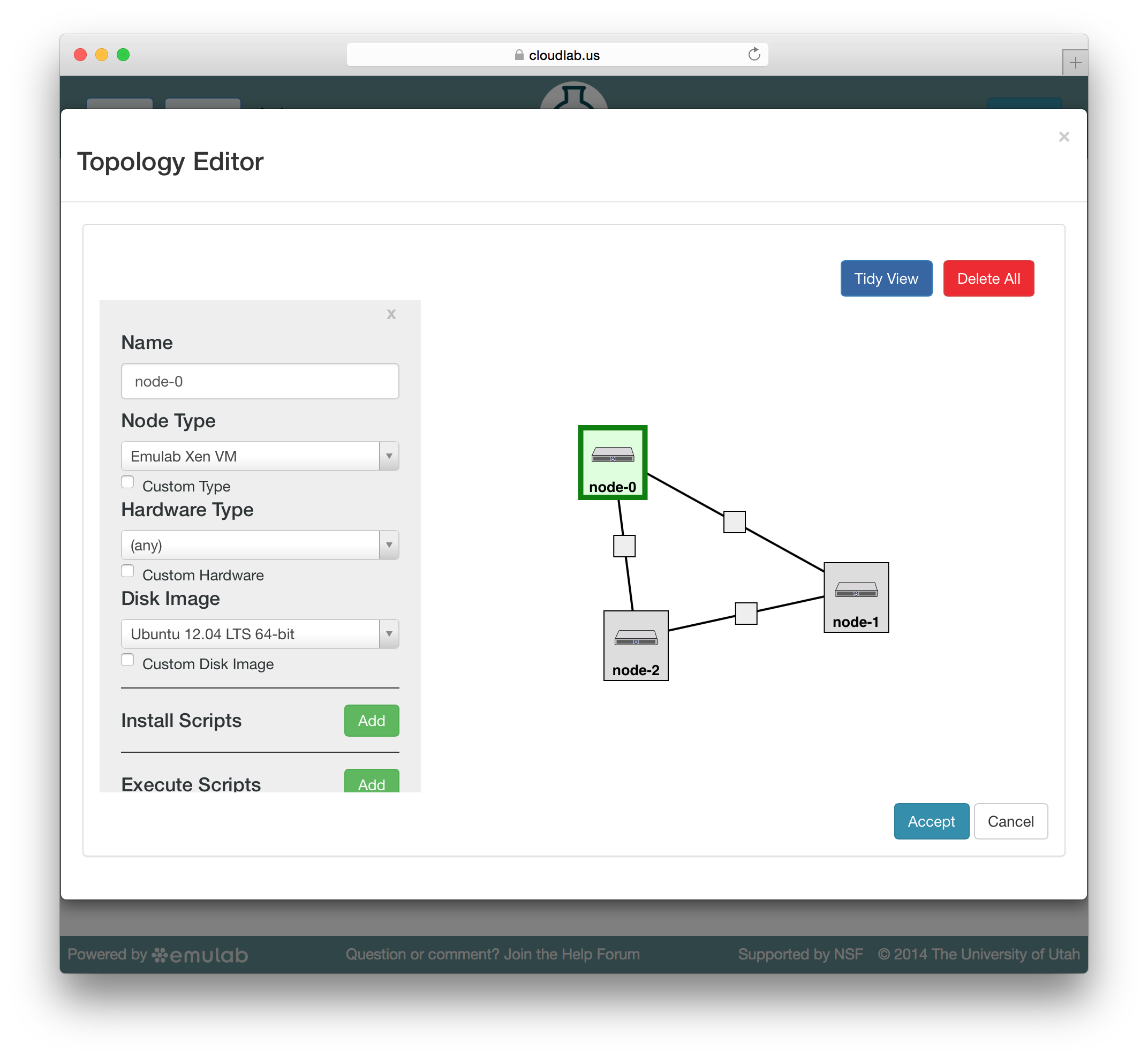

4.2 Creating a profile with a GUI

CloudLab embeds the Jacks GUI for simple creation of small profiles. Jacks can be accessed by clicking the “topology” button on the profile creation or editing page. Jacks is designed to be simple, and to ensure that the topologies drawn can be instantiated on the hardware available. Thus, after making certain choices (such as picking an operating system image) you may find that other choices (such as the node type) become limited.

Jacks has a “palette” on the left side, giving the set of node types (such as physical or virtual machines) that are available. Dragging a node from this palette onto the larger canvas area on the right adds it to the topology. To create a link between nodes, move the mouse near the first node, and a small black line will appear. Click and drag to the second node to complete the link. To create a LAN (multi-endpoint link), create a link between two nodes, then drag links from other nodes to the small grey box that appears in the middle of the original link.

To edit the properties of a node or link, select it by clicking on its icon on the canvas. The panel on the left side will be replace by a property editor that will allow you to to set the disk image for the node, set commands to be run when the node boots, etc. To unselect the current node or link, and return to the palette on the left, simply click a blank area of the canvas.

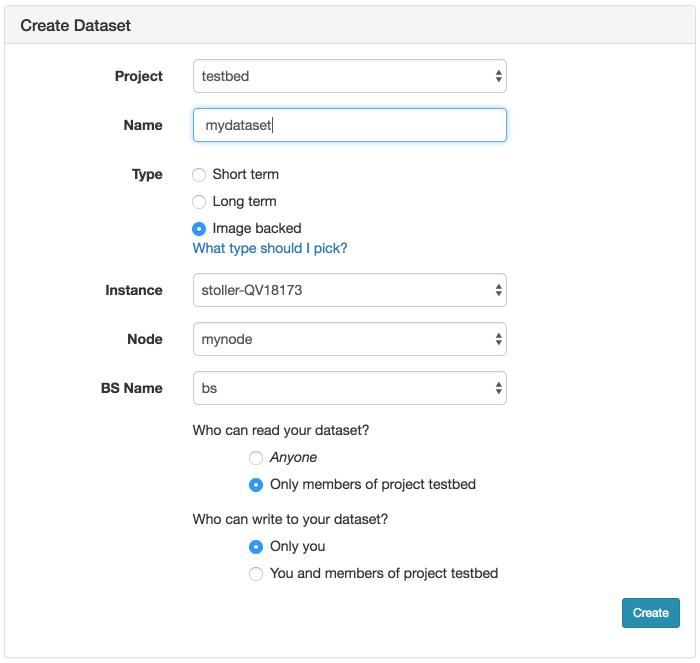

4.3 Capturing Disk Images for Profiles

If you created a new profile by copying one of our standard or example

profiles, your profile is likely using a CloudLab-supported, standard disk

image. If you copied another user’s profile, you may instead be using a

custom disk image that the other user captured when they created their

profile. Depending on the nature of your profile, you may be satisfied to

continue using the original profile’s disk images, or you may prefer to

customize the disk images and persist changes of your own. Some profile

authors rely on CloudLab’s standard disk images, and customize these images

when the experiment boots via

startup scripts contained

in their profiles—

If you want to customize your copy of the profile and persist disk image changes, or ensure that your profile no longer depends on the disk images used in the original profile, you will want to create one or more custom disk images as used in the profile, and update the geni-lib python script in your copy of the profile to use the new image names:

- Create an experimentCreate an experiment using your copy of the original profile.

- Set up the node the way you want itLog into the node and install your software, datasets, packages, etc. Note the caveat below that any changes you make must remain outside of your home directory, and should not be tied to your user account.

- Capture a new disk imageWhile you are logged in, the experiment page for your active experiments will have a “Create Disk Image” button. Clicking this button will create a new disk image from one of the nodes in your running experiment. When customizing the image used by a profile that you do not own, you will need to create new image names, and update the geni-lib python script in your copy of the profile to use the new image names.

Capturing a disk image from an experiment can take a while, so we recommend that you extend your experiment before capturing the disk image, and contact us if you are worried your experiment might expire before you’re done creating your image. We also strongly recommend testing your profile fully before terminating the experiment you’re capturing the disk image from.

When capturing an image, your home directory is not included in the disk image snapshot! You will need to install your code and data elsewhere in the image. We recommend /local/. Keep in mind that others who use your profile are going to have their own accounts, so make sure that nothing in your image makes assumptions about the username, home directory, etc. of the user running it.

When capturing an image, be aware that only disk contents (not running process, etc.) are preserved, and as part of the creation process, your node(s) will first be rebooted in order to take consistent snapshots of the disk.

When copying a profile, remember that the disk images of a currently running experiment are not saved. If you want to customize the disk images using copy, you must copy the profile first, then instantiate your copy, then take snapshots of the modified disk image in your experiment.

4.4 Repository-Based Profiles

You can turn any public git repository (including those hosted on GitHub) into a CloudLab profile. Simply place a geni-lib script named profile.py into the top-level directory of your repository. When you create a new profile, you can provide the URL for your repository. The URL needs to be a http:// or https:// URL, and the CloudLab portal needs to be able to clone the repository without authentication.

Note that CloudLab is not a git hosting service; while we do keep a cache of your repository, we don’t guarantee that the profile will continue to work if the original repository becomes unavailable. We also have limits on the size of the repositories that we will clone.

When you instantiate a repository-based profile, the repository will be cloned into the directory /local/repository on all nodes in the experiment. This means that you can keep source code, startup scripts, etc. in your repository and reference them from profile.py. CloudLab sets the pull URL for all of these clones to be your “upstream” repository, and attempts to set a suitable push URL for (it assumes that the hosting service uses ssh for pushes, and uses the git@<hostname>:user/repo convention). As a result, git pull and git push should be connected to your repository.

There is an example repository on GitHub at https://github.com/emulab/my-profile; if you don’t already have a git repository created, a good way to get started is to fork this one and create a new profile pointing at your fork.

Pushing to your repository is still governed by the authentication and permissions of your git hosting service, so others using your profile will generally be unable to push to your repository.

4.4.1 Updating Repository-Based Profiles

By default, the CloudLab profile does not automatically update whenever you push to your upstream repository; this means that people instantiating your profile see the repository as it existed at the time CloudLab last pulled from it.

You can manually cause CloudLab to pull from your repository using the “Update” button on the profile management page.

You can also set up CloudLab to automatically pull from your repository whever it is updating. To do so, you will need to set up a “web hook” on the service that hosts your git repository. CloudLab currently supports webhooks for GitHub.com, BitBucket.org, and sites hosted using GitLab (including both GitLab.com and self-hosted GitLab installations.) See the “push URL” in the Repository Info panel on the left side of the profile page for the webhook URL, and use the “information” icon next to it to get specific instructions for setting up a webhook on each service. Once you have set the webhook up, every time you push to your repository, your hosting service will let CloudLab know that it should automatically initiate a pull. (This will not be instantaneous, but should complete quickly in most cases.)

4.4.2 Branches and Tags in Repository-Based Profiles

By default, repository-based profiles will be instantiated from the default branch of the repository; this is typically the main or master branch. At the bottom of the profile page, you will also find a list of all branches and tags in the repository, and can instantiate the version contained in any of them. Branches can be used for development work that is not yet ready to become the master (default) version of the profile, and tags can be used to mark specific versions of the profiles that were used for specific papers or course assignments, for example.

4.5 Creating a profile from scratch

CloudLab profiles are described by GENI RSpecs. You can create a profile directly from an RSpec by using the “Create Profile” option from the “Storage” menu. Note that you cannot edit the text fields until you upload an RSpec, as these fields edit (in-browser) fields in the RSpec.

4.6 Sharing Profiles

If you chose to make your profile publicly visible, it will show up in the main

“Select Profile” list on https://www.cloudlab.us/. CloudLab also gives you direct links to

your profiles so that you can share them with others, post them on your

website, publish them in papers, etc. The link can be found on the profile’s

detail page, which is linked for your “My Profiles” page. If you chose to

make your profile accessible to anyone, the link will take the form

https://www.cloudlab.us//p/<project-id>/<profile-id>. If you didn’t make the profile

public, the URL will have the form https://www.cloudlab.us//p/<UUID>, where

UUID is a 128-bit number so that the URL is not guessable. You can still

share this URLs with anyone you want to have access to the profile—

4.7 Versioned Profiles

Profiles are versioned to capture the evolution of a profile over time. When updating profiles, the result is be a new version that does not (entirely) replace the profile being updated.

When sharing a profile, you are given two links to share. One link will take the user to the most recent version of the profile that exists at the time they click the link. This is the most appropriate option in most cases. There is also a link that takes one to a specific version of the profile. This link is most useful for publication in papers or other cases in which reproducability with the exact same environment is a concnern.

5 Basic Concepts

This chapter covers the basic concepts that you’ll need to understand in order to use CloudLab.

5.1 Profiles

A profile encapsulates everything needed to run an experiment. It consists of two main parts: a description of the resources (hardware, storage, network, etc.) needed to run the experiment, and the software artifacts that run on those resources.

The resource specification is in the RSpec format. The RSpec describes an entire topology: this includes the nodes (hosts) that the software will run on, the storage that they are attached to, and the network that connects them. The nodes may be virtual machines or physical servers. The RSpec can specify the properties of these nodes, such as how much RAM they should have, how many cores, etc., or can directly reference a specific class of hardware available in one of CloudLab’s clusters. The network topology can include point to point links, LANs, etc. and may be either built from Ethernet or Infiniband.

The primary way that software is associated with a profile is through

disk images. A disk image (often just called an

“image”) is a block-level snapshot of the contents of a real or virtual

disk—

Profiles come from two sources: some are provided by CloudLab itself; these tend to be standard installations of popular operating systems and software stacks. Profiles may also be provided by CloudLab’s users, as a way for communities to share research artifacts.

5.1.1 On-demand Profiles

Profiles in CloudLab may be on-demand profiles, which means that they are designed to be instantiated for a relatively short period of time (hours or days). Each person instantiating the profile gets their own experiment, so everyone using the profile is doing so independently on their own set of resources.

5.1.2 Persistent Profiles

CloudLab also supports persistent profiles, which are longer-lived (weeks

or months) and are set up to be shared by multiple users. A persistent profile

can be thought of as a “testbed within a testbed”—

An instance of a cloud software stack, providing VMs to a large community

A cluster set up with a specific software stack for a class

A persistent instance of a database or other resource used by a large research community

Machines set up for a contest, giving all participants access to the same hardware

An HPC cluster temporarily brought up for the running of a particular set of jobs

A persistent profile may offer its own user interface, and its users may not necessarily be aware that they are using CloudLab. For example, a cloud-style profile might directly offer its own API for provisioning virtual machines. Or, an HPC-style persistent profile might run a standard cluster scheduler, which users interact with rather than the CloudLab website.

5.2 Experiments

See the chapter on repeatability for more information on repeatable experimentation in CloudLab.

An experiment is an instantiation of a profile. An experiment uses resources, virtual or physical, on one or more of the clusters that CloudLab has access to. In most cases, the resources used by an experiment are devoted to the individual use of the user who instantiates the experiment. This means that no one else has an account, access to the filesystems, etc. In the case of experiments using solely physical machines, this also means strong performance isolation from all other CloudLab users. (The exceptions to this rule are persistent profiles, which may offer resources to many users.) Note that members of the project that the experiment belongs to typically also have full (root) access to the resources in that experiment.

Running experiments on CloudLab consume real resources, which are limited. We ask that you be careful about not holding on to experiments when you are not actively using them. If you are are holding on to experiments because getting your working environment set up takes time, consider creating a profile with one or more custom disk images that captures your environment.

The contents of local disks on nodes in an experiment are considered

ephemeral—

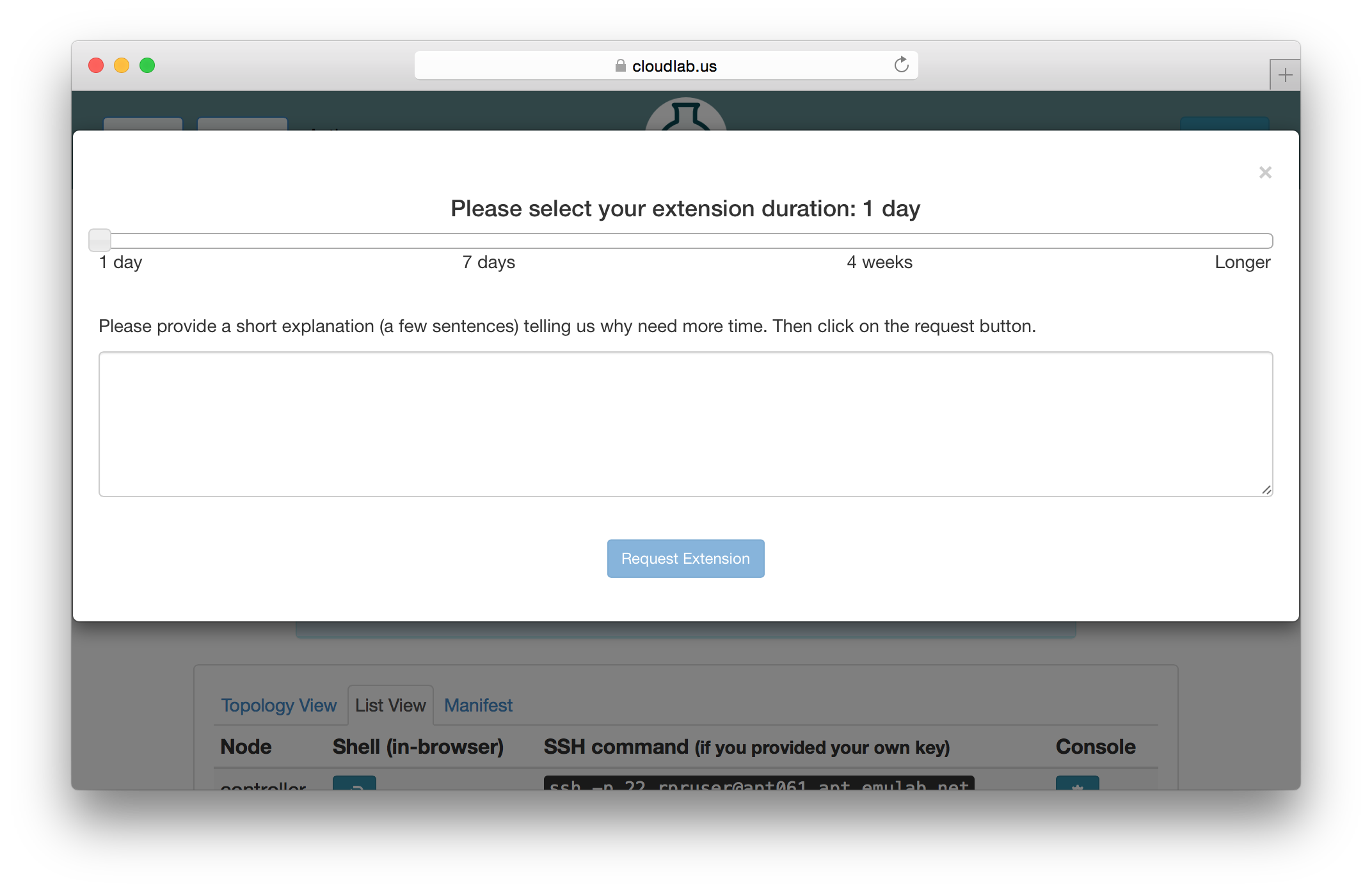

All experiments have an expiration time. By default, the expiration time is short (a few hours), but users can use the “Extend” button on the experiment page to request an extension. A request for an extension must be accompanied by a short description that explains the reason for requesting an extension, which will be reviewed by CloudLab staff. You will receive email a few hours before your experiment expires reminding you to copy your data off or request an extension.

5.2.1 Extending Experiments

If you need more time to run an experiment, you may use the “Extend” button on the experiment’s page. You will be presented with a dialog that allows you to select how much longer you need the experiment. Longer time periods require more extensive appoval processes. Short extensions are auto-approved, while longer ones require the intervention of CloudLab staff or, in the case of indefinite extensions, the steering commitee.

5.3 Projects

Users are grouped into projects. A project is, roughly speaking, a group of people working together on a common research or educational goal. This may be people in a particular research lab, a distributed set of collaborators, instructors and students in a class, etc.

A project is headed by a project leader. We require that project leaders be faculty, senior research staff, or others in an authoritative position. This is because we trust the project leader to approve other members into the project, ultimately making them responsible for the conduct of the users they approve. If CloudLab staff have questions about a project’s activities, its use of resources, etc., these questions will be directed to the project leader. Some project leaders run a lot of experiments themselves, while some choose to approve accounts for others in the project, who run most of the experiments. Either style works just fine in CloudLab.

Permissions for some operations/resources depend on the project that they belong to.

Currently, the only such permission is the ability to make a profile visible onto to the owning project. We expect to introduce more project-specific permissions features in the future.

5.4 Physical Resources

Users of CloudLab may get exclusive, low-level (root) control over physical machines. When allocated this way, no layers of virtualization or indirection get in the way of the way of performance, and users can be sure that no other users have access to the machines at the same time. This is an ideal situation for repeatable research.

Physical machines are re-imaged between users, so you can be sure that your physical machines don’t have any state left around from the previous user. You can find descriptions of the hardware in CloudLab’s clusters in the hardware chapter.

5.5 Virtual Machines

While CloudLab does have the ability to provision virtual machines (using the Xen hypervisor), we expect that the dominant use of CloudLab is that users will provision physical machines. Users (or the cloud software stacks that they run) may build their own virtual machines on these physical nodes using whatever hypervisor they wish. However, if your experiment could still benefit from use of virtual machines (e.g. to form a scalable pool of clients issuing requests to your cloud software stack), you can find more detail in the advanced topics section.

6 CloudLab for Classes

CloudLab can be a good environment for classes: it provides students with separate, isolated environments in which to do their work, and those environments can easily be brought to a known clean state so that all students get a consistent environment. Students get root access on their nodes, making CloudLab ideal for classes that require configuration or code changes to the operating system, or low-level access to the hardware. Because CloudLab is a network testbed, it is also idea for class projects that make use of multiple nodes.

There are some limitations that you should be aware of before deciding to use CloudLab for a class:

CloudLab has limited resources: in large classes, if students leave work to the last minute, or all students try to work on a deadline at the same time, they may run into problems getting the nodes they need in time.

CloudLab has a policy that resources can only be held while they are actively being used. Make sure that students are aware that individual resource allocations are not meant to last all quarter/semester: students should start experiments when they are ready to begin work, and terminate them when they are done.

You will want to make sure that students understand that storage on nodes in CloudLab is ephemeral and will be cleared out when experiments terminate. Addtionally, while CloudLab makes every effort to provide a reliable service, hardware failures, etc. can cause data loss. Students need to be sure to regularly copy off important work to avoid loss due to experiment termination or equipment failures.

Resource reservations can be useful for classes: they ensure that a certain number of resources are available for use by a project during a specified time period. It’s important to note that the resource pool is shared by all members of the project: for example, if you reserve 10 nodes, they can be used by up to 10 different experiments. Students do not need to do anything special to use these reservations: simply starting an experiment during the reservation period will automatically make use of the reservation.

If you have any questions about using CloudLab for classes, see the getting help chapter of this manual. We ask that instructors and TAs be the primary point of contact for students in requesting help with CloudLab: CloudLab has a small support staff that cannot handle a large volume of student support.

6.1 Creating Your Project on CloudLab

Students should not apply for their own projects on CloudLab: the instructor or an assistant (such as a TA) should apply for a project. Be sure to check the box indicating that the project is for a class, and include enough information in the application for us to have a look at the class, such as a link to the syllabus if available.

We ask that you create a new project for each class that you teach; eg. each quarter/semester of a course should apply for a new project on CloudLab. If course staff (instructors, TAs, etc.) already have an account on CloudLab, they can re-use their existing accounts: simply log in before applying for the new project.

Once the project has been approved, students will apply to join it as described below. Course staff can approve these accounts, without CloudLab staff having to get involved. One way to smooth the process of approving accounts for large classes is to allow TAs to approve them; when approving a new account, you may give it "manager" permissions, which allows the account to approve others. You may also give users this status via the "Members" tab of the project page at any time.

6.2 Having Students Join Your Project

When a CloudLab project is approved, the project leader will receive an email with a link to join the project. This link can be distributed to students in the course, who will use it to apply for an account. Once a student applies, the project leader will receive email with the application, and can approve or deny it. Alternately, students can join the project by following the standard CloudLab "join an existing project" instructions, and enter the name of the class’s project.

Students who apply to start their own project will have their project requests denied, and be asked to join the main project for the class.

Students who already have an account on CloudLab can join the project without making a new account; simply log in before applying to join the new project.

6.3 Project-Based Classes

Classes that are based around students working independently or in groups are an ideal use case for CloudLab; all members of the project are given logins on all experiments started under the project, which enables easy collaboration.

6.4 Assignment-Based Classes

For classes in which all students are working on the same task, it can be useful to either use an existing profile or create a new one. This way, all students will have the same environment, simplifying instructions and making debugging easier. The page for each profile has a "share" button that can be used to get a link to include with the assignment.

For large classes, we recommend considering whether virtual machines can meet students’ needs, as this reduces the resource load on CloudLab. Virtual machines should use shared mode so that they run on shared, not exclusive, hosts.

All members of a project are given shells on all experiments in the project: thus, TAs can log into nodes being used by students to help examine or debug code.

7 Resource Reservations

CloudLab supports reservations that allow you to request resources ahead of time. This can be useful for tutorials, classes, and to run larger experiments than are typically possible on a first-come, first-served basis.

Reservations in CloudLab are per-cluster and per-type. They are tied to a project: an experiment must belong to that project to use the reserved nodes (in some cases, the scope of reservations might be further restricted to experiments associated with a particular user, or a particular subgroup). For homogeneous groups of resources (such as compute nodes of the same type), reservations are not tied to specific nodes; this gives CloudLab maximum flexibility to do late-binding of nodes to experiments instantiated from reservations, which makes them minimally intrusive on other users of the testbed.

7.1 What Reservations Guarantee

Having a reservation guarantees that, at minimum, the specified quantity of nodes of the specified type will be available for use by the project during the specified time window.

Having a reservation does not automatically start an experiment at that time: it ensures that the specified number of nodes are available for use by any experiments that you (or in some cases, your fellow project members) start.

More than one experiment may use nodes from the reservation; for example, a tutorial in which 40 students each will run an experiment having a single node may be handled as a single 40-node reservation. You may also start and terminate multiple experiments in series over the course of the reservation: reserved nodes will not be returned to general use until your reservation ends.

A reservation guarantees the minimum number of nodes that will be available; you may use more so long as they are not used by other experiments or reservations.

Experiments run during a reservation do not automatically terminate at the end of the reservation; they simply become subject to the normal resource usage policies, and, for example, may become non-extendable due to other reservations that start after yours.

Important caveats include:

Nodes can take several minutes to be freed and reloaded between experiments; this means that they may take a few minutes to be available at the beginning of your reservation, and if you terminate an experiment during your reservation, they may take a few minutes to be usable for your next experiment.

The reservation system cannot account for factors outside of its control such as hardware failures; this many result in occasional failures to get the full number of nodes in exceptional circumstances.

The reservation system ensures that enough nodes of the specified type are available, but does not consider other factors such as network topology, and so cannot guarantee that all possible experiments can be started, even if they fit within the number of nodes.

7.2 How Reservations May Affect You

Reservations held by others may affect your experiments in two ways: they may prevent you from creating new experiments or may prevent you from extending existing experiments. This “admission control system” is how we ensure that nodes are available for those that have them reserved.

If there is an ongoing or upcoming reservation by another project, you may encounter an “admission control” failure when trying to create a new experiment. This means that, although there are enough nodes that are not currently allocated to a particular experiment, some or all of those nodes are required in order to fulfill a reservation. Note that the admission control system assumes that your experiment will last for the full default experiment duration when making this calcuation. For example, if the default experiment duration is 24 hours, and a large reservation will start in 10 hours, your experiment may fail to be created due to the admission control system. If the large reservation starts in 30 hours, you will be able to create the experiment, but you may not be able to extend it.

Reservations can also prevent you from extending existing experiments, if that extension would cause too few nodes to be available to satisfy a reservation. A message will appear on the experiment’s status page warning you when this situation will occur in the near future, and the reservation request dialog will limit the length of reservation that you can request. If this happens, be sure to save all of your work, as the administrators cannot grant extensions that would interfere with reservations.

7.3 Making a Reservation

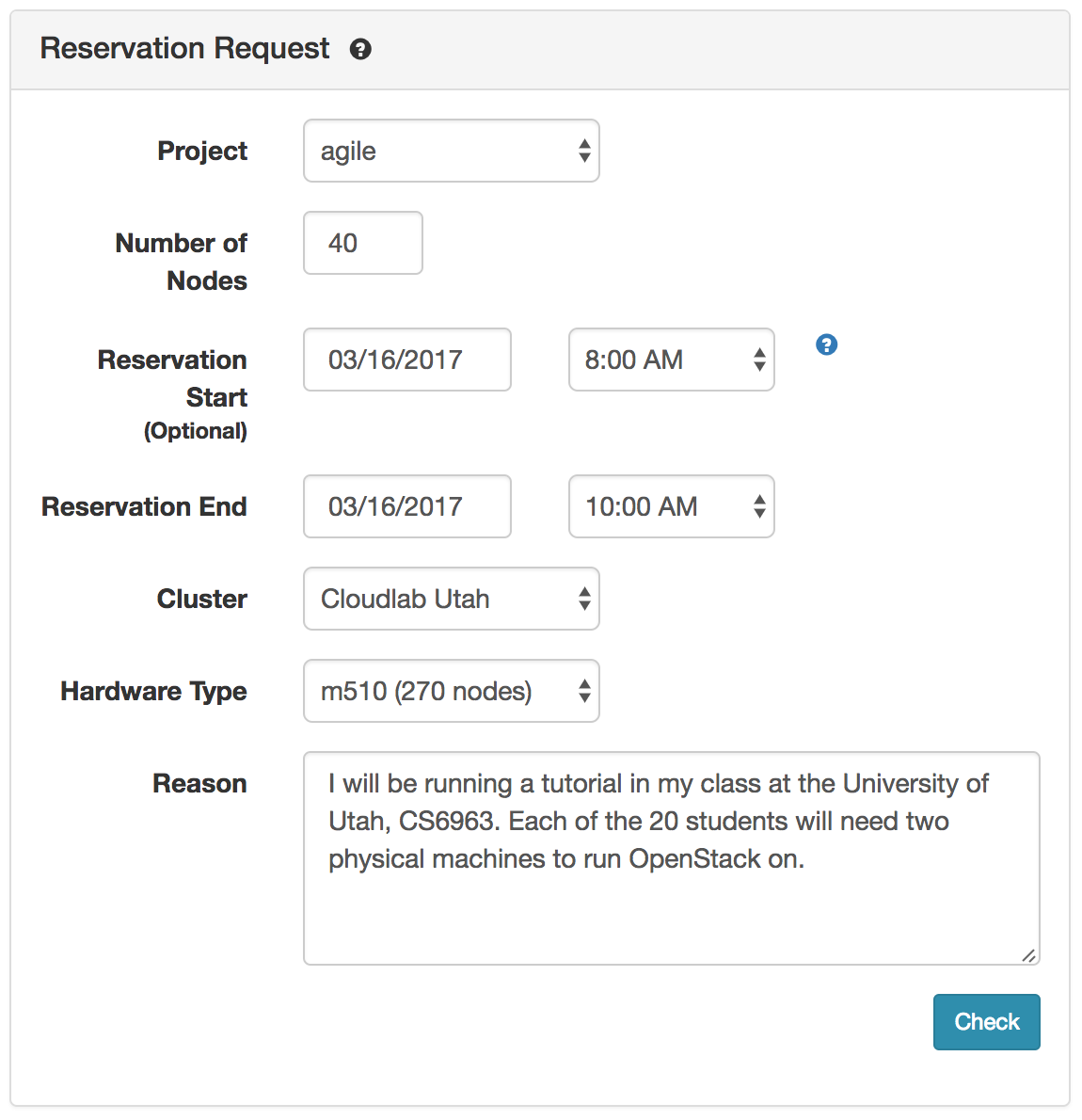

To request a reservation, use the “Reserve Nodes” item from the “Experiments” menu.

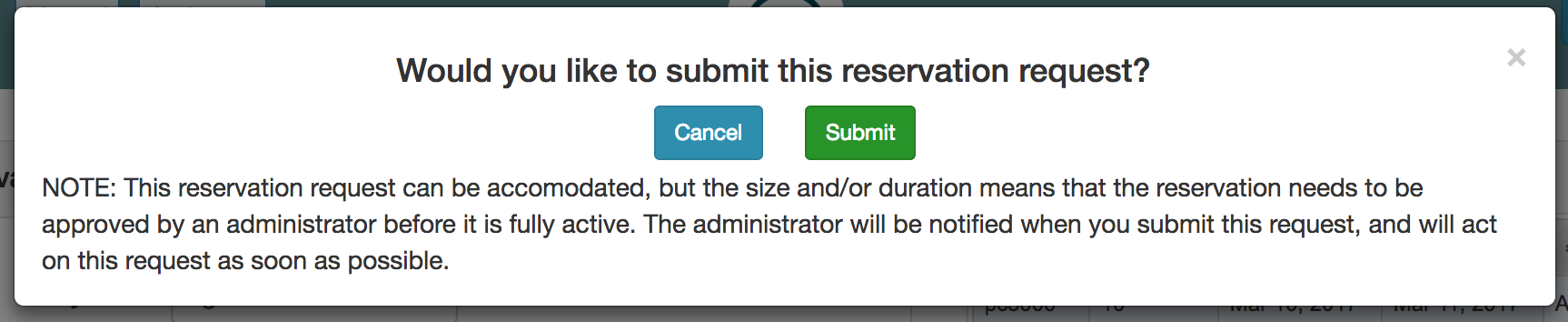

After filling out the number of and type of nodes and the time, the check button checks to see if the reservation is possible. If your request is satisfiable, you will get a dialog box that lets you submit the request.

If your request is not satisfiable, you will be given a chance to modify the request and “check” again. In this case, the time when there will not be enough nodes is shown, as will the number of nodes by which the request exceeds availalbe resources. To make your reservation fit, try asking for a different type of nodes, a smaller number, or a time further in the future.

Not all reservation requests are automatically accepted. Your request will be shown as “pending” while it is being reviewed by the CloudLab administrators. Requesting the smaller numbers of nodes, or for shorter periods of time, will maximize the chances that youre request is accepted. Be sure to include meaningful text in the “Reason” field, as administrators will use this to determine whether to grant your reservation.

You may have more than one reservation at a time; if you need resources of more than one type, or on different clusters, you can get this by requesting mutliple reservations.

7.4 Using a Reservation

To use a reservation, simply create experiments as normal. Experiments run during the duration of the reservation (even those begun before its start time) are automatically counted towards the reservation. Experiments run during reservations have expiration times as do normal experiments, so be sure to extend them if necessary.

Since reservations are associated with a project, if you belong to more than one, make sure to create the experiment under the correct project. (If the project is organised into subgroups, selecting the appropriate subgroup might also be necessary.)

Experiments are not automatically terminated at the conclusion of a reservation (though it may not be possible to extend them due to other reservations). Remember to terminate your experiments when you are done with them, as you would do normally.

If you finish your work, and still have any active experiments or related reservations, we ask that you terminate the associated experiments and delete the reservations so that the resources can be freed up for use by others.

7.5 Who Shares Access to Reservations

As mentioned above, every reservation is tied to exactly one project, and experiments belonging to other projects will be excluded from reserved resources for the duration of the reservation.

Different projects can have very different organisational models, however. One project might be run by a manager (such as a class instructor) who makes reservations on behalf of the entire project, and then individual project members start experiments each using a fraction of the reserved resources. Another project might operate informally, and its project members behave independently: those members might prefer to make private reservations for their own use (excluding their fellow project members from intentionally or unintentionally consuming the reserved resources with experiments of their own).

(Some projects are organised hierarchically, and make use of subgroups within the project; reservations can also be handled by subgroup, if applicable.)

CloudLab offers three reservation sharing options a project may choose from:

By user |

| An experiment will be able to access reserved resources only if the experiment and reservation are in the same project, and the experiment was started by the same user who requested the reservation. |

By project |

| An experiment will be able to access reserved resources only if the experiment and reservation are in the same project. |

By subgroup |

| An experiment will be able to access reserved resources only if the experiment and reservation are in the same project, and the experiment and the reservation specify the same subgroup. |

A project leader can request the reservation sharing semantics suitable for their project by contacting support@cloudlab.us.

Please note that the sharing semantics of reservations must be chosen (by the project leader) for the project as a whole. It is not possible to request a custom scope for individual reservations.

8 Describing a profile with python and geni-lib

See the geni-lib manual

geni-lib is a tool that allows users to generate RSpec files from Python code. CloudLab offers the ability to use geni-lib scripts as the definition of a profile, rather then the more primitive RSpec format. When you supply a geni-lib script on the Create Profile page, your script is uploaded to the server so that it can be executed in the geni-lib environment. This allows the script to be verified for correctness, and also produces the equivalent RSpec representation that you can view if you so desire.

When you provide a geni-lib script, you will see a slightly different set of buttons on the Create Profile page; next to the “Source” button there is an “XML” button that will pop up the RSpec XML for you to look at. The XML is read-only; if you want to change the profile, you will need to change the python source code that is displayed when you click on the “Source” button. Each time you change the python source code, the script is uploaded to the server and processed. Be sure to save your changes if you are updating an existing profile.

The following examples demonstrate basic geni-lib usage. More information about geni-lib and additional examples, can be found in the geni-lib repository. Its full documentation is online as part of this manual.

8.1 A single XEN VM node

"""An example of constructing a profile with a single Xen VM. Instructions: Wait for the profile instance to start, and then log in to the VM via the ssh port specified below. (Note that in this case, you will need to access the VM through a high port on the physical host, since we have not requested a public IP address for the VM itself.) """ # Import the Portal object. import geni.portal as portal import geni.rspec.pg as rspec # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Add a XenVM (named "node") to the request node = request.XenVM("node") # Write the request in RSpec format portal.context.printRequestRSpec() Open this profile on CloudLab"""An example of constructing a profile with a single Xen VM. Instructions: Wait for the profile instance to start, and then log in to the VM via the ssh port specified below. (Note that in this case, you will need to access the VM through a high port on the physical host, since we have not requested a public IP address for the VM itself.) """ # Import the Portal object. import geni.portal as portal import geni.rspec.pg as rspec # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Add a XenVM (named "node") to the request node = request.XenVM("node") # Write the request in RSpec format portal.context.printRequestRSpec()

This example demonstrates the two most important objects: the portal context (accessed through the portal.context object in the geni.portal module), and the request RSpec created by calling makeRequestRSpec() on it. These fundamental objects are central to essentially all CloudLab geni-lib profiles.

Another way to create a Request RSpec object is to call its constructuor, geni.rspec.pg.Request directly. We ask the Context to create it for us so it it is "bound" to the context and does not need to be explicitly passed to other functions on the context

Once the request object has been created, resources may be added to it by calling methods on it like RawPC() or rspec.pg.LAN. In this example, just a single node (created with the XenVM() constructor, asking for a single VM identified by the name "node") is requested.

Most functions called on Request objects are not directly members of that class. Rather, they are loaded as "extensions" by modules such as geni.rspec.emulab.

The final action the geni-lib script performs is to generate the XML representation of the request RSpec, with the printRequestRSpec() call on the last line. This has the effect of communicating the description of all the resources requested by the profile back to CloudLab.

You will also notice that the profile begins with a string literal (to be precise, it is a Python docstring). The initial text will also be used as the profile description; the text following the Instructions: line will be used as the corresponding instructions. This documentation is so important that adding the description to the profile is mandatory. (Using a docstring like this is not the only way to produce the description and instructions, although it is the most convenient.)

This simple example has now demonstrated all the important elements of a geni-lib profile. The portal context and request RSpec objects, the final printRequestRSpec() call, and the docstring description and instructions are “boilerplate” constructions, and you will probably include similar or identical versions of them in every geni-lib profile you create unless you are doing something quite unusual.

8.2 A single physical host

"""An example of constructing a profile with a single raw PC. Instructions: Wait for the profile instance to start, and then log in to the host via the ssh port specified below. """ import geni.portal as portal import geni.rspec.pg as rspec # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Create a raw PC node = request.RawPC("node") # Print the RSpec to the enclosing page. portal.context.printRequestRSpec() Open this profile on CloudLab"""An example of constructing a profile with a single raw PC. Instructions: Wait for the profile instance to start, and then log in to the host via the ssh port specified below. """ import geni.portal as portal import geni.rspec.pg as rspec # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Create a raw PC node = request.RawPC("node") # Print the RSpec to the enclosing page. portal.context.printRequestRSpec()

As mentioned above, most of these simple examples consist of boilerplate geni-lib fragments, and indeed the portal context and request RSpec operations are unchanged from the previous script. The big difference, though (other than the updated documentation) is that in this case the RawPC() method is invoked on the Request object instead of XenVM(). As you might expect, the new profile will request a physical host instead of a virtual one. (A side effect of using a real machine is that it automatically comes with a unique public IP address, where the VM used in the earlier example did not. Profiles can request public IP addresses for VMs too, though it does not happen by default.)

8.3 Two XenVM nodes with a link between them

"""An example of constructing a profile with two VMs connected by a LAN. Instructions: Wait for the profile instance to start, and then log in to either VM via the ssh ports specified below. """ import geni.portal as portal import geni.rspec.pg as rspec request = portal.context.makeRequestRSpec() # Create two XenVM nodes. node1 = request.XenVM("node1") node2 = request.XenVM("node2") # Create a link between them link1 = request.Link(members = [node1,node2]) portal.context.printRequestRSpec() Open this profile on CloudLab"""An example of constructing a profile with two VMs connected by a LAN. Instructions: Wait for the profile instance to start, and then log in to either VM via the ssh ports specified below. """ import geni.portal as portal import geni.rspec.pg as rspec request = portal.context.makeRequestRSpec() # Create two XenVM nodes. node1 = request.XenVM("node1") node2 = request.XenVM("node2") # Create a link between them link1 = request.Link(members = [node1,node2]) portal.context.printRequestRSpec()

This example demonstrates two important geni-lib concepts: first, adding more than a single node to the request (which is a relatively straightforward matter of calling more than one node object constructor, being careful to use a different name each time). It also shows how to add links between nodes. It is possible to construct links and LANs in a more complicated manner (such as explicitly creating Interface objects to control interfaces), but the simplest case is to supply the member nodes at the time the link is created.

8.4 Two ARM64 servers in a LAN

"""An example of constructing a profile with two ARM64 nodes connected by a LAN. Instructions: Wait for the profile instance to start, and then log in to either host via the ssh ports specified below. """ import geni.portal as portal import geni.rspec.pg as rspec request = portal.context.makeRequestRSpec() # Create two raw "PC" nodes node1 = request.RawPC("node1") node2 = request.RawPC("node2") # Set each of the two to specifically request "m400" nodes, which in CloudLab, are ARM node1.hardware_type = "m400" node2.hardware_type = "m400" # Create a link between them link1 = request.Link(members = [node1, node2]) portal.context.printRequestRSpec() Open this profile on CloudLab"""An example of constructing a profile with two ARM64 nodes connected by a LAN. Instructions: Wait for the profile instance to start, and then log in to either host via the ssh ports specified below. """ import geni.portal as portal import geni.rspec.pg as rspec request = portal.context.makeRequestRSpec() # Create two raw "PC" nodes node1 = request.RawPC("node1") node2 = request.RawPC("node2") # Set each of the two to specifically request "m400" nodes, which in CloudLab, are ARM node1.hardware_type = "m400" node2.hardware_type = "m400" # Create a link between them link1 = request.Link(members = [node1, node2]) portal.context.printRequestRSpec()

We now come to demonstrate requesting particular properties of nodes—

8.5 A VM with a custom size

"""An example of constructing a profile with a single Xen VM. Instructions: Wait for the profile instance to start, and then log in to the VM via the ssh port specified below. (Note that in this case, you will need to access the VM through a high port on the physical host, since we have not requested a public IP address for the VM itself.) """ import geni.portal as portal import geni.rspec.pg as rspec # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Create a XenVM node = request.XenVM("node") # Ask for two cores node.cores = 2 # Ask for 2GB of ram node.ram = 2048 # Add an extra 8GB of space on the primary disk. # NOTE: Use fdisk, the extra space is in the 4th DOS partition, # you will need to create a filesystem and mount it. node.disk = 8 # Alternate method; request an ephemeral blockstore mounted at /mydata. # NOTE: Comment out the above line (node.disk) if you do it this way. #bs = node.Blockstore("bs", "/mydata") #bs.size = "8GB" #bs.placement = "nonsysvol" # Print the RSpec to the enclosing page. portal.context.printRequestRSpec() Open this profile on CloudLab"""An example of constructing a profile with a single Xen VM. Instructions: Wait for the profile instance to start, and then log in to the VM via the ssh port specified below. (Note that in this case, you will need to access the VM through a high port on the physical host, since we have not requested a public IP address for the VM itself.) """ import geni.portal as portal import geni.rspec.pg as rspec # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Create a XenVM node = request.XenVM("node") # Ask for two cores node.cores = 2 # Ask for 2GB of ram node.ram = 2048 # Add an extra 8GB of space on the primary disk. # NOTE: Use fdisk, the extra space is in the 4th DOS partition, # you will need to create a filesystem and mount it. node.disk = 8 # Alternate method; request an ephemeral blockstore mounted at /mydata. # NOTE: Comment out the above line (node.disk) if you do it this way. #bs = node.Blockstore("bs", "/mydata") #bs.size = "8GB" #bs.placement = "nonsysvol" # Print the RSpec to the enclosing page. portal.context.printRequestRSpec()

The earlier examples requesting VMs used the default number of cores, quantity of RAM, and disk size. It’s also possible to customize these value, as this example does by setting the cores, ram, and disk properties of the XenVM class (which is a subclass of rspec.pg.Node.)

8.6 Set a specific IP address on each node

"""An example of constructing a profile with node IP addresses specified manually. Instructions: Wait for the profile instance to start, and then log in to either VM via the ssh ports specified below. (Note that even though the EXPERIMENTAL data plane interfaces will use the addresses given in the profile, you will still connect over the control plane interfaces using addresses given by the testbed. The data plane addresses are for intra-experiment communication only.) """ import geni.portal as portal import geni.rspec.pg as rspec request = portal.context.makeRequestRSpec() node1 = request.XenVM("node1") iface1 = node1.addInterface("if1") # Specify the component id and the IPv4 address iface1.component_id = "eth1" iface1.addAddress(rspec.IPv4Address("192.168.1.1", "255.255.255.0")) node2 = request.XenVM("node2") iface2 = node2.addInterface("if2") # Specify the component id and the IPv4 address iface2.component_id = "eth2" iface2.addAddress(rspec.IPv4Address("192.168.1.2", "255.255.255.0")) link = request.LAN("lan") link.addInterface(iface1) link.addInterface(iface2) portal.context.printRequestRSpec() Open this profile on CloudLab"""An example of constructing a profile with node IP addresses specified manually. Instructions: Wait for the profile instance to start, and then log in to either VM via the ssh ports specified below. (Note that even though the EXPERIMENTAL data plane interfaces will use the addresses given in the profile, you will still connect over the control plane interfaces using addresses given by the testbed. The data plane addresses are for intra-experiment communication only.) """ import geni.portal as portal import geni.rspec.pg as rspec request = portal.context.makeRequestRSpec() node1 = request.XenVM("node1") iface1 = node1.addInterface("if1") # Specify the component id and the IPv4 address iface1.component_id = "eth1" iface1.addAddress(rspec.IPv4Address("192.168.1.1", "255.255.255.0")) node2 = request.XenVM("node2") iface2 = node2.addInterface("if2") # Specify the component id and the IPv4 address iface2.component_id = "eth2" iface2.addAddress(rspec.IPv4Address("192.168.1.2", "255.255.255.0")) link = request.LAN("lan") link.addInterface(iface1) link.addInterface(iface2) portal.context.printRequestRSpec()

This code sample assigns specific IP addresses to interfaces on the nodes it requests.

Some of the available qualifiers on requested nodes are specified by manipulating attributes within the node (or interface) object directly. The hardware_type in the previous example is one such case, as is the component_id here. (Note that the component_id in this example is applied to an interface, although it is also possible to specify component_ids on nodes, too, to request a particular physical host.)

Other modifications to requests require dedicated methods. For instance, see the addAddress() calls made on each of the two interfaces above. In each case, an IPv4Address object is obtained from the appropriate constructor (the parameters are the address and the netmask, respectively), and then added to the corresponding interface.

8.7 Specify an operating system and set install and execute scripts

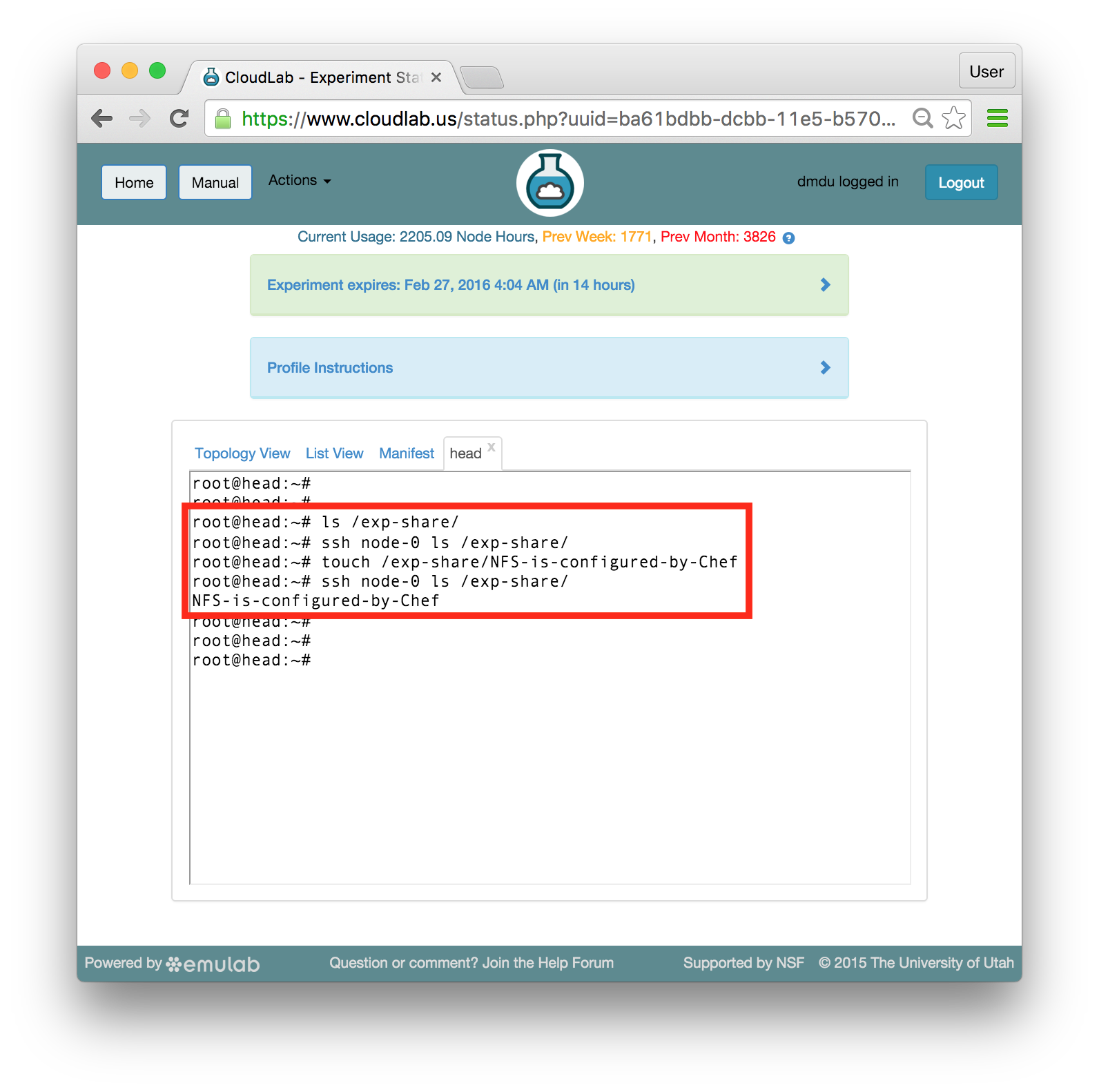

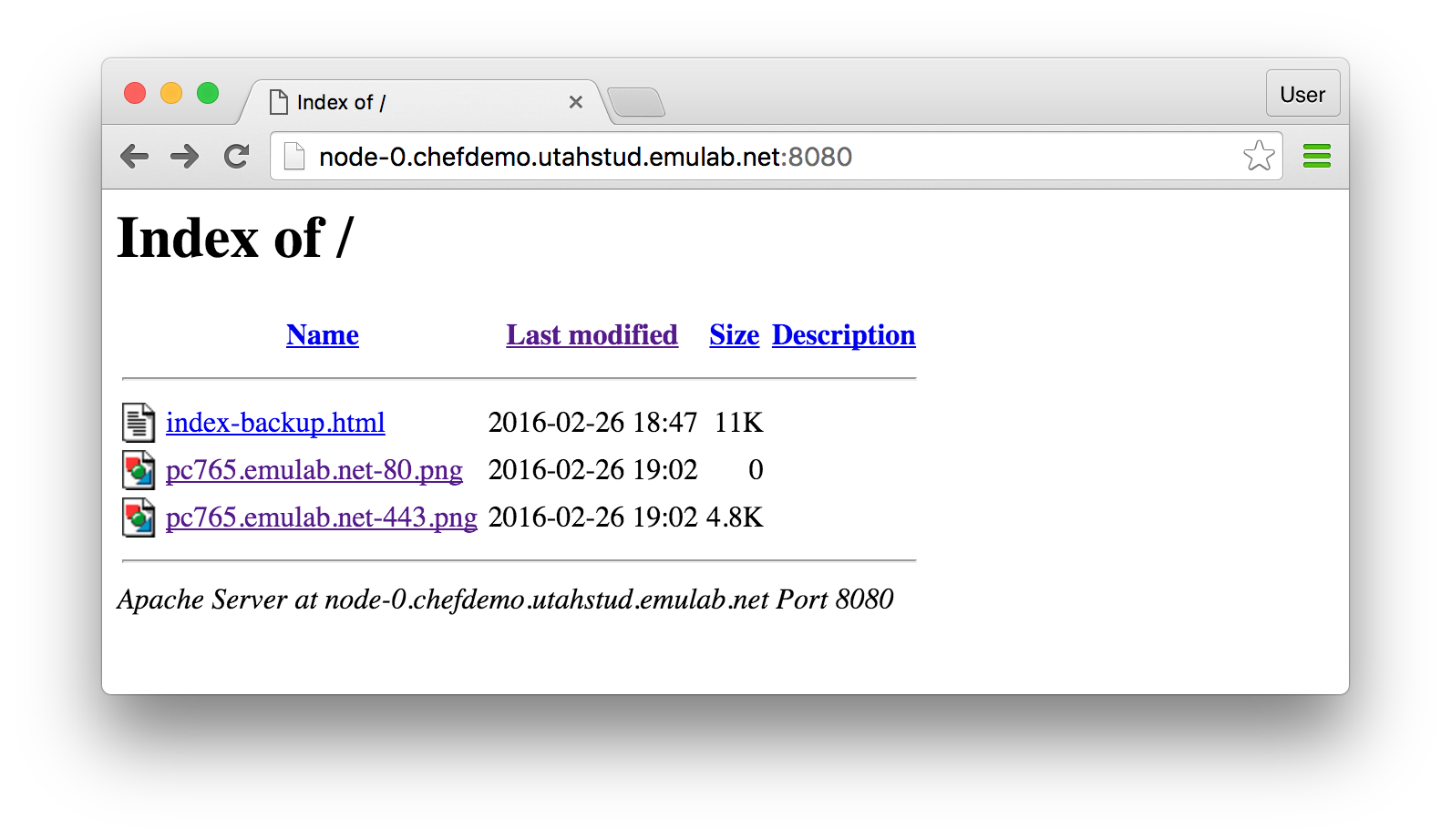

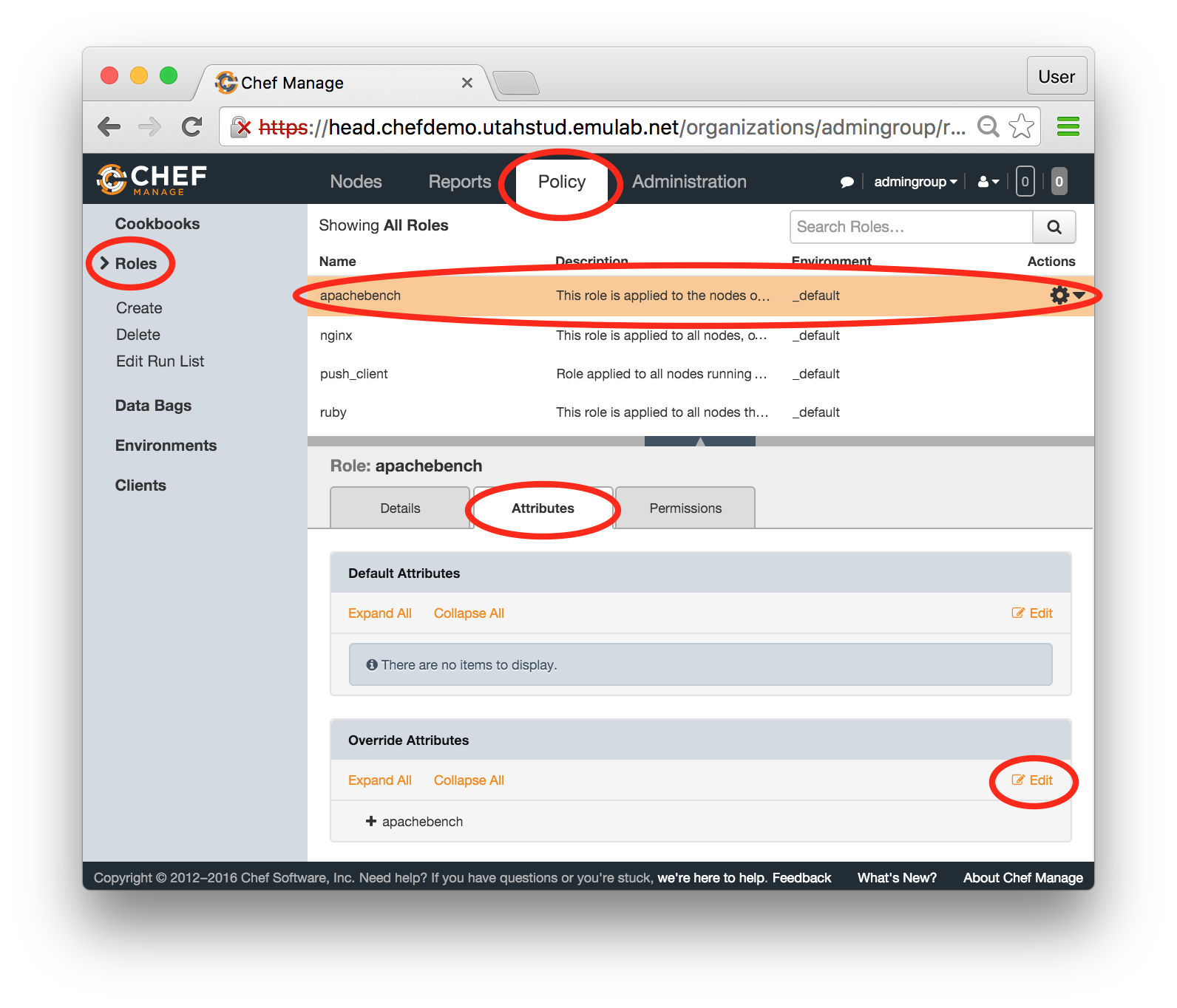

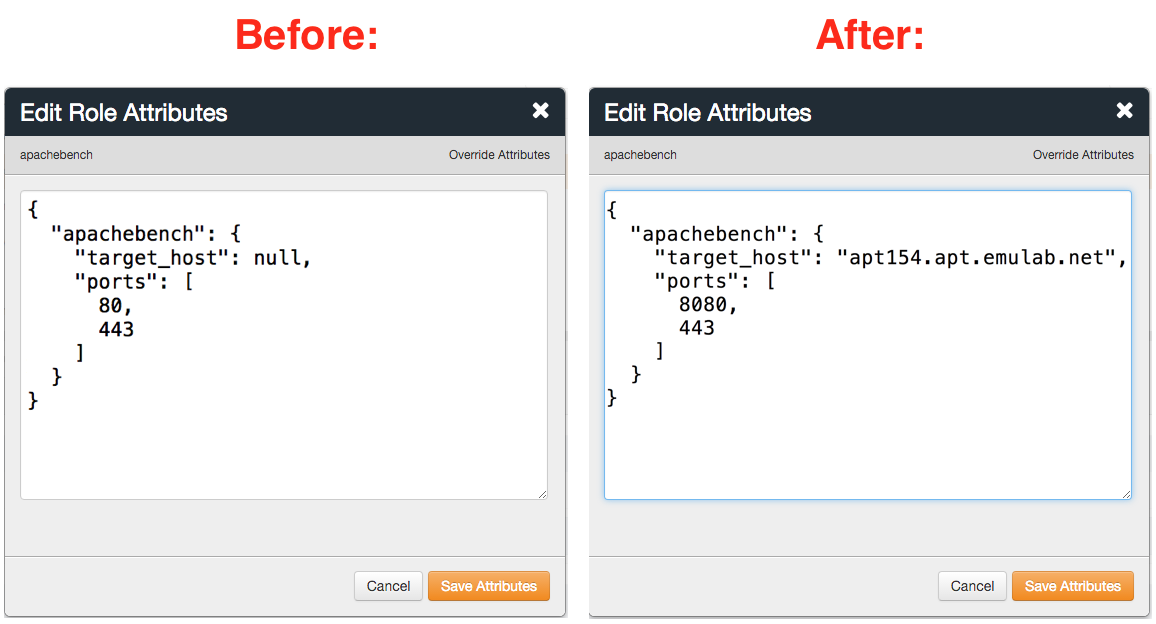

"""An example of constructing a profile with install and execute services. Instructions: Wait for the profile instance to start, then click on the node in the topology and choose the `shell` menu item. The install and execute services are handled automatically during profile instantiation, with no manual intervention required. """ # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as rspec # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Add a raw PC to the request. node = request.RawPC("node") # Request that a specific image be installed on this node node.disk_image = "urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU20-64-STD"; # Install and execute scripts on the node. THIS TAR FILE DOES NOT ACTUALLY EXIST! node.addService(rspec.Install(url="http://example.org/sample.tar.gz", path="/local")) node.addService(rspec.Execute(shell="bash", command="/local/example.sh")) portal.context.printRequestRSpec()Open this profile on CloudLab"""An example of constructing a profile with install and execute services. Instructions: Wait for the profile instance to start, then click on the node in the topology and choose the `shell` menu item. The install and execute services are handled automatically during profile instantiation, with no manual intervention required. """ # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as rspec # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Add a raw PC to the request. node = request.RawPC("node") # Request that a specific image be installed on this node node.disk_image = "urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU20-64-STD"; # Install and execute scripts on the node. THIS TAR FILE DOES NOT ACTUALLY EXIST! node.addService(rspec.Install(url="http://example.org/sample.tar.gz", path="/local")) node.addService(rspec.Execute(shell="bash", command="/local/example.sh")) portal.context.printRequestRSpec()